Real-Time Scheduling on Linux

The open-source software community follows two major approaches to bring real-time requirements into Linux*:

Improve the Linux kernel itself so that it matches real-time requirements, by providing bounded latencies, real-time APIs, and so on. The mainline Linux kernel and the PREEMPT_RT project follow this approach.

Add a layer below the Linux kernel (for example, OS Real-time extension) that will handle all real-time requirements, so that the behavior of Linux does not affect real-time tasks. The Xenomai* project follows this approach.

General Definitions

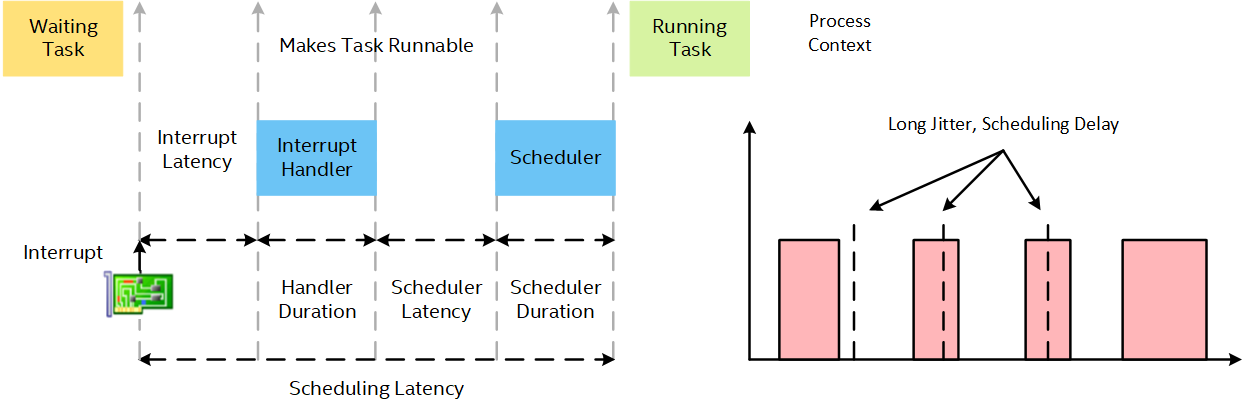

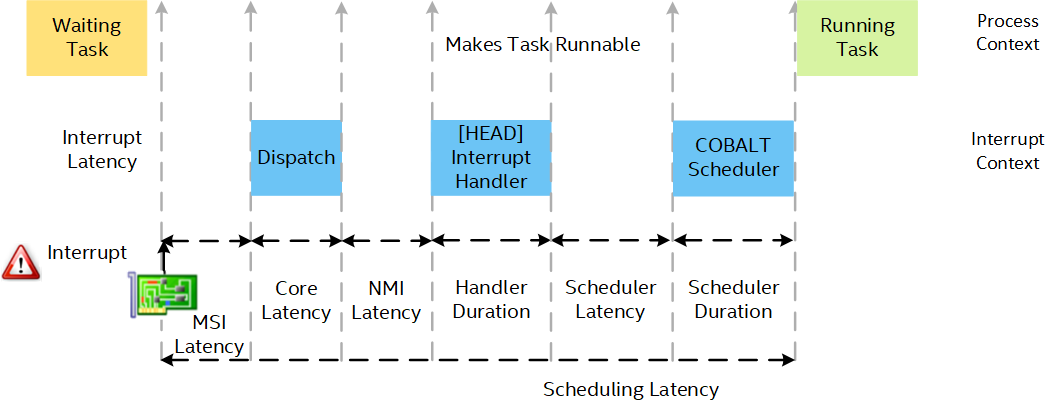

Both approaches aim to achieve the lowest possible thread scheduling latency on Linux multi-CPU systems that run real-time and non-real-time software side by side.

Note: Scheduling Latency = Interrupt Latency + Handler Duration + Scheduler Latency + Scheduler Duration

IA64 Interrupt Definitions

Interrupts can be described as an “immediate response to hardware events”. The execution of this response is typically called an Interrupt Service Routine (ISR). In the process of servicing the ISR, many latencies may occur. These latencies are divided into two components based on their originating source:

Software Interrupt Latency can be predicted based on the system interrupt disable time and the size of the system ISR prologue, which saves the registers manually and performs other operations before the interrupt handler starts.

Hardware Interrupt Latency reflects the time required for operations such as retiring in-flight instructions, determining address of interrupt handler, and storing all CPU registers.

The following are the various types of interrupt sources:

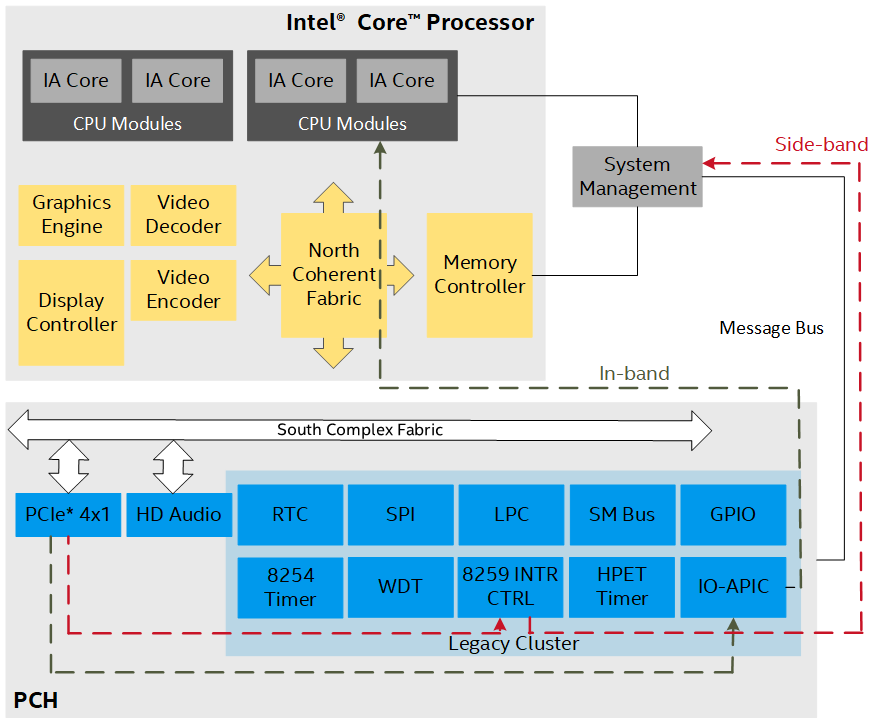

Legacy Interrupts XT-PIC: The side-band signals are backward compatible with PC/AT peripheral IRQs (that is, PIRQ, INTR, INTx).

Message-Signaled Interrupts (MSI): The in-band messages, which target a memory address, send data along with the interrupt message. MSI messages:

Achieve the lowest latency possible. The CPU begins executing the MSI ISR immediately after it finishes its current instruction.

Appear as a Posted Memory Write transaction. As such, a PCI function can request up to 32 MSI messages.

Send data along with the interrupt message but do not receive any hardware acknowledgment.

Write specific device addresses and send transactions to the local IO-APIC of the CPU to which it is assigned.

Non-Maskable Interrupts (NMI): Typically system events (for example, power-button, watchdog timer, and so on). NMIs usually originate from Power-Control Unit (PCU) or IA64 firmware sources.

System Control Interrupt (SCI): This is used by hardware to notify the OS through ACPI 5.0, PCAT, or IASOC (Hardware-reduced ACPI).

System Management Interrupt (SMI): This is generated by the power management hardware on the board. SMIs exhibit the following characteristics:

SMI processing can last for hundreds of microseconds, and the SMI is the highest priority interrupt (even higher than the NMI).

The CPU receives an SMI whenever the mode is changed (for example, thermal sensor events, open chassis) and jumps to a hard-wired location in a special SMM address space (System Management RAM).

The SMI cannot be intercepted by user-code since there are no vectors in the CPU. This effectively renders SMI interrupts “invisible” to the OS.

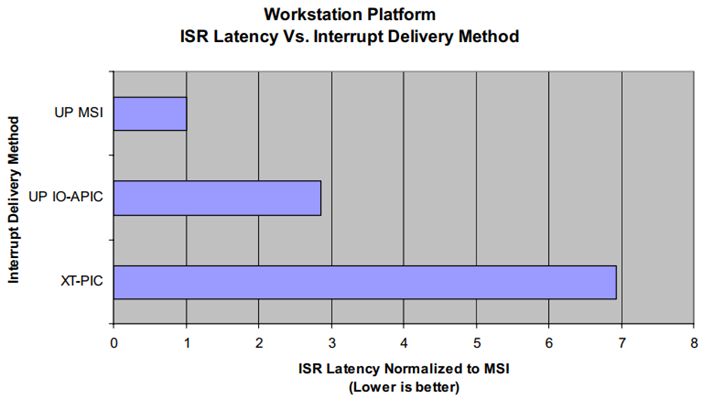

Reducing Interrupt Latency Through the Use of Message Signaled Interrupts-James Coleman-Intel

Linux Multi-threading Definitions

User-space process: This is created when the POSIX fork() command is called and comprises:

An address space (for example, vma), which contains the program code, data, stack, shared libraries, and so on.

One thread that starts executing the main() function.

User-thread: This can be created or added inside an existing process using the POSIX pthread_create() command.

User-threads run in the same address space as the initial process thread.

User-threads start executing a function passed as argument to pthread_create().

Kernel-thread: This can be created or added inside a kernel module using the kernel kthread_create() API (this is a Linux kernel function, not a POSIX one).

Kernel-threads are light-weight processes cloned from process 0 (the swapper), which share its memory map and limits, but contain a copy of its file descriptor table.

Kernel-threads run in the kernel address space and do not have a user address space of their own.

General Linux Timer Definitions

Isochronous applications aim to complete their tasks at exact defined times. Unfortunately, the Linux standard timer does not generally meet the required cycle deadline resolution, precision, or both.

For example, a typical timer function in Linux such as the gettimeofday() system call will return the clock time with microsecond precision, where nanosecond timer precision is often desirable.

To mitigate this limitation, additional POSIX APIs that provide more precise timing capability have been created:

Timer cyclic-task scheduling: Within the PREEMPT_RT scheduling context, a cyclic-task timer can be created with a given clock-domain using the POSIX timer_create() command. This timer exhibits the following characteristics:

Delivery of signals at the expiry of POSIX timers cannot be done in the hard interrupt context of the high resolution timer interrupt.

Signal delivery must instead happen in thread context because of locking constraints, which results in long latencies.

    /* POSIX timers */
    int timer_create(clockid_t clockid, struct sigevent *sevp, timer_t *timerid);

Task nanosleep cyclic scheduling wake-up: Within the Xenomai* COBALT task scheduling context, cyclic-task timers can be created with a given clock-domain using the POSIX clock_nanosleep() command. This timer exhibits the following characteristics:

The clock_nanosleep() command does not rely on the signaling mechanism, and hence does not suffer from the latency problem.

The task sleep-state timer expiry is executed in the context of the high resolution timer interrupt.

If an application does not use asynchronous signal handlers, it is better to use clock_nanosleep.

    /* clock_nanosleep */
    int clock_nanosleep(clockid_t clock_id, int flags, const struct timespec *request, struct timespec *remain);

PREEMPT_RT Preemptive and Priority Scheduling on Linux OS Runtime

The PREEMPT_RT project is an open-source framework under the GPLv2 License led by Linux kernel developers.

The goal is to gradually improve the Linux kernel regarding real-time requirements and to get these improvements merged into the mainline kernel. PREEMPT_RT development works very closely with mainline development.

Many of the improvements designed, developed, and debugged inside PREEMPT_RT over the years are now part of the mainline Linux kernel. The project is a long-term branch of the Linux kernel that ultimately should disappear when everything is merged.

Setting Low-latency Interrupt Software Handling

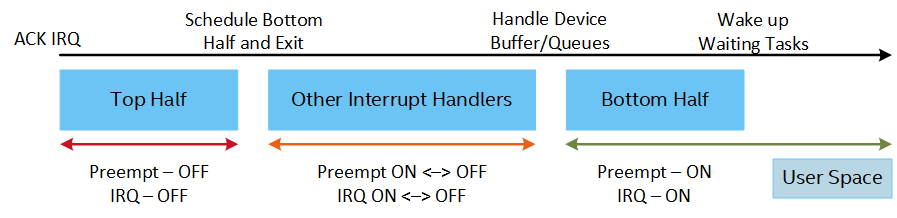

PREEMPT_RT enforces fundamental software design rules to reach full-preemptive and low-latency scheduling by evangelizing “No non-threaded IRQ nesting” development practices across kernel code and numerous drivers/modules code-base.

A top-half, started by the CPU as soon as interrupts are flagged, is supposed to complete as quickly as possible:

The interrupt controller (APIC, MSI, and so on) receives an event from hardware that triggers an interrupt.

The processor switches modes, saves registers, disables preemption, and disables IRQs.

Generic Interrupt vector code is called.

At this point, the context of the interrupted activity is saved.

Lastly, the relevant ISR pertaining to the interrupt event is identified and called.

A bottom-half, scheduled by the top-half, starts as a soft-IRQ, tasklet, or work-queue task and completes the ISR execution:

For real-time critical interrupts, bottom-halves should be used very carefully.

ISR execution is nondeterministic, because it is a function of the top-halves of all other interrupts.

For non-real-time interrupts, bottom-halves are threaded to reduce the duration of non-preemptible execution.

Multi-thread scheduling preemption can happen when:

High priority task wakes up as a result of an interrupt

Time slice expires

System call results in task sleeping

Multi-thread scheduling preemption cannot happen inside kernel-code critical sections, that is, when:

Interrupts are explicitly disabled

Preemption is explicitly disabled

Spinlock critical sections unless using preemptive spinlocks

Set Preemptive and Priority Scheduling Policies

The standard Linux kernel includes different scheduling policies, as described in the manpage for sched. There are three policies relevant for real-time tasks:

SCHED_FIFO implements a first-in, first-out scheduling algorithm:

When a SCHED_FIFO task starts running, it continues to run until either it is preempted by a higher priority thread, it is blocked by an I/O request, or it calls the yield function.

All other tasks of lower priority will not be scheduled until the SCHED_FIFO task releases the CPU.

Two SCHED_FIFO tasks with the same priority cannot preempt each other.

SCHED_RR is identical to SCHED_FIFO scheduling; the only difference is in the way it handles processes with the same priority:

The scheduler assigns each SCHED_RR task a time slice. When the process exhausts its time slice, the scheduler moves it to the end of the list of processes at its priority.

In this manner, SCHED_RR tasks of a given priority are scheduled in a round-robin manner among themselves.

If there is only one process at a given priority, SCHED_RR scheduling is identical to SCHED_FIFO scheduling.

SCHED_DEADLINE is implemented using the Earliest Deadline First (EDF) scheduling algorithm, in conjunction with the Constant Bandwidth Server (CBS). The SCHED_DEADLINE policy uses three parameters to schedule tasks: Runtime, Deadline, and Period.

A SCHED_DEADLINE task gets “runtime” nanoseconds of CPU time for every “period” nanoseconds. The “runtime” nanoseconds should be available within “deadline” nanoseconds from the period beginning.

Tasks are scheduled using EDF based on the scheduling deadlines (these are calculated every time the task wakes up).

The task with the earliest deadline is executed.

SCHED_DEADLINE threads are the highest priority (user controllable) threads in the system.

If any SCHED_DEADLINE thread is runnable, it will preempt any thread scheduled under one of the other policies.

With Priority Inheritance, the thread that holds a lock (for example, spin_lock, mutex, … ) temporarily inherits the priority of the highest-priority thread waiting for that lock.

CONFIG_PREEMPT_RT provides priority-inheritance capabilities to rtmutex, spin_lock, and mutex code. Without it, a process with a low priority might hold a lock needed by a higher priority process, effectively reducing the priority of the waiting process (priority inversion).

chrt Runtime Processes Linux Scheduling Policies

On Linux, the chrt command can be used to set the real-time attributes of a process, such as policy and priority:

To set the scheduling policy to FIFO, where priority values for SCHED_FIFO can be between 1 and 99:

    $ chrt --fifo --pid <priority> <pid>

The following command sets the scheduling policy to SCHED_FIFO with priority 99 for the process with pid 1823:

    $ chrt --fifo --pid 99 1823

To set the scheduling policy to round-robin, where priority values for SCHED_RR can be between 1 and 99:

    $ chrt --rr --pid <priority> <pid>

The following command sets the scheduling policy to SCHED_RR with priority 99 for the process with pid 1823:

    $ chrt --rr --pid 99 1823

To set the scheduling policy to deadline, where the priority value for SCHED_DEADLINE is 0 and runtime <= deadline <= period:

    $ chrt --deadline --sched-runtime <nanoseconds> \
                      --sched-period <nanoseconds> \
                      --sched-deadline <nanoseconds> \
                      --pid <priority> <pid>

The following example sets the scheduling policy to SCHED_DEADLINE for the process with pid 472. The runtime, deadline, and period are given in nanoseconds. First, list the current policies:

    $ ps f -g 0 -o pid,policy,rtprio,cmd

The output should look similar to the following:

    PID POL RTPRIO CMD
      1 TS       - /sbin/init nosoftlockup noht 3
    185 TS       - /lib/systemd/systemd-journald
    209 TS       - /lib/systemd/systemd-udevd
    472 RR      99 /usr/sbin/acpid
    476 TS       - /usr/sbin/thermald --no-daemon --dbus-enable
    486 TS       - /usr/sbin/jhid -d

Run the following command to change the policy to SCHED_DEADLINE (shown as #6 by ps):

    $ chrt --deadline --sched-runtime 10000 \
                      --sched-deadline 100000 \
                      --sched-period 1000000 \
                      --pid 0 472

Run the following command to see the change in the policy of a task:

    $ ps f -g 0 -o pid,policy,rtprio,cmd

The output should look similar to the following:

    PID POL RTPRIO CMD
      1 TS       - /sbin/init nosoftlockup noht 3
    185 TS       - /lib/systemd/systemd-journald
    209 TS       - /lib/systemd/systemd-udevd
    472 #6       0 /usr/sbin/acpid
    476 TS       - /usr/sbin/thermald --no-daemon --dbus-enable
    486 TS       - /usr/sbin/jhid -d

Condensing this information in a table:

| Priority | Names |
|---|---|
| 99 | posixcputmr, migration |
| 50 | All IRQ handlers except 39-s-mmc0 and 42-s-mmc1. For example, 367-enp2s0 deals with one of the network interfaces |
| 49 | IRQ handlers 39-s-mmc0 and 42-s-mmc1 |
| 1 | i915/signal, ktimersoftd, rcu_preempt, rcu_sched, rcub, rcuc |
| 0 | The rest of the tasks currently running |

The highest priority real-time tasks in this system are the timers and migration threads, with priority 99. The lowest priority real-time tasks are those listed with priority 1 in the table, such as i915/signal, ktimersoftd, and the RCU threads.

sched_setscheduler() and sched_setattr() Processes Runtime Linux Scheduling Policies

The sched_setscheduler function can be used to change the active scheduling policy. The following values can be used to set real-time scheduling policies:

SCHED_FIFO

SCHED_RR

Note: Non-realtime scheduling policies such as SCHED_OTHER, SCHED_BATCH, and SCHED_IDLE are also available. There is no support for deadline scheduling policy in the sched_setscheduler function.

The sched_setscheduler function sets the SCHED_FIFO or SCHED_RR scheduling policy and priority for a real-time thread:

    int sched_setscheduler(pid_t pid, int policy, const struct sched_param *param);

The following code configures the running process to use SCHED_RR scheduling with priority 99:

    struct sched_param param_rr;
    memset(&param_rr, 0, sizeof(param_rr));
    param_rr.sched_priority = 99;
    pid_t pid = getpid();
    if (sched_setscheduler(pid, SCHED_RR, &param_rr))
        perror("sched_setscheduler error:");

The following code configures the running process to use SCHED_FIFO scheduling with priority 99:

    struct sched_param param_fifo;
    memset(&param_fifo, 0, sizeof(param_fifo));
    param_fifo.sched_priority = 99;
    pid_t pid = getpid();
    if (sched_setscheduler(pid, SCHED_FIFO, &param_fifo))
        perror("sched_setscheduler error:");

The sched_setattr function sets the SCHED_DEADLINE scheduling policy (from kernel version 3.14):

    int sched_setattr(pid_t pid, struct sched_attr *attr, unsigned int flags);

In the example below, the running process is assigned the SCHED_DEADLINE policy. The process gets a runtime of 2 milliseconds in every 9-millisecond period. The runtime should be available within a 5-millisecond deadline from the beginning of the period.

    #define _GNU_SOURCE
    #include <stdint.h>
    #include <stdio.h>
    #include <unistd.h>
    #include <sys/syscall.h>
    #include <sched.h>
    #include <string.h>
    #include <linux/sched.h>
    #include <sys/types.h>

    struct sched_attr {
        uint32_t size;
        uint32_t sched_policy;
        uint64_t sched_flags;
        int32_t sched_nice;
        uint32_t sched_priority;
        uint64_t sched_runtime;
        uint64_t sched_deadline;
        uint64_t sched_period;
    };

    /* Thin wrapper around the raw system call */
    int sched_setattr(pid_t pid, const struct sched_attr *attr, unsigned int flags)
    {
        return syscall(__NR_sched_setattr, pid, attr, flags);
    }

    int main()
    {
        unsigned int flags = 0;
        int status = -1;
        struct sched_attr attr_deadline;

        memset(&attr_deadline, 0, sizeof(attr_deadline));
        pid_t pid = getpid();

        attr_deadline.sched_policy = SCHED_DEADLINE;
        attr_deadline.sched_runtime = 2*1000*1000;   /* 2 ms, in nanoseconds */
        attr_deadline.sched_deadline = 5*1000*1000;  /* 5 ms, in nanoseconds */
        attr_deadline.sched_period = 9*1000*1000;    /* 9 ms, in nanoseconds */
        attr_deadline.size = sizeof(attr_deadline);
        attr_deadline.sched_flags = 0;
        attr_deadline.sched_nice = 0;
        attr_deadline.sched_priority = 0;

        status = sched_setattr(pid, &attr_deadline, flags);
        if (status)
            perror("sched_setattr error:");
        return 0;
    }

pthread POSIX Linux Runtime Scheduling APIs

The scheduling policy for threads can be set using the pthread functions: pthread_attr_setschedpolicy, pthread_attr_setschedparam, pthread_attr_setinheritsched.

The following are the steps to create a real-time thread using FIFO scheduling policy and POSIX pthread functions:

To create a thread using FIFO scheduling, initialize the pthread_attr_t (thread attribute object) object using the pthread_attr_init function:

    pthread_attr_t attr_fifo;
    pthread_attr_init(&attr_fifo);

After initialization, set the thread attributes object referred to by attr_fifo to SCHED_FIFO (FIFO scheduling policy) using pthread_attr_setschedpolicy:

    pthread_attr_setschedpolicy(&attr_fifo, SCHED_FIFO);

Set the priority (can take values between 1 and 99 for FIFO scheduling) of the thread using the sched_param object and copy the parameter values to the thread attribute using pthread_attr_setschedparam:

    struct sched_param param_fifo;
    param_fifo.sched_priority = 92;
    pthread_attr_setschedparam(&attr_fifo, &param_fifo);

Set the inherit-scheduler attribute of the thread attribute. The inherit-scheduler attribute determines whether a new thread takes scheduling attributes from the calling thread or from the attr. To use the scheduling attribute used in attr, call the pthread_attr_setinheritsched function using PTHREAD_EXPLICIT_SCHED:

    pthread_attr_setinheritsched(&attr_fifo, PTHREAD_EXPLICIT_SCHED);

Create the thread by calling the pthread_create function:

    pthread_t thread_fifo;
    pthread_create(&thread_fifo, &attr_fifo, thread_function_fifo, NULL);

The following code helps in achieving the simplest preemptible multi-threading application under FIFO scheduling policy:


    #include <pthread.h>
    #include <sched.h>
    #include <stdio.h>
    #include <string.h>

    /* Body of the real-time thread */
    void *thread_function_fifo(void *data)
    {
        printf("Inside Thread\n");
        return NULL;
    }

    int main(int argc, char* argv[])
    {
        struct sched_param param_fifo;
        pthread_attr_t attr_fifo;
        pthread_t thread_fifo;
        int status = -1;

        memset(&param_fifo, 0, sizeof(param_fifo));

        status = pthread_attr_init(&attr_fifo);
        if (status) {
            printf("pthread_attr_init failed\n");
            return status;
        }

        status = pthread_attr_setschedpolicy(&attr_fifo, SCHED_FIFO);
        if (status) {
            printf("pthread_attr_setschedpolicy failed\n");
            return status;
        }

        param_fifo.sched_priority = 92;
        status = pthread_attr_setschedparam(&attr_fifo, &param_fifo);
        if (status) {
            printf("pthread_attr_setschedparam failed\n");
            return status;
        }

        /* Use the attributes set above instead of inheriting from the caller */
        status = pthread_attr_setinheritsched(&attr_fifo, PTHREAD_EXPLICIT_SCHED);
        if (status) {
            printf("pthread_attr_setinheritsched failed\n");
            return status;
        }

        status = pthread_create(&thread_fifo, &attr_fifo, thread_function_fifo, NULL);
        if (status) {
            printf("pthread_create failed\n");
            return status;
        }

        pthread_join(thread_fifo, NULL);
        return status;
    }

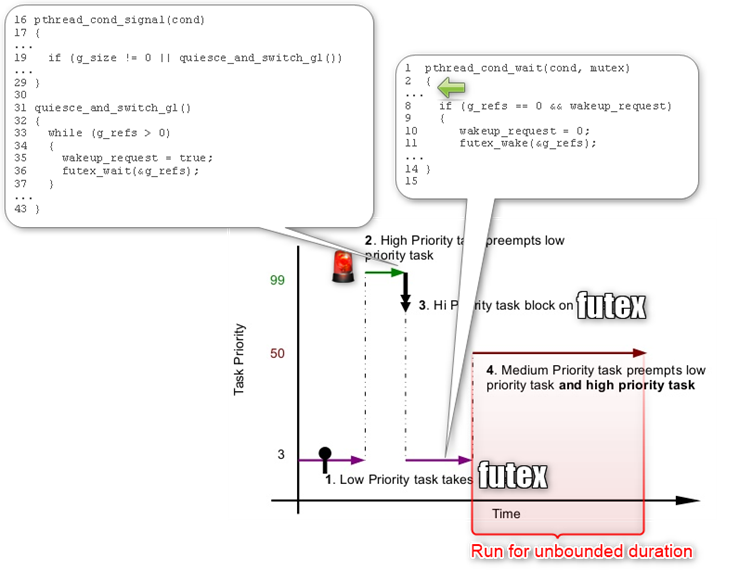

On glibc 2.25 and later, POSIX condition variables (for example, pthread_cond*) do not preserve priority inheritance:

rt_mutex: Cannot be in a state with waiters and no owner.

pthread_cond*: The APIs were re-implemented so that the _wait() and _signal() operations put the calling waiter to sleep and signal threads without using PI-aware futex operations.

Note: Reference - Pthread Condvars: Posix Compliance and the PI gap.

kthread Read-Copy Update (RCU)

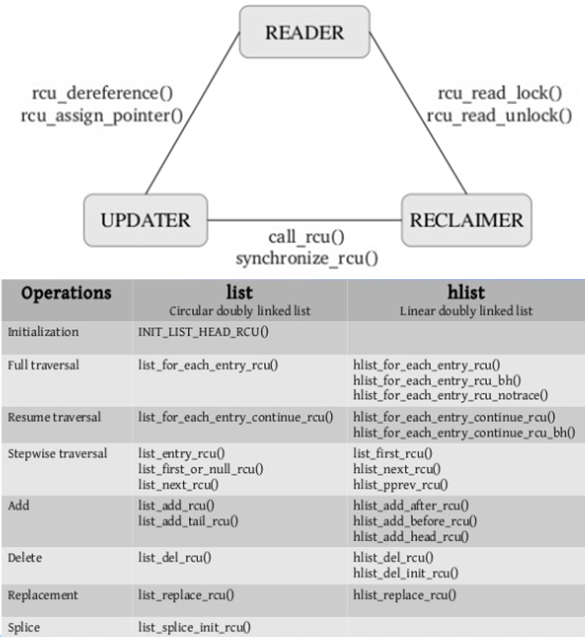

Read-Copy Update (RCU) APIs are used heavily in the Linux code to synchronize kernel threads without locks. How well they fit depends on the access pattern of the data. These APIs are:

Excellent for read-mostly data where staleness and inconsistency are OK.

Good for read-mostly data where consistency is required.

Can be OK for read-write data where consistency is required.

Might not be best for update-mostly consistency-required data.

Able to provide existence guarantees that are useful for scalable updates.

Tuning RCU is part of achieving deterministic, synchronized access to shared data. The following kernel configuration options are relevant:

CONFIG_PREEMPT_RCU: Real-time Preemption and RCU readers manipulate CPU-local counters to limit blocking within RCU read-side critical sections. Refer to Real-Time Preemption and RCU.

CONFIG_RCU_NOCB_CPU: RCU Callback Offloading directed to the CPUs of your choice.

CONFIG_RCU_BOOST: RCU priority boosting raises tasks that block the current grace period for more than half a second to real-time priority level 1.

CONFIG_RCU_KTHREAD_PRIO and CONFIG_RCU_BOOST_DELAY: Provide additional control of RCU priority boosting.

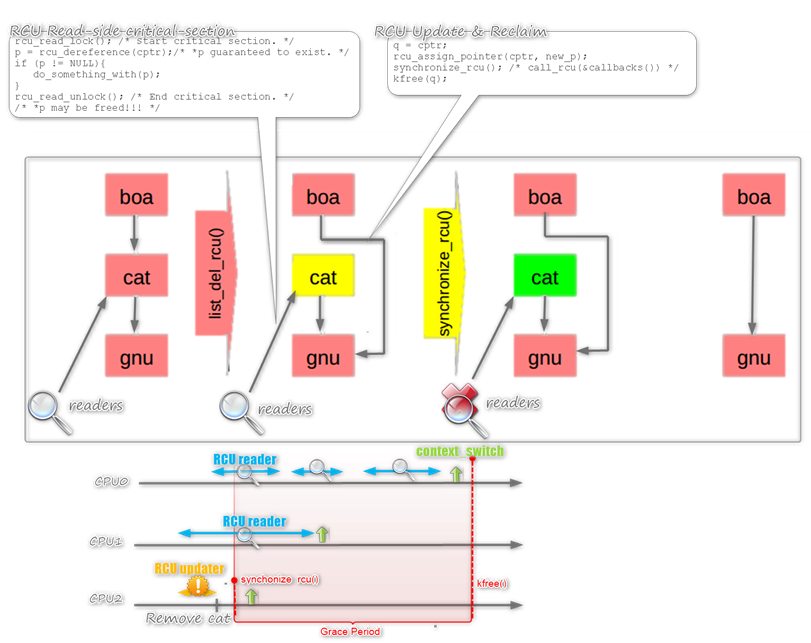

A pointer to an RCU-protected object is guaranteed to exist throughout an RCU read-side critical section, using very lightweight primitives:

All RCU writers must wait for an RCU grace period to elapse between making something inaccessible to readers and freeing it.

    spin_lock(&updater_lock);
    q = cptr;
    rcu_assign_pointer(cptr, new_p);
    spin_unlock(&updater_lock);
    synchronize_rcu(); /* Wait for grace period. */
    kfree(q);

The RCU grace period allows all pre-existing readers to complete their RCU read-side critical sections. The grace period begins after the synchronize_rcu() call and ends after all CPUs execute a context switch.

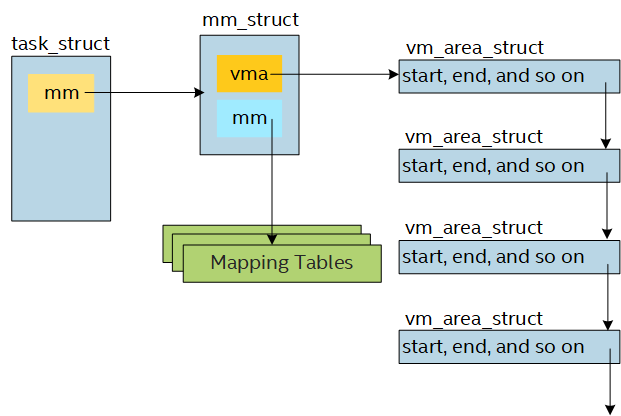

Set POSIX Thread Virtual Memory Allocation (vma)

Linux process memory management is one of the most important and critical aspects of a PREEMPT_RT Linux runtime compared to a standard Linux runtime. From the kernel scheduling point of view, processes and threads make no difference, because each one is represented by a runnable task_struct kernel structure. However, from the scheduling latency standpoint, a process context switch is significantly longer than a user-thread context switch within the same process, because process switching needs to flush the TLB.

There are different algorithms for memory management designed to optimize the runnable processes and improve system performance. For instance, whether a process needs a full memory page or only part of a page from a kernel-allocated mmap() region, memory management works along with the scheduler to utilize resources optimally.

Let us explore three main areas of memory management:

- Memory Locking

Memory locking is an essential part of program initialization. Most real-time processes lock their memory throughout execution. Applications can use the mlock and mlockall functions to lock their memory pages (virtual address space) into main memory, and the munlock and munlockall functions to unlock them:

mlock(void *addr, size_t len) - This function locks a selected region (len bytes starting at addr) of the address space of the calling process into memory.

mlockall(int flags) - This function locks the entire address space of the process. MCL_CURRENT, MCL_FUTURE, and MCL_ONFAULT are the available flags.

munlock(void *addr, size_t len) - This function unlocks a specified region of the process address space.

munlockall(void) - This system call unlocks the entire address space of the process.

Locking memory ensures that the application pages are not evicted from main memory under memory pressure. It also ensures that page faults do not occur during RT critical operations, which is very important.

- Stack Memory

Each of the threads within an application has its own stack. The size of the stack can be specified by using the pthread function pthread_attr_setstacksize().

Syntax of pthread_attr_setstacksize(pthread_attr_t *attr, size_t stacksize):

attr - Thread attribute structure.

stacksize - In bytes, should not be less than PTHREAD_STACK_MIN (16384 bytes). The default stack size on Linux is 2 MB.

If the size of the stack is not set explicitly, then the default stack size is allocated. If the application uses a large number of RT threads, it is advised to use stack size smaller than the default size.

- Dynamic Memory Allocation

Dynamic memory allocation is not recommended for RT threads while execution is in the RT critical path, as it increases the chance of page faults. It is suggested to allocate the required memory before the start of RT execution and lock the memory using the mlock or mlockall functions. In the following example, the thread function dynamically allocates memory for a thread-local variable and accesses data stored at random locations in it, which is the pattern to avoid in the critical path:

    #define BUFFER_SIZE 1048576

    void *thread_function_fifo(void *data)
    {
        double sum = 0.0;
        /* Allocation inside the RT path: may trigger page faults */
        double* tempArray = (double*)calloc(BUFFER_SIZE, sizeof(double));
        size_t randomIndex;
        int i = 50000;

        if (tempArray == NULL)
            return NULL;

        while(i--) {
            randomIndex = rand() % BUFFER_SIZE;
            sum += tempArray[randomIndex];
        }
        free(tempArray);
        return NULL;
    }

Set NoHz (Tickless) Kernel

The Linux kernel used to send the scheduling clock interrupt (ticks) to each CPU at every jiffy to shift CPU attention periodically towards multiple tasks. A jiffy is a very short time period, which is determined by the value of kernel Hz.

Periodic ticks are undesirable in cases such as:

Devices with input power constraints, such as mobile devices. Triggering the clock interrupt can drain the power source very quickly even if the device is idle.

Virtualization. Multiple OS instances might find that half of its CPU time is consumed by unnecessary scheduling clock interrupts.

A tickless kernel inherently reduces the number of scheduling clock interrupt, which helps to improve energy efficiency and reduce Linux runtime scheduling jitter.

For the following three contexts, you might want to configure scheduling-clock interrupts to improve energy efficiency:

- CPU with Heavy Workload

CONFIG_HZ_PERIODIC=y (for older kernels CONFIG_NO_HZ=n): There are situations when a CPU with a heavy workload of numerous tasks, each of which uses the CPU for a very short time, has very frequent idle periods. These idle periods are also short (on the order of tens or hundreds of microseconds). Reducing scheduling-clock ticks will have the reverse effect of increasing the overhead of switching to and from idle and transitioning between user and kernel execution.

- CPU in idle

CONFIG_NO_HZ_IDLE=y: The primary purpose of a scheduling-clock interrupt is to force a busy CPU to shift its attention among multiple tasks. But an idle CPU has no tasks to shift its attention to, therefore sending it a scheduling-clock interrupt is of no use. Instead, configure a tickless kernel to avoid sending scheduling-clock interrupts to idle CPUs, thereby improving the energy efficiency of the system. This mode can be disabled from the boot command line by specifying nohz=off. By default, the kernel boots with nohz=on.

- CPU with Single Task

CONFIG_NO_HZ_FULL=y: If the CPU is assigned only one task, there is no point in sending scheduling-clock interrupts to switch tasks. This Kconfig setting of the Linux kernel avoids sending scheduling-clock interrupts to such CPUs.

Set High Resolution Timers Thread

Timer resolution has been progressively improved by the Linux.org community to offer a more precise way of waking up the system and process data at more accurate intervals:

Initially Unix/Linux systems used timers with a frequency of 100 Hz (that is, 100 timer events per second/one event every 10ms).

In Linux version 2.4, i386 systems started using timers with frequency of 1000 Hz (that is, 1000 timer events per second/one event every 1ms). The 1ms timer event improves minimum latency and interactivity, but at the same time it also incurs higher timer overhead.

In Linux kernel version 2.6, timer frequency was reduced to 250 Hz (that is, 250 timer events per second/one event every 4ms) to reduce timer overhead.

Finally, the Linux kernel streamlined the use of high-resolution timers with nanosecond precision by adding the CONFIG_HIGH_RES_TIMERS=y built-in kernel option.

You can also examine the timer list per CPU core from the /proc/timer_list file:

.resolution has a value of 1 nanosecond, which means the clock supports high resolution.

event_handler is set to hrtimer_interrupt, which means the high resolution timer feature is active.

.hres_active has a value of 1, which means the high resolution timer feature is active.

Note: A resolution of 1 ns is not realistic; it only indicates that the system uses HRTs. The usual resolution of HRTs on modern systems is in the microseconds.

    root@intel-corei7-64:~# cat /proc/timer_list | grep 'cpu:\|resolution\|hres_active\|clock\|event_handler'
    cpu: 0
     clock 0:
      .resolution: 1 nsecs
     #2: <ffffc9000214ba00>, hrtimer_wakeup, S:01, schedule_hrtimeout_range_clock.part.28, rpcbind/507
     #3: <ffffc900026d7d80>, hrtimer_wakeup, S:01, schedule_hrtimeout_range_clock.part.28, cleanupd/585
     #4: <ffffc9000269fd80>, hrtimer_wakeup, S:01, schedule_hrtimeout_range_clock.part.28, smbd-notifyd/584
     #8: <ffffc9000261fd80>, hrtimer_wakeup, S:01, schedule_hrtimeout_range_clock.part.28, smbd/583
     #9: <ffffc9000212bd80>, hrtimer_wakeup, S:01, schedule_hrtimeout_range_clock.part.28, syslog-ng/494
     #10: <ffffc900026dfd80>, hrtimer_wakeup, S:01, schedule_hrtimeout_range_clock.part.28, lpqd/587
     clock 1:
      .resolution: 1 nsecs
     clock 2:
      .resolution: 1 nsecs
     clock 3:
      .resolution: 1 nsecs
      .get_time: ktime_get_clocktai
      .hres_active : 1
    cpu: 2
     clock 0:
      .resolution: 1 nsecs
     #2: <ffffc90002313a00>, hrtimer_wakeup, S:01, schedule_hrtimeout_range_clock.part.28, thermald/548
     #3: <ffffc900023fb8c0>, hrtimer_wakeup, S:01, schedule_hrtimeout_range_clock.part.28, wpa_supplicant/562
     clock 1:
      .resolution: 1 nsecs
     clock 2:
      .resolution: 1 nsecs
     clock 3:
      .resolution: 1 nsecs
      .get_time: ktime_get_clocktai
      .hres_active : 1
    event_handler: tick_handle_oneshot_broadcast
    event_handler: hrtimer_interrupt
    event_handler: hrtimer_interrupt

pthread_…() POSIX Linux Isochronous Scheduling

An isochronous application will be repeated after a fixed period of time:

The execution time of this application should always be less than its period.

An isochronous application should always be a real-time thread to measure performance.

The following steps break down the procedure to develop a simple isoch-rt-thread sanity check:

Define a structure that will have the time period information along with the current time of the clock. This structure will be used to pass data between multiple tasks:

    /* Data format to be passed between tasks */
    struct time_period_info {
        struct timespec next_period;
        long period_ns;
    };

Define the time period of the cyclic thread as 1ms and get the current time of the system:

    /* Initialize the periodic task with 1ms time period */
    static void initialize_periodic_task(struct time_period_info *tinfo)
    {
        /* keep time period for 1ms */
        tinfo->period_ns = 1000000;
        clock_gettime(CLOCK_MONOTONIC, &(tinfo->next_period));
    }

Use the timer increment function to advance the next wake-up time by one period, so that nanosleep can complete the time period of the real-time thread:

    /* Increment the timer until the time period elapses and the Real time task will execute */
    static void inc_period(struct time_period_info *tinfo)
    {
        tinfo->next_period.tv_nsec += tinfo->period_ns;
        /* Normalize: carry whole seconds out of the nanosecond field */
        while (tinfo->next_period.tv_nsec >= 1000000000) {
            tinfo->next_period.tv_sec++;
            tinfo->next_period.tv_nsec -= 1000000000;
        }
    }

Wait for the time period to complete. It is assumed that the thread execution time is shorter than the time period:

    /* Assumption: Real time task requires less time to complete task as compared to period length, so wait till period completes */
    static void wait_for_period_complete(struct time_period_info *tinfo)
    {
        inc_period(tinfo);
        /* Ignore possibilities of signal wakes */
        clock_nanosleep(CLOCK_MONOTONIC, TIMER_ABSTIME, &tinfo->next_period, NULL);
    }

Define a real-time task. For simplicity, a print statement is included:

    static void *real_time_task()
    {
        printf("Real-Time Task executing\n");
        return NULL;
    }

Initialize and trigger the cyclic execution of the real-time thread. Each cycle waits for the time period to complete. This thread will be created from the main thread as a POSIX thread.

    void *realtime_isochronous_task(void *data)
    {
        struct time_period_info tpinfo;

        initialize_periodic_task(&tpinfo);
        while (1) {
            real_time_task();
            wait_for_period_complete(&tpinfo);
        }
        return NULL;
    }

Note: A non-realtime main thread will spawn a real-time isochronous application thread here. Also, it sets the preemptive scheduling priority and policy.

Create a POSIX main thread to create and initialize all threads with the attributes:

    int main(int argc, char *argv[])
    {
        struct sched_param param_fifo;
        pthread_attr_t attr_fifo;
        pthread_t thread_fifo;
        int status = -1;

        memset(&param_fifo, 0, sizeof(param_fifo));
        status = pthread_attr_init(&attr_fifo);
        if (status) {
            printf("pthread_attr_init failed\n");
            return status;
        }

Next, set the real-time thread with FIFO scheduling policy:

        status = pthread_attr_setschedpolicy(&attr_fifo, SCHED_FIFO);
        if (status) {
            printf("pthread_attr_setschedpolicy failed\n");
            return status;
        }

The real-time task priority is set as 92. The priority can be between 1 and 99:

        param_fifo.sched_priority = 92;
        status = pthread_attr_setschedparam(&attr_fifo, &param_fifo);
        if (status) {
            printf("pthread_attr_setschedparam failed\n");
            return status;
        }

Set the inherit-scheduler attribute of the thread attributes object. The inherit-scheduler attribute determines whether a new thread takes its scheduling attributes from the calling thread or from attr:

        status = pthread_attr_setinheritsched(&attr_fifo, PTHREAD_EXPLICIT_SCHED);
        if (status) {
            printf("pthread_attr_setinheritsched failed\n");
            return status;
        }

The following code creates a real-time isochronous application thread:

        status = pthread_create(&thread_fifo, &attr_fifo, realtime_isochronous_task, NULL);
        if (status) {
            printf("pthread_create failed\n");
            return status;
        }

Wait for real-time task completion:

        pthread_join(thread_fifo, NULL);
        return status;
    }
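The steps above hard-code priority 92 and print a generic message when `pthread_create()` fails. As a minimal sketch, the helpers below query the valid priority range from the kernel instead of assuming 1 to 99, and translate the error numbers that `pthread_create()` is documented to return into hints. Both function names are hypothetical, and the hint texts are illustrative wording rather than messages from any library.

```c
#include <errno.h>
#include <sched.h>

/* Clamp a requested priority into the range that the kernel reports for
 * the given policy. Returns -1 if the range cannot be queried. */
static int clamp_rt_priority(int policy, int requested)
{
    int lo = sched_get_priority_min(policy);
    int hi = sched_get_priority_max(policy);

    if (lo == -1 || hi == -1)
        return -1;
    if (requested < lo)
        return lo;
    if (requested > hi)
        return hi;
    return requested;
}

/* Map a pthread_create() return value to a short troubleshooting hint. */
static const char *create_error_hint(int status)
{
    switch (status) {
    case 0:
        return "ok";
    case EPERM:
        return "no permission to set the requested scheduling policy or priority";
    case EINVAL:
        return "invalid settings in the thread attributes";
    case EAGAIN:
        return "insufficient resources to create another thread";
    default:
        return "unexpected error";
    }
}
```

For example, `param_fifo.sched_priority = clamp_rt_priority(SCHED_FIFO, 92);` keeps the requested value on Linux while staying within the reported range. An `EPERM` result typically means the process lacks the privilege to use a real-time policy.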

Here is the complete code:


    /* Header files */
    #include <pthread.h>
    #include <sched.h>
    #include <stdio.h>
    #include <string.h>
    #include <time.h>

    /* Data format to be passed between tasks */
    struct time_period_info {
        struct timespec next_period;
        long period_ns;
    };

    /* Initialize the periodic task with a 1 ms time period */
    static void initialize_periodic_task(struct time_period_info *tinfo)
    {
        /* Keep the time period at 1 ms */
        tinfo->period_ns = 1000000;
        clock_gettime(CLOCK_MONOTONIC, &(tinfo->next_period));
    }

    /* Advance the wake-up time by one period */
    static void inc_period(struct time_period_info *tinfo)
    {
        tinfo->next_period.tv_nsec += tinfo->period_ns;
        while (tinfo->next_period.tv_nsec >= 1000000000) {
            tinfo->next_period.tv_sec++;
            tinfo->next_period.tv_nsec -= 1000000000;
        }
    }

    /* The real-time task needs less time than the period length, so wait until the period completes */
    static void wait_for_period_complete(struct time_period_info *tinfo)
    {
        inc_period(tinfo);
        clock_nanosleep(CLOCK_MONOTONIC, TIMER_ABSTIME, &tinfo->next_period, NULL);
    }

    /* Real-time task */
    static void *real_time_task(void)
    {
        printf("Real-Time Task executing\n");
        return NULL;
    }

    /* Main module for an isochronous application task with real-time priority and SCHED_FIFO scheduling */
    void *realtime_isochronous_task(void *data)
    {
        struct time_period_info tinfo;

        initialize_periodic_task(&tinfo);
        while (1) {
            real_time_task();
            wait_for_period_complete(&tinfo);
        }
        return NULL;
    }

    /* Non-real-time master thread that spawns a real-time isochronous application thread */
    int main(int argc, char *argv[])
    {
        struct sched_param param_fifo;
        pthread_attr_t attr_fifo;
        pthread_t thread_fifo;
        int status = -1;

        memset(&param_fifo, 0, sizeof(param_fifo));
        status = pthread_attr_init(&attr_fifo);
        if (status) {
            printf("pthread_attr_init failed\n");
            return status;
        }
        status = pthread_attr_setschedpolicy(&attr_fifo, SCHED_FIFO);
        if (status) {
            printf("pthread_attr_setschedpolicy failed\n");
            return status;
        }
        param_fifo.sched_priority = 92;
        status = pthread_attr_setschedparam(&attr_fifo, &param_fifo);
        if (status) {
            printf("pthread_attr_setschedparam failed\n");
            return status;
        }
        status = pthread_attr_setinheritsched(&attr_fifo, PTHREAD_EXPLICIT_SCHED);
        if (status) {
            printf("pthread_attr_setinheritsched failed\n");
            return status;
        }
        status = pthread_create(&thread_fifo, &attr_fifo, realtime_isochronous_task, NULL);
        if (status) {
            printf("pthread_create failed\n");
            return status;
        }
        pthread_join(thread_fifo, NULL);
        return status;
    }

Set Thread temporal-isolation via Kernel Boot Parameters¶

Assume the following best known configuration to implement CPU core Temporal-isolation:

cpu1 (Critical Core): Will run real-time applications

cpu0: Will run everything else

The following table lists the kernel command line options that act on thread/process cores affinity at boot-time:

| Command Line | Parameter | Isolation |
|---|---|---|
| `isolcpus` | List of critical cores | The kernel scheduler will not migrate tasks from other cores to the critical cores |
| `irqaffinity` | List of non-critical cores | Protects the critical cores from IRQs |
| `rcu_nocbs` | List of critical cores | Stops RCU callbacks from getting called |
| `nohz_full` | List of critical cores | If the core is idle or has a single running task, it will not get scheduling clock ticks. Use together with `rcu_nocbs`. |

In this example, the resulting parameters will look like:

isolcpus=1 irqaffinity=0 rcu_nocbs=2 nohz=off nohz_full=2

Edit the systemd-bootx64.efi OS loader entry file to add custom boot parameters:

root@intel-corei7-64:~# vi /boot/EFI/loader/entries/boot.conf

title boot

linux /vmlinuz

initrd /initrd

options LABEL=boot isolcpus=1 irqaffinity=0 rcu_nocbs=2 nohz=off nohz_full=2 i915.enable_rc6=0 i915.enable_dc=0 i915.disable_power_well=0 i915.enable_execlists=0 i915.powersave=0 processor.max_cstate=0 intel.max_cstate=0 processor_idle.max_cstate=0 intel_idle.max_cstate=0 clocksource=tsc tsc=reliable nmi_watchdog=0 nosoftlockup intel_pstate=disable noht nosmap mce=ignore_mce nohalt acpi_irq_nobalance noirqbalance vt.handoff=7
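After rebooting with these parameters, an application can confirm at runtime which cores the kernel actually isolated. The sketch below parses a kernel CPU list such as `1` or `0-2,4` and reads the list from `/sys/devices/system/cpu/isolated`, assuming the running kernel exports that sysfs file. The function names are hypothetical.

```c
#include <stdio.h>
#include <stdlib.h>

/* Parse a kernel CPU list such as "1" or "0-2,4" into cpus[].
 * Returns the number of CPUs stored, or -1 on malformed input or
 * when the list does not fit into max_cpus entries. */
static int parse_cpu_list(const char *list, int *cpus, int max_cpus)
{
    const char *p = list;
    int count = 0;

    while (*p != '\0' && *p != '\n') {
        char *end;
        long first = strtol(p, &end, 10);
        long last = first;

        if (end == p || first < 0)
            return -1;
        p = end;
        if (*p == '-') {                    /* range "first-last" */
            last = strtol(p + 1, &end, 10);
            if (end == p + 1 || last < first)
                return -1;
            p = end;
        }
        for (long c = first; c <= last; c++) {
            if (count >= max_cpus)
                return -1;
            cpus[count++] = (int)c;
        }
        if (*p == ',')
            p++;
        else if (*p != '\0' && *p != '\n')
            return -1;
    }
    return count;
}

/* Read the isolated CPU list exported by the kernel.
 * Returns the number of isolated CPUs (0 if none), or -1 on error. */
static int read_isolated_cpus(int *cpus, int max_cpus)
{
    char buf[256] = "";
    FILE *f = fopen("/sys/devices/system/cpu/isolated", "r");

    if (f == NULL)
        return -1;
    if (fgets(buf, sizeof(buf), f) == NULL)
        buf[0] = '\0';                      /* empty file: nothing isolated */
    fclose(f);
    return parse_cpu_list(buf, cpus, max_cpus);
}
```

With `isolcpus=1` in effect, `read_isolated_cpus()` would be expected to return one entry containing CPU 1. An empty result suggests that the boot entry was not picked up.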

Xenomai Cobalt Preemptive and Priority Scheduling Linux OS Runtime¶

The Xenomai* project is an open-source RTOS-to-Linux Portability Framework under Creative Commons BY-SA 3.0 and GPLv2 Licenses, which comes in two flavors:

As a co-kernel/real-time extension (RTE) for patched Linux (codenamed Cobalt).

As libraries for native Linux, including PREEMPT_RT (codenamed Mercury). In the 3.0 branch, Xenomai aims at working both as a co-kernel and on top of PREEMPT_RT.

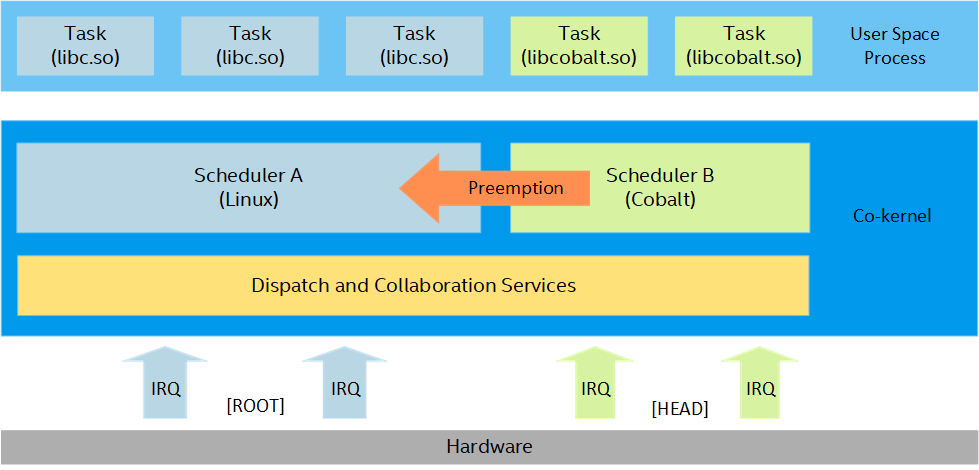

The Xenomai project merges a real-time core (named the Cobalt core) into the Linux kernel, where the two co-exist in kernel space. The interrupts and threads in the Cobalt core have higher priority than the interrupts and threads in the Linux kernel. Because the Cobalt core executes fewer instructions than the Linux kernel, unnecessary delay and historical burden in the calling path can be reduced.

Using this real-time targeted design, a Xenomai-patched Linux kernel can achieve a good real-time multi-threading performance.

Set Two-stage Interrupt Pipeline - [Head] and [Root] Stages¶

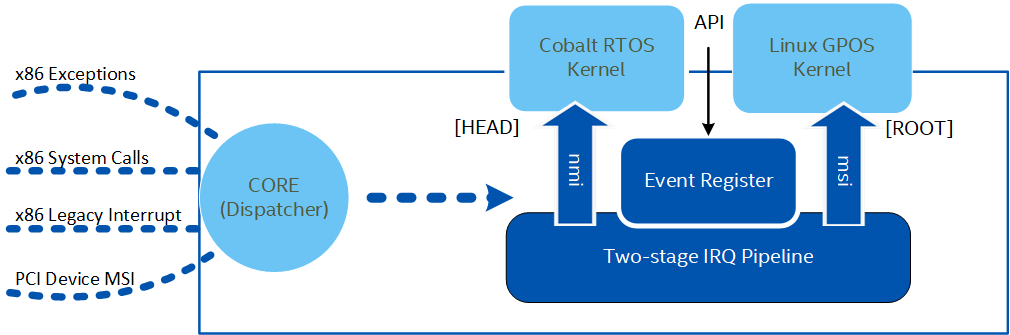

The two-stage interrupt pipeline is the underlying mechanism enabling the Xenomai real-time framework.

The Xenomai-patched Linux kernel takes control of the hardware interrupts, which originally belong to the Linux kernel. Xenomai first handles the interrupts it is interested in, and then routes the other interrupts to the Linux kernel.

The former is named the head stage and the latter is named the root stage:

The [Head] stage corresponds to the Cobalt core (real-time domain or out-of-band context).

The [Root] stage corresponds to the Linux kernel (non real-time domain or in-band context).

The [Head] stage has higher priority over the [Root] stage, and offers the shortest response time by squeezing both the hardware and software.

The Xenomai patches that implement these mechanics are named Dovetail patches; the patches are hosted at: https://source.denx.de/Xenomai/linux-dovetail/.

Set POSIX Thread Context Migration between [Head] and [Root] Stages¶

On Linux, the taskset command allows you to change the CPU affinity of a process. It is typically used in conjunction with the CPU isolation determined by the kernel command line. The following example script demonstrates changing the real-time process affinity to core 1:

#!/bin/bash

cpu="1"

cycle="250"

time_total="345600"

taskset -c $cpu /usr/bin/latency -g ./latency.histo.log -s -p $cycle -c $cpu -P 99 -T $time_total 2>&1 | tee ./latency.log
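The same affinity change can be made from inside the application instead of through `taskset`. The following is a minimal sketch using the glibc `sched_setaffinity()` and `sched_getaffinity()` wrappers. The helper names `pin_to_cpu` and `first_allowed_cpu` are hypothetical, and passing `0` as the PID is assumed to target the calling thread, as described in the Linux manual pages.

```c
#ifndef _GNU_SOURCE
#define _GNU_SOURCE   /* cpu_set_t, CPU_* macros, sched_setaffinity() */
#endif
#include <errno.h>
#include <sched.h>

/* Return the lowest-numbered CPU the calling thread may run on, or -1. */
static int first_allowed_cpu(void)
{
    cpu_set_t set;

    if (sched_getaffinity(0, sizeof(set), &set) != 0)
        return -1;
    for (int cpu = 0; cpu < CPU_SETSIZE; cpu++) {
        if (CPU_ISSET(cpu, &set))
            return cpu;
    }
    return -1;
}

/* Pin the calling thread to a single CPU.
 * Returns 0 on success, or -1 with errno set on failure. */
static int pin_to_cpu(int cpu)
{
    cpu_set_t set;

    if (cpu < 0 || cpu >= CPU_SETSIZE) {
        errno = EINVAL;
        return -1;
    }
    CPU_ZERO(&set);
    CPU_SET(cpu, &set);
    return sched_setaffinity(0, sizeof(set), &set);
}
```

On a system booted with CPU 1 isolated, calling `pin_to_cpu(1)` at the start of the real-time thread has an effect comparable to launching the process with `taskset -c 1`.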

To verify that workloads are running isolated on the intended CPUs, monitor the state of the currently running tasks. One utility that enables monitoring of task CPU affinity is htop.

Cobalt core threads are not entirely isolated from Linux kernel threads. Instead, the Cobalt core reuses the ordinary kthread and adds special capabilities: a kthread can jump between Cobalt’s real-time context (out-of-band context) and the common Linux kernel context (in-band context). The advantage is that, in in-band context, the thread can use the Linux kernel’s infrastructure. In a typical scenario, a Cobalt thread starts up as a normal kthread, calls the Linux kernel’s API for preparation work, and then switches to out-of-band context and behaves as a Cobalt thread to perform real-time work. The disadvantage is that, in out-of-band context, the Cobalt thread is easily migrated to in-band context by a mistakenly called Linux kernel API. Such a migration is difficult to discover: developers mistakenly assume that their thread is running under the Cobalt core, and do not notice the issue until they check the ftrace output or the task exceeds its deadline.

A Xenomai/Cobalt POSIX-based userspace application can shadow the same thread between Preemptive and Common Time-Sharing (SCHED_OTHER) scheduling policy:

Secondary mode: Where Linux GPOS services and Linux [ROOT] domain device drivers are accessible (that is, `ps -x` or `top` Linux commands can be used).

Primary mode: Where all Xenomai RTOS services and RTDM [HEAD] domain device drivers are accessible.

# root@intel-corei7-64:~# cat /proc/xenomai/sched/stat

CPU PID MSW CSW XSC PF STAT %CPU NAME

0 0 0 5321688352 0 0 00018000 96.8 [ROOT/0]

1 0 0 1067292 0 0 00018000 100.0 [ROOT/1]

1 852 1 1 5 0 000680c0 0.0 latency

1 854 532167 1064334 532171 0 00068042 0.0 display-852

0 855 2 5321669632 5322202288 0 0004c042 2.4 sampling-852

1 0 0 13288313 0 0 00000000 0.0 [IRQ4355: [timer]]
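An unexpected migration from primary to secondary mode can be spotted by watching the MSW column of this file grow for a thread that should stay in the real-time domain. The sketch below parses one row of the listing. It assumes the column layout shown above and that MSW, CSW, XSC, and PF count mode switches, context switches, Xenomai system calls, and page faults respectively. The struct and function names are hypothetical.

```c
#include <stdio.h>

/* One row of /proc/xenomai/sched/stat, in the column order shown above. */
struct cobalt_stat {
    int cpu;
    int pid;
    unsigned long long msw;   /* assumed: primary/secondary mode switches */
    unsigned long long csw;   /* assumed: context switches */
    unsigned long long xsc;   /* assumed: Xenomai system calls */
    unsigned long long pf;    /* assumed: page faults */
    unsigned long status;     /* thread status bits (hexadecimal) */
    double cpu_pct;
    char name[64];
};

/* Parse one line. Returns 1 for a thread row and 0 for anything else,
 * such as the header line or an empty line. */
static int parse_cobalt_stat_line(const char *line, struct cobalt_stat *st)
{
    int n = sscanf(line, "%d %d %llu %llu %llu %llu %lx %lf %63[^\n]",
                   &st->cpu, &st->pid, &st->msw, &st->csw, &st->xsc,
                   &st->pf, &st->status, &st->cpu_pct, st->name);

    return n == 9;
}
```

Sampling the file twice and comparing `msw` for the same PID shows whether a thread left the real-time domain in between. A real-time thread whose MSW value keeps increasing during its cyclic phase is a candidate for closer inspection with ftrace.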

pthread POSIX Skin Runtime Scheduling APIs¶

When an application is linked with libcobalt.so, Linux pthread_create() and pthread_setschedparam() calls that select the standard (S) POSIX SCHED_FIFO or SCHED_RR scheduling policy are trampolined to a Xenomai/Cobalt task by a mechanism called shadowing.

On top of those, Xenomai/Cobalt provides supplementary scheduling policies (X):

`SCHED_TP` implements the temporal partitioning scheduling policy for groups of threads (a group can be one or more threads).

`SCHED_SPORADIC` implements a task server scheduler used to run sporadic activities with a quota, to avoid perturbing periodic tasks (under `SCHED_RR` or `SCHED_FIFO`).

`SCHED_QUOTA` implements a budget-based scheduling policy. The group of threads is suspended once the budget is exceeded. The budget is refilled every quota interval.

| Scheduling Policies | Linux Vanilla | Linux Vanilla with PREEMPT_RT | Linux Vanilla with Xenomai/COBALT |
|---|---|---|---|
| SCHED_OTHER, SCHED_BATCH, SCHED_IDLE | S | S | S |
| SCHED_FIFO, SCHED_RR | N | N | N |
| SCHED_FIFO | | P | |
| SCHED_TP, SCHED_SPORADIC, and SCHED_QUOTA | | | X |

_Legend:_

S = Standard scheduling policies

N = CONFIG_PREEMPT supplementary scheduling policies

P = CONFIG_PREEMPT_RT supplementary scheduling policies

X = CONFIG_XENOMAI supplementary scheduling policies

Set High Resolution Timers Thread in Xenomai¶

Xenomai/Cobalt High Resolution Timers (CONFIG_XENO_OPT_TIMER_RBTREE=y) allow the available x86 hardware timers to generate time-interval-based, high-priority [HEAD] interrupts:

`[host-timer/x]` and `[watchdog]` multiplexed into a number of software programmable timers exposed to the Cobalt core

`timerfd_handler` POSIX timers API call from userspace

`clock_nanosleep()` thread accurate wakeup by high-resolution timer hw-offload

The Linux userspace filesystem interface allows you to report Cobalt timer information:

# cat /proc/xenomai/timer/coreclk

CPU SCHED/SHOT TIMEOUT INTERVAL NAME

0 79845094/32427139 419us - [host-timer/0]

0 673759/673758 164ms579us 1s [watchdog]

1 41484498/15201506 419us - [host-timer/1]

1 673759/673758 164ms583us 1s [watchdog]

0 2440455925/2440400928 94us 100us timerfd_handler
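From an application, the timer granularity that `clock_nanosleep()` can rely on may be checked with the standard POSIX `clock_getres()` call. The sketch below is a plain POSIX check, not a Xenomai-specific API; how the call is serviced when the program is linked against libcobalt is not verified here. The helper names and the 1 microsecond threshold are assumptions chosen for illustration.

```c
#ifndef _POSIX_C_SOURCE
#define _POSIX_C_SOURCE 200809L   /* clock_getres() */
#endif
#include <time.h>

/* Return the resolution of CLOCK_MONOTONIC in nanoseconds, or -1 on error. */
static long long monotonic_resolution_ns(void)
{
    struct timespec res;

    if (clock_getres(CLOCK_MONOTONIC, &res) != 0)
        return -1;
    return (long long)res.tv_sec * 1000000000LL + res.tv_nsec;
}

/* Return 1 when the reported resolution is 1 microsecond or finer.
 * The threshold is an illustrative assumption, not a documented limit. */
static int has_high_resolution_timers(void)
{
    long long ns = monotonic_resolution_ns();

    return ns > 0 && ns <= 1000;
}
```

A resolution in the millisecond range would suggest that sleeps are driven by the periodic tick, which makes a 100us interval like the `timerfd_handler` entry above unachievable.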

Further Reading Reference¶

This document does not cover all technical details of the Xenomai framework. Refer to the official documentation for further reading:

RT-Scheduling Sanity Checks¶

The following section is applicable to:

For the following tests, the admin user must log in as the root user.

Sanity Check 1: Monitor Thread CPU Core Affinity¶

Run the `ps` command to report the tree of all the processes executing on the computer, listed together with their process ID, scheduling policy, real-time priority, and command line:

root@intel-corei7-64:~# ps f -g 0 -o pid,policy,rtprio,cmd PID POL RTPRIO CMD 2 TS - [kthreadd] 3 TS - \_ [ksoftirqd/0] 4 FF 1 \_ [ktimersoftd/0] 6 TS - \_ [kworker/0:0H] 8 FF 1 \_ [rcu_preempt] 9 FF 1 \_ [rcu_sched] 10 FF 1 \_ [rcub/0] 11 FF 1 \_ [rcuc/0] 12 TS - \_ [kswork] 13 FF 99 \_ [posixcputmr/0] 14 FF 99 \_ [migration/0] 15 TS - \_ [cpuhp/0] 16 TS - \_ [cpuhp/2] 17 FF 99 \_ [migration/2] 18 FF 1 \_ [rcuc/2] 19 FF 1 \_ [ktimersoftd/2] 20 TS - \_ [ksoftirqd/2] 22 TS - \_ [kworker/2:0H] 23 FF 99 \_ [posixcputmr/2] 24 TS - \_ [kdevtmpfs] 25 TS - \_ [netns] 27 TS - \_ [oom_reaper] 28 TS - \_ [writeback] 29 TS - \_ [kcompactd0] 30 TS - \_ [crypto] 31 TS - \_ [bioset] 32 TS - \_ [kblockd] 33 FF 50 \_ [irq/9-acpi] 34 TS - \_ [md] 35 TS - \_ [watchdogd] 36 TS - \_ [rpciod] 37 TS - \_ [xprtiod] 39 TS - \_ [kswapd0] 40 TS - \_ [vmstat] 41 TS - \_ [nfsiod] 63 TS - \_ [kthrotld] 66 TS - \_ [bioset] 67 TS - \_ [bioset] 68 TS - \_ [bioset] 69 TS - \_ [bioset] 70 TS - \_ [bioset] 71 TS - \_ [bioset] 72 TS - \_ [bioset] 73 TS - \_ [bioset] 74 TS - \_ [bioset] 75 TS - \_ [bioset] 76 TS - \_ [bioset] 77 TS - \_ [bioset] 78 TS - \_ [bioset] 79 TS - \_ [bioset] 80 TS - \_ [bioset] 81 TS - \_ [bioset] 83 TS - \_ [bioset] 84 TS - \_ [bioset] 85 TS - \_ [bioset] 86 TS - \_ [bioset] 87 TS - \_ [bioset] 88 TS - \_ [bioset] 89 TS - \_ [bioset] 90 TS - \_ [bioset] 92 FF 50 \_ [irq/27-idma64.0] 93 FF 50 \_ [irq/27-i2c_desi] 94 FF 50 \_ [irq/28-idma64.1] 95 FF 50 \_ [irq/28-i2c_desi] 96 FF 50 \_ [irq/29-idma64.2] 98 FF 50 \_ [irq/29-i2c_desi] 100 FF 50 \_ [irq/30-idma64.3] 101 FF 50 \_ [irq/30-i2c_desi] 102 FF 50 \_ [irq/31-idma64.4] 103 FF 50 \_ [irq/31-i2c_desi] 104 FF 50 \_ [irq/32-idma64.5] 106 FF 50 \_ [irq/32-i2c_desi] 107 FF 50 \_ [irq/33-idma64.6] 108 FF 50 \_ [irq/33-i2c_desi] 109 FF 50 \_ [irq/34-idma64.7] 110 FF 50 \_ [irq/34-i2c_desi] 111 FF 50 \_ [irq/4-idma64.8] 112 FF 50 \_ [irq/5-idma64.9] 113 FF 50 \_ [irq/35-idma64.1] 115 FF 50 \_ [irq/37-idma64.1] 117 TS - \_ [nvme] 
118 FF 50 \_ [irq/365-xhci_hc] 121 TS - \_ [scsi_eh_0] 122 TS - \_ [scsi_tmf_0] 123 TS - \_ [usb-storage] 124 TS - \_ [dm_bufio_cache] 125 FF 50 \_ [irq/39-mmc0] 126 FF 49 \_ [irq/39-s-mmc0] 127 FF 50 \_ [irq/42-mmc1] 128 FF 49 \_ [irq/42-s-mmc1] 129 TS - \_ [ipv6_addrconf] 144 TS - \_ [bioset] 175 TS - \_ [bioset] 177 TS - \_ [mmcqd/0] 182 TS - \_ [bioset] 187 TS - \_ [mmcqd/0boot0] 189 TS - \_ [bioset] 191 TS - \_ [mmcqd/0boot1] 193 TS - \_ [bioset] 195 TS - \_ [mmcqd/0rpmb] 332 TS - \_ [kworker/2:1H] 342 TS - \_ [kworker/0:1H] 407 TS - \_ [jbd2/mmcblk0p2-] 409 TS - \_ [ext4-rsv-conver] 429 TS - \_ [bioset] 463 TS - \_ [loop0] 466 TS - \_ [jbd2/loop0-8] 467 TS - \_ [ext4-rsv-conver] 558 FF 50 \_ [irq/366-mei_me] 559 FF 50 \_ [irq/8-rtc0] 560 FF 50 \_ [irq/35-pxa2xx-s] 561 TS - \_ [spi1] 563 FF 50 \_ [irq/37-pxa2xx-s] 564 TS - \_ [spi3] 572 FF 50 \_ [irq/369-i915] 798 FF 1 \_ [i915/signal:0] 800 FF 1 \_ [i915/signal:1] 801 FF 1 \_ [i915/signal:2] 802 FF 1 \_ [i915/signal:4] 832 FF 50 \_ [irq/367-enp2s0] 835 FF 50 \_ [irq/368-enp3s0] 844 FF 50 \_ [irq/4-serial] 846 FF 50 \_ [irq/5-serial] 4167 TS - \_ [kworker/0:1] 4194 TS - \_ [kworker/u8:1] 4234 TS - \_ [kworker/2:0] 4242 TS - \_ [kworker/0:0] 4288 TS - \_ [kworker/u8:0] 4313 TS - \_ [kworker/2:1] 4318 TS - \_ [kworker/0:2] 1 TS - /sbin/init initrd=\initrd LABEL=boot processor.max_cstate=0 intel_idle.max_cstate=0 clocksource=tsc tsc=reliable nmi_watchdog=0 nosoftlockup intel_pstate=disable i915.disable_power_well=0 i915.enable_rc6=0 noht 3 snd_hda_intel.power_save=1 snd_hda_intel.power_save_controller=y scsi_mod.scan=async console=ttyS2,115200 rootwait console=ttyS0,115200 console=tty0 491 TS - /lib/systemd/systemd-journald 525 TS - /lib/systemd/systemd-udevd 551 TS - /usr/sbin/syslog-ng -F -p /var/run/syslogd.pid 571 TS - /usr/sbin/jhid -d 625 TS - /usr/sbin/connmand -n 629 TS - /lib/systemd/systemd-logind 631 TS - /usr/sbin/thermald --no-daemon --dbus-enable 658 TS - /usr/sbin/ofonod -n 712 TS - /usr/sbin/acpid 
828 TS - /sbin/agetty --noclear tty1 linux 829 TS - /sbin/agetty -8 -L ttyS0 115200 xterm 830 TS - /sbin/agetty -8 -L ttyS1 115200 xterm 834 TS - /usr/sbin/wpa_supplicant -u 836 TS - /usr/sbin/nmbd 849 TS - /usr/sbin/smbd 850 TS - \_ /usr/sbin/smbd 851 TS - \_ /usr/sbin/smbd 853 TS - \_ /usr/sbin/smbd 855 TS - /usr/sbin/dropbear -i -r /etc/dropbear/dropbear_rsa_host_key -B 856 TS - \_ -sh 3804 TS - /usr/sbin/dropbear -i -r /etc/dropbear/dropbear_rsa_host_key -B 3805 TS - \_ -sh 4394 TS - \_ ps f -g 0 -o pid,policy,rtprio,cmd 4393 TS - /sbin/agetty -8 -L ttyS2 115200 xterm

Expected outcomes:

Processes between brackets belong to the kernel.

Processes with the regular best-effort CFS scheduling policy (timesharing, `TS`).

Processes with a real-time policy (FIFO, `FF`, and so on).
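A thread can also report its own policy using the same short labels that `ps` prints in the POL column. The sketch below maps the value returned by `sched_getscheduler()` to those labels, following the class names listed in the `ps` manual page. The function names are hypothetical, and `SCHED_BATCH` and `SCHED_IDLE` are only handled when the C library headers expose them.

```c
#ifndef _GNU_SOURCE
#define _GNU_SOURCE   /* exposes SCHED_BATCH and SCHED_IDLE on glibc */
#endif
#include <sched.h>

/* Map a scheduling policy constant to the label used by ps. */
static const char *ps_policy_label(int policy)
{
    switch (policy) {
    case SCHED_OTHER:
        return "TS";
    case SCHED_FIFO:
        return "FF";
    case SCHED_RR:
        return "RR";
#ifdef SCHED_BATCH
    case SCHED_BATCH:
        return "B";
#endif
#ifdef SCHED_IDLE
    case SCHED_IDLE:
        return "IDL";
#endif
    default:
        return "?";
    }
}

/* Return the ps-style label for the calling thread's current policy. */
static const char *current_policy_label(void)
{
    int policy = sched_getscheduler(0);

    if (policy < 0)
        return "?";
#ifdef SCHED_RESET_ON_FORK
    policy &= ~SCHED_RESET_ON_FORK;   /* strip the optional flag bit */
#endif
    return ps_policy_label(policy);
}
```

Logging `current_policy_label()` at the start of the real-time thread gives a quick cross-check against the `ps` listing: a thread created with the attributes from the earlier example would be expected to report `FF`.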

Run `htop`, an interactive system monitor, process viewer, and process manager:

$ htop

Expected outcomes:

The report shows a continuously updated listing of the processes running on a computer, normally ordered by the amount of CPU usage.

Color coding provides visual information about processor, swap, and memory status.

You can add additional columns to the output of `htop`. To configure `htop` to filter tasks by CPU affinity, do the following:

Press the F2 key to open the menu system.

Press the ↓ arrow key until Columns is selected from the Setup category.

Press the → arrow key until the selector moves to the Available Columns section.

Press the ↓ arrow key until PROCESSOR is selected from the Available Columns section.

Press the Enter key to add PROCESSOR to the Active Columns section.

Press the F10 key to complete the addition.

Press the F6 key to open the Sort by menu.

Use the ← →↑↓ arrow keys to move the selection to PROCESSOR.

Press the Enter key to filter tasks by processor affinity.

Note: Workloads may consist of many individual child tasks. These child tasks are constituent to the workload and it is acceptable and preferred that they run on the same CPU.

Sanity Check 2: Monitor Kernel Interrupts¶

List unwanted interrupt sources by monitoring the number of interrupts that occur on a CPU:

$ watch -n 0.1 cat /proc/interrupts

Expected outcome:

This command polls the processor every 100 milliseconds and displays interrupt counts. Ideally, isolated CPUs 1, 2, and 3 should not show any incrementing interrupt count. In practice, the Linux “Local timer interrupt” might still occur, but properly prioritized workloads should not be impacted.
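The same check can be automated by sampling a line of `/proc/interrupts` twice and comparing the per-CPU counters. The sketch below assumes the usual layout of that file (a label ending in a colon, one counter per CPU, then a description). The function names are hypothetical, and `ncpus` should be the number of CPU columns in the header so that a description starting with a digit is not mistaken for a counter.

```c
#include <stdlib.h>
#include <string.h>

#define MAX_CPUS 256

/* Parse up to max_cpus per-CPU counters from one /proc/interrupts line.
 * Returns the number of counters parsed, or -1 if the line has no label. */
static int parse_irq_counts(const char *line, unsigned long long *counts,
                            int max_cpus)
{
    const char *p = strchr(line, ':');
    int n = 0;

    if (p == NULL)
        return -1;
    p++;
    while (n < max_cpus) {
        char *end;

        while (*p == ' ' || *p == '\t')
            p++;
        if (*p < '0' || *p > '9')
            break;                          /* reached the description */
        counts[n] = strtoull(p, &end, 10);
        if (*end != ' ' && *end != '\t' && *end != '\n' && *end != '\0')
            break;                          /* token is not a pure number */
        n++;
        p = end;
    }
    return n;
}

/* Compare two samples of the same line. Returns 1 if the counter of the
 * given CPU increased, 0 if it did not, and -1 if it cannot be read. */
static int irq_fired_on_cpu(const char *before, const char *after,
                            int cpu, int ncpus)
{
    unsigned long long a[MAX_CPUS], b[MAX_CPUS];
    int na, nb;

    if (ncpus > MAX_CPUS)
        ncpus = MAX_CPUS;
    na = parse_irq_counts(before, a, ncpus);
    nb = parse_irq_counts(after, b, ncpus);
    if (cpu < 0 || cpu >= na || cpu >= nb)
        return -1;
    return b[cpu] > a[cpu];
}
```

Running `irq_fired_on_cpu()` over every line for the isolated CPU, with samples taken a few seconds apart, yields the list of interrupt sources that still reach that core.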

Sanity Check 3: Determine CPU LLC Cache Allocation Preset¶

Note: If target system CPU supports CAT, it can help in reducing the worst case jitter. Refer to Cache Allocation Technology for description and usage.

As an admin user:

Verify whether the cache partitioning configuration is per-CPU or per-CPU modules to mitigate LLC cache misses resulting in thread execution overhead, page-fault scheduling penalties, and so on:

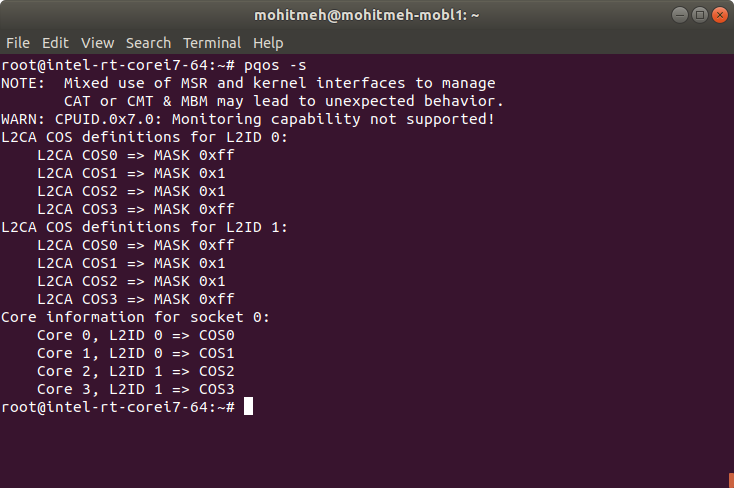

$ pqos -s

Expected outcome:

The following is the output from a target system utilizing CAT:

Sanity Check 4: Check IA UEFI Firmware Setting¶

Modify the BIOS menu settings of UEFI firmware to improve the target system’s real-time performance.

As an admin user:

Verify whether the CPU’s SpeedStep/Speed Shift is turned OFF, the CPU frequency is fixed, and the CPU always stays in the C0 state while running:

Expected outcome: Refer to Recommended ECI BIOS Optimizations.

Verify whether hyper-threading is disabled:

Expected outcome: Refer to Recommended ECI BIOS Optimizations.

Verify the North-complex power management policy on Gfx state/frequency and Bus Fabric (for example, Gersville/GV and so on).

Expected outcome: Refer to Recommended ECI BIOS Optimizations.

Check the North-complex IP - PCIe ASPM, USB PM, and so on (varies based on OEM and SKUs):

Expected outcome: Refer to the power management policy in Recommended ECI BIOS Optimizations.

Note that the Linux kernel can override the BIOS settings if the related hardware registers are exposed to kernel space.

Note: The items displayed in the BIOS menu vary among different board vendors. OEM might hide some useful configuration items and you might not be able to modify those.

Sanity Check 5: Check Linux Kernel Command Line Parameters¶

Certain kernel boot parameters should be added for tuning the real-time performance.

As an admin user:

Review the kernel boot command line and fix it as documented in ECI Kernel Boot Optimizations, which isolates CPUs 1, 2, and 3:

`i915.`: Turns power management ON and OFF

`processor*.` and `intel*.`: Power saving and clock gating features

`isolcpus`: CPU isolation

`irqaffinity`: CPU interrupt affinity

Expected outcome: The following is an example with CPU 1 isolated (reserved for real-time process) and binding IRQ affinity to CPU 0:

i915.enable_rc6=0 i915.enable_dc=0 i915.disable_power_well=0 i915.enable_execlists=0 i915.powersave=0 processor.max_cstate=0 intel.max_cstate=0 processor_idle.max_cstate=0 intel_idle.max_cstate=0 clocksource=tsc tsc=reliable nmi_watchdog=0 nosoftlockup intel_pstate=disable noht nosmap mce=ignore_mce nohalt acpi_irq_nobalance noirqbalance vt.handoff=7 rcu_nocbs=1 rcu_nocb_poll nohz_full=1 isolcpus=1 irqaffinity=0 vt.handoff=1

Use `/etc/default/grub` to add the kernel command line to `GRUB_CMDLINE_LINUX=""`. Run `update-grub` before rebooting.

Note: Monitor the thermal condition of the target system after changing the BIOS and kernel command line. Lower the CPU frequency and use cooling apparatus if the CPU is too hot.

Sanity Check 6: Report Thread KPIs as Latency Histograms¶

The minimally invasive Linux tracing events are commonly used to report latency histogram of a particular thread over long runtime. For example:

Thread wakeup + scheduling + execution time KPIs overhead

Thread semaphore acquire/release performance

Thread WCET jitter

As an admin user:

Check if the kernel CONFIG enables `/sys/kernel/debug/tracing` to establish comparable KPI measurements across various Linux kernel runtimes, that is, PREEMPT_RT and COBALT:

    if [ -d /sys/kernel/debug/tracing ] ; then echo PASS; else echo FAIL; fi

Expected outcome: PASS

Check the trace-event ram-buffer records of multi-threaded scheduling timeline for time precision (that is, nanosecond TSC-clock epoch-time):

    echo nop > /sys/kernel/debug/tracing/current_tracer
    echo 1 > /sys/kernel/debug/tracing/events/sched/sched_wakeup/enable
    echo 1 > /sys/kernel/debug/tracing/events/sched/sched_switch/enable
    echo 1 > /sys/kernel/debug/tracing/tracing_on
    sleep 5
    echo 0 > /sys/kernel/debug/tracing/tracing_on
    echo 0 > /sys/kernel/debug/tracing/events/sched/sched_wakeup/enable
    echo 0 > /sys/kernel/debug/tracing/events/sched/sched_switch/enable

Export all records to a file:

    cat /sys/kernel/debug/tracing/trace > ~/ftrace_buffer_dump.txt

Expected outcome:

Task2-1891 ( 1026) [000] d..h2.. 6528.499461: hrtimer_cancel: hrtimer=ffffb09ec138be58 Task2-1891 ( 1026) [000] d..h1.. 6528.499461: hrtimer_expire_entry: hrtimer=ffffb09ec138be58 function=hrtimer_wakeup now=6528499005755 ts0--> Task2-1891 ( 1026) [000] d..h2.. 6528.499461: sched_waking: comm=Task pid=1890 prio=33 target_cpu=000 Task2-1891 ( 1026) [000] d..h3.. 6528.499462: sched_wakeup: comm=Task pid=1890 prio=33 target_cpu=000 Task2-1891 ( 1026) [000] d..h1.. 6528.499462: hrtimer_expire_exit: hrtimer=ffffb09ec138be58 Task2-1891 ( 1026) [000] d..h1.. 6528.499462: write_msr: 6e0, value 136d628c2e73e7 Task2-1891 ( 1026) [000] d..h1.. 6528.499463: local_timer_exit: vector=239 Task2-1891 ( 1026) [000] d...2.. 6528.499463: sched_waking: comm=ktimersoftd/0 pid=8 prio=98 target_cpu=000 Task2-1891 ( 1026) [000] d...3.. 6528.499463: sched_wakeup: comm=ktimersoftd/0 pid=8 prio=98 target_cpu=000 Task2-1891 ( 1026) [000] ....... 6528.499464: sys_exit: NR 202 = 1 Task2-1891 ( 1026) [000] ....1.. 6528.499464: sys_futex -> 0x1 Task2-1891 ( 1026) [000] ....... 6528.499472: sys_enter: NR 230 (1, 1, 7ff75266fdb0, 0, 2, 7fff10bda080) Task2-1891 ( 1026) [000] ....1.. 6528.499472: sys_clock_nanosleep(which_clock: 1, flags: 1, rqtp: 7ff75266fdb0, rmtp: 0) Task2-1891 ( 1026) [000] ....... 6528.499472: hrtimer_init: hrtimer=ffffb09ec13bbe58 clockid=CLOCK_MONOTONIC mode=ABS Task2-1891 ( 1026) [000] d...1.. 6528.499473: hrtimer_start: hrtimer=ffffb09ec13bbe58 function=hrtimer_wakeup expires=6528499988112 softexpires=6528499988112 mode=ABS Task2-1891 ( 1026) [000] d...1.. 6528.499473: write_msr: 6e0, value 136d628c2e1be1 Task2-1891 ( 1026) [000] d...1.. 6528.499474: rcu_utilization: Start context switch Task2-1891 ( 1026) [000] d...1.. 6528.499474: rcu_utilization: End context switch Task2-1891 ( 1026) [000] d...2.. 6528.499475: sched_switch: prev_comm=Task2 prev_pid=1891 prev_prio=33 prev_state=D ==> next_comm=Task next_pid=1890 next_prio=33 Task2-1891 ( 1026) [000] d...2.. 
6528.499475: x86_fpu_regs_deactivated: x86/fpu: ffff96a6199156c0 initialized: 1 xfeatures: 3 xcomp_bv: 800000000000001f Task2-1891 ( 1026) [000] d...2.. 6528.499475: write_msr: c0000100, value 7ff75292c700 Task2-1891 ( 1026) [000] d...2.. 6528.499476: x86_fpu_regs_activated: x86/fpu: ffff96a619913880 initialized: 1 xfeatures: 3 xcomp_bv: 800000000000001f Task-1890 ( 1026) [000] ....... 6528.499476: sys_exit: NR 230 = 0 Task-1890 ( 1026) [000] ....1.. 6528.499476: sys_clock_nanosleep -> 0x0 Task-1890 ( 1026) [000] ....... 6528.499482: sys_enter: NR 230 (1, 1, 7ff75292bdb0, 0, 2, 7fff10bda080) Task-1890 ( 1026) [000] ....1.. 6528.499483: sys_clock_nanosleep(which_clock: 1, flags: 1, rqtp: 7ff75292bdb0, rmtp: 0) Task-1890 ( 1026) [000] ....... 6528.499483: hrtimer_init: hrtimer=ffffb09ec138be58 clockid=CLOCK_MONOTONIC mode=ABS Task-1890 ( 1026) [000] d...1.. 6528.499483: hrtimer_start: hrtimer=ffffb09ec138be58 function=hrtimer_wakeup expires=6528500005368 softexpires=6528500005368 mode=ABS Task-1890 ( 1026) [000] d...1.. 6528.499483: rcu_utilization: Start context switch Task-1890 ( 1026) [000] d...1.. 6528.499484: rcu_utilization: End context switch ts1--> Task-1890 ( 1026) [000] d...2.. 6528.499484: sched_switch: prev_comm=Task prev_pid=1890 prev_prio=33 prev_state=D ==> next_comm=rcuc/0 next_pid=12 next_prio=98
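The interval between the `ts0-->` and `ts1-->` markers in the output above can be computed from the exported dump. The sketch below extracts the `seconds.microseconds:` timestamp from two trace lines and returns the difference in microseconds. It assumes the default ftrace text layout shown above and that the second line is not earlier than the first. The function names are hypothetical.

```c
#include <ctype.h>
#include <stdlib.h>
#include <string.h>

/* Find the first token of the form "<digits>.<digits>:" in a trace line
 * and store it as seconds. Returns 0 on success, -1 if none is found. */
static int parse_trace_timestamp(const char *line, double *seconds)
{
    const char *p = line;

    while (*p != '\0') {
        while (*p == ' ' || *p == '\t')
            p++;                                   /* skip separators */
        if (isdigit((unsigned char)*p)) {
            char *end;
            double v = strtod(p, &end);

            if (end != p && *end == ':' &&
                memchr(p, '.', (size_t)(end - p)) != NULL) {
                *seconds = v;
                return 0;
            }
        }
        while (*p != '\0' && *p != ' ' && *p != '\t')
            p++;                                   /* skip rest of token */
    }
    return -1;
}

/* Return the time from first_line to second_line in microseconds,
 * rounded to the nearest integer, or -1 if either line has no timestamp. */
static long long latency_usecs(const char *first_line, const char *second_line)
{
    double t0, t1;

    if (parse_trace_timestamp(first_line, &t0) != 0 ||
        parse_trace_timestamp(second_line, &t1) != 0)
        return -1;
    return (long long)((t1 - t0) * 1e6 + 0.5);
}
```

Applied to the two marked lines in the example output (6528.499461 and 6528.499484), the helper reports the span between the wake-up of the task and the moment it is switched out again.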

Review the print format specific to a trace event:

    cat /sys/kernel/debug/tracing/events/cobalt_core/sched_switch/format
    cat /sys/kernel/debug/tracing/events/cobalt_core/cobalt_switch_context/format

Expected outcome:

    name: cobalt_switch_context
    ID: 451
    format:
        field:unsigned short common_type; offset:0; size:2; signed:0;
        field:unsigned char common_flags; offset:2; size:1; signed:0;
        field:unsigned char common_preempt_count; offset:3; size:1; signed:0;
        field:int common_pid; offset:4; size:4; signed:1;

        field:struct xnthread * prev; offset:8; size:8; signed:0;
        field:struct xnthread * next; offset:16; size:8; signed:0;
        field:__data_loc char[] prev_name; offset:24; size:4; signed:1;
        field:__data_loc char[] next_name; offset:28; size:4; signed:1;

    print fmt: "prev=%p(%s) next=%p(%s)", REC->prev, __get_str(prev_name), REC->next, __get_str(next_name)

Enable a primary trace event as the hist:keys trigger start condition:

hist:keys trigger: Adds event data to a histogram instead of logging it to the trace buffer.

if (): Event filters narrow down the number of event triggers.

vals=: Variables evaluate and save multi-event quantities.

    $ echo 'hist:keys=common_pid:vals=$ts0,$root:ts0=common_timestamp.usecs if ( comm == "IEC_mainTask" )' >> \
        /sys/kernel/debug/tracing/events/sched/sched_wakeup/trigger

Expected outcome:

    $ cat /sys/kernel/debug/tracing/events/sched/sched_wakeup/hist
    # event histogram
    #
    # trigger info: hist:keys=common_pid:vals=hitcount,common_timestamp.usecs,pid:ts0=common_timestamp.usecs:sort=hitcount:size=2048:clock=global if ( name == "Task-1890" ) [active]
    #

    { common_pid: 1890 } hitcount: 146 common_timestamp: 8563360302

    Totals:
        Hits: 146
        Entries: 1
        Dropped: 0

Enable `synthetic_events` to create user-defined trace events:

    $ echo 'iectask_wcet u64 lat; pid_t pid;' > \
        /sys/kernel/debug/tracing/synthetic_events
    $ cat /sys/kernel/debug/tracing/events/synthetic/iectask_wcet/format

Expected outcome: Not applicable

Enable a secondary trace event as the hist:keys trigger stop condition and actions. Trigger actions inject quantities seamlessly back into the trace event subsystem:

onmatch().xxxx: Generates synthetic events.

onmax(): Saves maximum latency values and arbitrary context.

snapshot(): Generates a snapshot of a small portion of the ftrace buffer.

    $ echo 'hist:keys=common_pid:latency=common_timestamp.usecs-$ts0:onmatch(sched.sched_switch).iectask_wcet($latency,pid) if ( prev_comm == "IEC_mainTask" )' >> \
        /sys/kernel/debug/tracing/events/sched/sched_switch/trigger

Expected outcome: Not applicable

Report `synthetic_events` as a histogram sorted from minimum to maximum:

    $ echo 'hist:keys=pid,lat:sort=pid,lat' \
        >> /sys/kernel/debug/tracing/events/synthetic/iectask_wcet/trigger
    $ cat /sys/kernel/debug/tracing/events/synthetic/iectask_wcet/hist

Expected outcome:

    # event histogram
    #
    # trigger info: hist:keys=pid,lat:vals=hitcount:sort=pid,lat:size=2048 [active]
    #

    { pid: 854, lat: 6 } hitcount: 2
    { pid: 854, lat: 7 } hitcount: 109
    { pid: 854, lat: 8 } hitcount: 55
    { pid: 854, lat: 9 } hitcount: 6
    { pid: 854, lat: 10 } hitcount: 2

    Totals:
        Hits: 174
        Entries: 5
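Once the buckets are available, the usual KPIs (minimum, maximum, and mean latency) follow from a weighted pass over the histogram. The sketch below assumes the `lat` values are in microseconds, since the triggers use `common_timestamp.usecs`. The struct and function names are hypothetical.

```c
#include <stddef.h>

/* One histogram bucket: a latency value and how often it was observed. */
struct lat_bucket {
    unsigned int lat_us;
    unsigned long hitcount;
};

/* Summary KPIs derived from the buckets. */
struct lat_summary {
    unsigned long hits;
    unsigned int min_us;
    unsigned int max_us;
    double mean_us;
};

/* Compute total hits, minimum, maximum, and the hit-weighted mean.
 * Returns 0 on success, or -1 when there are no buckets or no hits. */
static int summarize_latency(const struct lat_bucket *b, size_t n,
                             struct lat_summary *out)
{
    unsigned long long weighted = 0;

    if (n == 0)
        return -1;
    out->hits = 0;
    out->min_us = b[0].lat_us;
    out->max_us = b[0].lat_us;
    for (size_t i = 0; i < n; i++) {
        if (b[i].lat_us < out->min_us)
            out->min_us = b[i].lat_us;
        if (b[i].lat_us > out->max_us)
            out->max_us = b[i].lat_us;
        out->hits += b[i].hitcount;
        weighted += (unsigned long long)b[i].lat_us * b[i].hitcount;
    }
    if (out->hits == 0)
        return -1;
    out->mean_us = (double)weighted / (double)out->hits;
    return 0;
}
```

Fed with the five buckets from the example output, the summary covers 174 hits spanning 6 to 10 microseconds. The maximum is the figure of interest for worst-case jitter, while the mean mainly helps when comparing kernel runtimes.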

Note: Some tips and tricks:

`<event>/trigger` syntax errors are reported under `<event>/hist` (for example, ERROR: Variable already defined: ts2).

Systematically erase each `<event>/trigger` using the '!' character before issuing another command into the same `<event>/trigger`, for example, echo '!hist:keys=thread:…' >> <event>/trigger.

Only one hist trigger per `<event>` can exist simultaneously.