Intel Embodied Intelligence SDK

Embodied Intelligence SDK is an intuitive, easy-to-use software stack designed to streamline the development of Embodied Intelligence products and applications on Intel platforms. The SDK provides developers with a comprehensive environment for efficiently developing, testing, and optimizing Embodied Intelligence software and algorithms. It also provides the necessary software frameworks, libraries, tools, Best Known Configurations (BKC), tutorials, and example code to facilitate AI solution development.

The Embodied Intelligence SDK includes the following features:

A comprehensive software platform, from the BSP and acceleration libraries to the SDK and reference demos, with documentation and developer tutorials;

Real-time BKC, Linux RT kernel and optimized EtherCAT;

Acceleration of traditional vision and motion planning on the CPU, reinforcement/imitation learning-based manipulation, and acceleration of AI-based vision and LLM/VLM workloads on the iGPU and NPU;

Typical workflows and examples including ACT/DP-based manipulation, LLM task planning, Pick & Place, ORB-SLAM3, etc.

Software Architecture

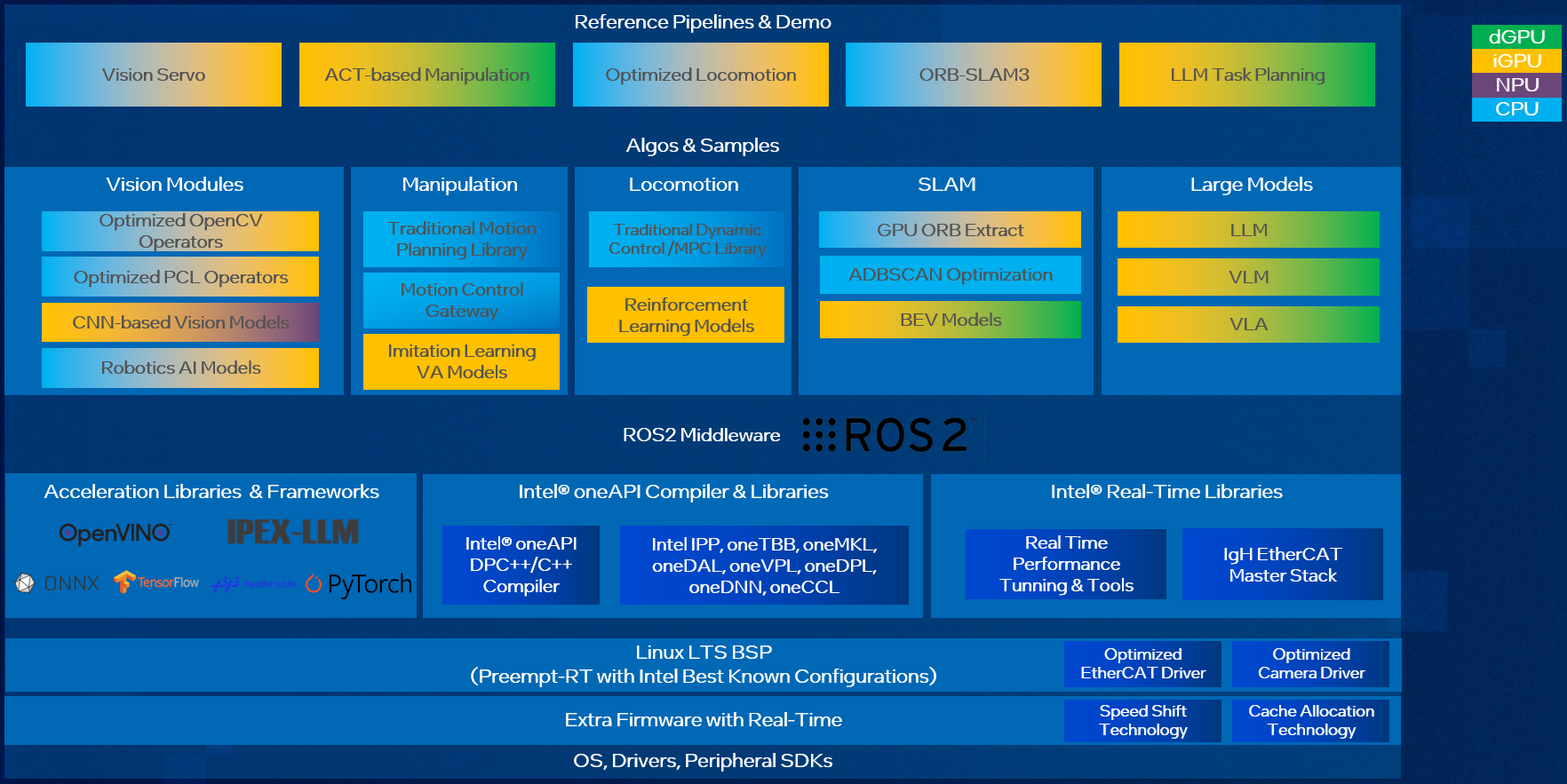

Below picture is high level software architecture of Embodied Intelligence SDK:

This software architecture is designed to power Embodied Intelligence systems by integrating computer vision, AI-driven manipulation, locomotion, SLAM, and large models into a unified framework. Built on ROS2 middleware, it takes advantage of Intel’s CPU, iGPU, dGPU, and NPU to optimize performance for robotics and AI applications. The stack includes high-performance AI frameworks, real-time libraries, and system-level optimizations, making it a comprehensive solution for Embodied Intelligence products.

At the highest level, the architecture is structured around key reference pipelines and demos that demonstrate its core capabilities. These include Vision Servo, which enhances robotic perception using AI-powered vision modules, and ACT-based Manipulation, which applies reinforcement learning and imitation learning to improve robotic grasping and movement. Optimized Locomotion leverages traditional control algorithms like MPC (Model Predictive Control) and LQR (Linear Quadratic Regulator), alongside reinforcement learning models for adaptive motion. Additionally, the ORB-SLAM3 pipeline focuses on real-time simultaneous localization and mapping, while LLM Task Planning integrates large language models for intelligent task execution.
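The paragraph above names LQR as one of the classic controllers behind the locomotion pipeline. As a minimal sketch (not the SDK's locomotion code), the snippet below computes a discrete-time LQR gain by iterating the Riccati recursion until it converges, then applies the feedback law u = -Kx to drive a hypothetical 1-D double integrator back to the origin. The plant, the weights `Q` and `R`, the time step, and the helper names are illustrative assumptions, and the helper assumes a single control input.

```python
# Minimal discrete-time LQR sketch (illustrative only, not the SDK's controller).
# Assumes a single control input, so (R + B^T P B) is a scalar.

def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def transpose(X):
    return [list(col) for col in zip(*X)]

def add(X, Y):
    return [[a + b for a, b in zip(rx, ry)] for rx, ry in zip(X, Y)]

def sub(X, Y):
    return [[a - b for a, b in zip(rx, ry)] for rx, ry in zip(X, Y)]

def dlqr(A, B, Q, R, iters=1000, tol=1e-10):
    """Iterate the discrete Riccati equation; return (K, P) for the law u = -K x."""
    At, Bt = transpose(A), transpose(B)

    def gain(P):
        BtP = matmul(Bt, P)                            # 1 x n
        s = R + matmul(BtP, B)[0][0]                   # scalar R + B^T P B
        return [[v / s for v in matmul(BtP, A)[0]]]    # 1 x n gain matrix

    P = [row[:] for row in Q]
    for _ in range(iters):
        K = gain(P)
        AtP = matmul(At, P)
        # P_next = Q + A^T P A - A^T P B K
        P_next = sub(add(Q, matmul(AtP, A)), matmul(matmul(AtP, B), K))
        delta = max(abs(a - b) for ra, rb in zip(P_next, P) for a, b in zip(ra, rb))
        P = P_next
        if delta < tol:
            break
    return gain(P), P

# Hypothetical 1-D double integrator: state = [position, velocity], input = acceleration.
dt = 0.05
A = [[1.0, dt], [0.0, 1.0]]
B = [[0.5 * dt * dt], [dt]]
Q = [[1.0, 0.0], [0.0, 0.1]]
R = 0.01

K, P = dlqr(A, B, Q, R)

# Closed-loop simulation starting from a 1 m position offset.
x = [[1.0], [0.0]]
for _ in range(200):
    u = -(K[0][0] * x[0][0] + K[0][1] * x[1][0])
    x = add(matmul(A, x), [[B[0][0] * u], [B[1][0] * u]])
print("K =", K, "final state =", x)
```

On a real legged robot, `A` and `B` would typically come from a model linearized around a nominal posture or gait; MPC builds on the same idea by re-solving a constrained finite-horizon problem at every control step instead of using one fixed gain.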

Beneath these pipelines, the software stack includes specialized AI and robotics modules. The vision module supports CNN-based models, OpenCV, and PCL operators for optimized perception, enabling robots to interpret their surroundings efficiently. The manipulation module combines traditional motion planning with AI-driven control, allowing robots to execute complex movements. For locomotion, the system blends classic control techniques with reinforcement learning models, ensuring smooth and adaptive movement. Meanwhile, SLAM components such as GPU ORB extraction and ADBSCAN optimization enhance mapping accuracy, and BEV (Bird’s Eye View) models contribute to improved spatial awareness. The large model module supports LLMs, Vision-Language Models (VLM), and Vision-Language-Action Models (VLA), enabling advanced reasoning and decision-making capabilities.
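ADBSCAN is understood here as an adaptive variant of DBSCAN (Density-Based Spatial Clustering of Applications with Noise); this document does not describe its adaptive parameters. The sketch below therefore shows only the classic DBSCAN algorithm on a few hypothetical 2-D points (for example, obstacle points projected from a depth sensor), with an assumed `eps` radius and `min_pts` threshold. It uses a brute-force O(n²) neighbor search and is not Intel's implementation.

```python
import math

NOISE = -1

def dbscan(points, eps, min_pts):
    """Classic DBSCAN: return one cluster label per point (-1 marks noise)."""
    n = len(points)
    labels = [None] * n  # None = not visited yet

    def neighbors(i):
        # Brute-force region query; the result includes point i itself.
        return [j for j in range(n) if math.dist(points[i], points[j]) <= eps]

    cluster = 0
    for i in range(n):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = NOISE            # may later be relabeled as a border point
            continue
        labels[i] = cluster              # i is a core point: start a new cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster      # border point: reachable, but not expanded
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_neighbors = neighbors(j)
            if len(j_neighbors) >= min_pts:
                queue.extend(j_neighbors)  # j is also a core point: keep expanding
        cluster += 1
    return labels

# Hypothetical points: two tight groups and one isolated outlier.
pts = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (0.1, 0.1),
       (5.0, 5.0), (5.1, 5.0), (5.0, 5.1),
       (10.0, 0.0)]
labels = dbscan(pts, eps=0.2, min_pts=3)
print(labels)  # → [0, 0, 0, 0, 1, 1, 1, -1]
```

A fixed `eps` is the main weakness this sketch leaves in place: sensor points grow sparser with range, which is the kind of problem an adaptive variant is meant to address.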

At the core of the system are ROS2 middleware and acceleration frameworks, which provide a standardized foundation for robotics development. The architecture is further enhanced by Intel’s AI acceleration libraries, including Intel® OpenVINO™ for deep learning inference and Intel® LLM Library for PyTorch* (IPEX-LLM) for optimized large model execution, along with compatibility with TensorFlow*, PyTorch*, and ONNX*. The Intel® oneAPI compiler and libraries offer high-performance computing capabilities, leveraging oneMKL for mathematical operations, oneDNN for deep learning, and oneTBB for parallel processing. Additionally, Intel’s real-time libraries provide low-latency execution, with tools for performance tuning and EtherCAT-based industrial communication.

To integrate with robotic hardware, the SDK runs on a real-time optimized Linux BSP. The BSP includes optimized EtherCAT and camera drivers, along with Intel-specific features such as Intel® Speed Shift Technology and Cache Allocation Technology to improve power efficiency and performance. These system-level enhancements give the software stack the responsiveness required for real-time robotics applications.

Overall, the Embodied Intelligence SDK provides a highly optimized, AI-driven framework for robotics and Embodied Intelligence, combining computer vision, motion planning, real-time processing, and large-scale AI models into a cohesive system. By leveraging Intel’s hardware acceleration and software ecosystem, it enables next-generation robotic applications with enhanced intelligence, efficiency, and adaptability.


Release Notes

The following sections describe the new and updated features in each release of the Intel® Embodied Intelligence SDK.

v25.36

Embodied Intelligence SDK v25.36 enhances model optimization capabilities with Intel® OpenVINO™ and provides typical workflows and examples, including Diffusion Policy (DP), Robotics Diffusion Transformer (RDT), Improved 3D Diffusion Policy (IDP3), Visual Servoing (CNS), and the LLM Robotic Demo. This release also updates the real-time optimized BKC to improve AI and control performance, and adds support for the Intel® Arc™ B-Series graphics card (B570).

New Features:

Updated the real-time optimization BKC, including BIOS and runtime optimizations, to balance performance when consolidating AI and control workloads.

Added support for the Intel® Arc™ B-Series (Battlemage) graphics card (B570).

Fixed a deadlock when reading i915 perf events in the Preempt-RT kernel.

Added new EtherCAT master stack features, including support for a user-space EtherCAT master and for multiple EtherCAT masters.

Added Diffusion Policy pipeline with OpenVINO optimization.

Added Robotics Diffusion Transformer (RDT) pipeline with OpenVINO optimization.

Added Improved 3D Diffusion Policy (IDP3) model with OpenVINO optimization.

Added Visual Servoing (CNS) model with OpenVINO optimization.

Provided new tutorials for typical AI model optimization with OpenVINO.

Initial ACRN enablement on the Arrow Lake (ARL) platform.

Added new Dockerfile to build containerized RDT pipeline.

Known Issues and Limitations

ACRN feature and performance

iGPU performance degradation is observed when the iGPU is passed through to a VM on ACRN.

Display becomes unresponsive in VMs when running concurrent AI workloads with iGPU SR-IOV enabled on ACRN.

The following model algorithms were added and optimized with Intel® OpenVINO™:

| Algorithm | Description |
|---|---|
| Qwen2.5VL | |
| Whisper | |
| FunASR (Automatic speech recognition) | Refer to the FunASR Setup in the LLM Robotics sample pipeline |
| Visual Servoing (CNS) | A graph neural network-based solution for visual servoing that uses explicit keypoint correspondences obtained from any detector-based feature matching method |
| Diffusion Policy (DP) | A visuomotor policy learning model that represents robot policies as conditional denoising diffusion processes |
| Improved 3D Diffusion Policy (IDP3) | A diffusion policy model with enhanced capabilities for 3D robotic manipulation tasks |
| Robotics Diffusion Transformer (RDT) | A diffusion-based foundation model for robotic manipulation |
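Diffusion Policy, IDP3, and RDT all generate actions by iterative denoising. The sketch below illustrates only that sampling loop: it starts from Gaussian noise and repeatedly applies a noise predictor conditioned on the observation, using the deterministic DDIM update for simplicity (the SDK pipelines may use a different scheduler). The noise predictor is a hypothetical oracle that knows the target action for each observation, standing in for a trained network (for example, one exported to OpenVINO IR), so the loop should recover that target exactly. The 50-step linear beta schedule and the `target_action` mapping are assumptions.

```python
import math
import random

# Assumed noise schedule: 50 steps, betas linear from 1e-4 to 0.2,
# so alpha_bar at the last step is close to 0 (nearly pure noise).
T = 50
BETAS = [1e-4 + (0.2 - 1e-4) * t / (T - 1) for t in range(T)]
alpha_bars = []
_prod = 1.0
for _b in BETAS:
    _prod *= 1.0 - _b
    alpha_bars.append(_prod)


def target_action(obs):
    """Hypothetical mapping from an observation to the 'correct' 2-D action."""
    return [0.5 * obs[0], -0.25 * obs[1]]


def predict_noise(x_t, t, obs):
    """Oracle stand-in for a trained, observation-conditioned noise predictor."""
    a = alpha_bars[t]
    x0 = target_action(obs)
    return [(xi - math.sqrt(a) * x0i) / math.sqrt(1.0 - a) for xi, x0i in zip(x_t, x0)]


def sample_action(obs, rng):
    """Denoise from x_T ~ N(0, I) to an action with deterministic DDIM steps (eta = 0)."""
    x = [rng.gauss(0.0, 1.0) for _ in range(2)]
    for t in reversed(range(T)):
        eps = predict_noise(x, t, obs)
        a_t = alpha_bars[t]
        a_prev = alpha_bars[t - 1] if t > 0 else 1.0
        # Estimate the clean action, then move to the previous (less noisy) step.
        x0_hat = [(xi - math.sqrt(1.0 - a_t) * ei) / math.sqrt(a_t) for xi, ei in zip(x, eps)]
        x = [math.sqrt(a_prev) * x0i + math.sqrt(1.0 - a_prev) * ei
             for x0i, ei in zip(x0_hat, eps)]
    return x


rng = random.Random(0)
obs = [1.0, 2.0]
action = sample_action(obs, rng)
print(action)  # approximately target_action(obs), i.e. [0.5, -0.5]
```

With a learned predictor the recovered action is only as good as the network, and the number of denoising steps becomes a direct latency cost per action, which is one reason inference optimization matters for these models.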

The following pipelines were added:

| Pipeline Name | Description |
|---|---|
| Diffusion Policy | An innovative method for generating robot actions by formulating visuomotor policy learning as a conditional denoising diffusion process |
| RDT | An RDT pipeline for evaluating the VLA model on a simulation task |
| LLM Robotics | A code generation demo for robotics that interacts with a chatbot, using AI technologies such as large language models (Phi-4) and computer vision (SAM, CLIP) |

v25.15

Embodied Intelligence SDK v25.15 provides the necessary software frameworks, libraries, tools, BKC, tutorials, and example code to facilitate embodied intelligence solution development on Intel® Core Ultra Series 2 processors (Arrow Lake-H). It provides the Intel Linux LTS kernel v6.12.8 with Preempt-RT, supports Canonical® Ubuntu® 22.04, and introduces initial support for ROS2 Humble. It supports the optimization of many models with Intel® OpenVINO™ and provides typical workflows and examples, including ACT manipulation and ORB-SLAM3.

New Features:

Provided Linux* OS 6.12.8 BSP with Preempt-RT

Provided Real-time optimization BKC

Optimized IgH EtherCAT master for Linux* kernel v6.12

Added ACT manipulation pipeline with Intel® OpenVINO™/Intel® Extension for PyTorch* optimization

Added ORB-SLAM3 pipeline focused on real-time simultaneous localization and mapping

Provided optimization tutorials for typical AI models with Intel® OpenVINO™

Known Issues and Limitations

There is a known deadlock risk when using intel_gpu_top to read i915 perf events in the Preempt-RT kernel. This will be fixed in the next release.

The following model algorithms were optimized with Intel® OpenVINO™:

| Algorithm | Description |
|---|---|
| | CNN-based object detection |
| | CNN-based object detection |
| | CNN-based object detection |
| | Transformer-based segmentation |
| | Extends SAM to video segmentation and object tracking with cross-attention to memory |
| | Lightweight substitute for SAM |
| | Lightweight substitute for SAM (same model architecture as SAM; refer to the OpenVINO SAM tutorials for model export and application) |
| | CNN-based segmentation and diffusion model |
| | Transformer-based object detection |
| | Transformer-based object detection |
| | Transformer-based image classification |
| ACT | An end-to-end imitation learning model designed for fine manipulation tasks in robotics |
| | A self-supervised framework for interest point detection and description in images, suitable for a large number of multiple-view geometry problems in computer vision |
| | A model designed for efficient and accurate feature matching in computer vision tasks |
| | A Bird's Eye View (BEV) perception model that provides a comprehensive understanding of the spatial layout of, and relationships between, objects in a scene |
| | A deep learning model that infers 3D information from 2D images |

The following pipelines were added:

| Pipeline Name | Description |
|---|---|
| ACT | Imitation learning pipeline using the Action Chunking with Transformers (ACT) algorithm to train and evaluate policies in a simulator or real robot environment, with Intel optimizations |
| ORB-SLAM3 | One of the most popular real-time feature-based SLAM libraries, able to perform visual, visual-inertial, and multi-map SLAM with monocular, stereo, and RGB-D cameras, using pinhole and fisheye lens models |
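The ACT pipeline relies on action chunking: at each control step the policy predicts a chunk of the next k actions, and the ACT paper blends overlapping chunks with temporal ensembling, weighting each prediction by w_i = exp(-m·i), where i = 0 is the oldest prediction. The sketch below shows only that blending step; `TemporalEnsembler`, `fake_policy`, the chunk size of 4, and m = 0.01 are hypothetical choices, and this is not the SDK pipeline's code.

```python
import math
from collections import defaultdict


class TemporalEnsembler:
    """Hypothetical helper: blend overlapping action chunks, ACT-style."""

    def __init__(self, m=0.01):
        self.m = m
        # step index -> actions predicted for that step, oldest prediction first
        self.predictions = defaultdict(list)

    def add_chunk(self, t, chunk):
        """Store a chunk predicted at step t; chunk[j] is the action for step t + j."""
        for j, action in enumerate(chunk):
            self.predictions[t + j].append(action)

    def action_at(self, t):
        """Return the exponentially weighted average of all predictions for step t."""
        preds = self.predictions.pop(t)
        weights = [math.exp(-self.m * i) for i in range(len(preds))]  # i = 0 is oldest
        total = sum(weights)
        return [sum(w * p[d] for w, p in zip(weights, preds)) / total
                for d in range(len(preds[0]))]


def fake_policy(t, k=4):
    # Hypothetical stand-in for the ACT network: predicts k future 1-D actions,
    # all equal to the current step index, so successive chunks disagree.
    return [[float(t)] for _ in range(k)]


ens = TemporalEnsembler(m=0.01)
executed = []
for t in range(6):
    ens.add_chunk(t, fake_policy(t))
    executed.append(ens.action_at(t)[0])
print(executed)
```

With a small m the weights stay close to uniform, so the executed action is a smoothed average of all overlapping predictions that leans slightly toward the older ones.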