Intel® Edge Insights for Industrial

Intel® Edge Insights for Industrial (EII) enables the rapid deployment of solutions that find and reveal insights on compute devices outside data centers. The name of the software reflects its purpose: Edge refers to systems that exist outside a data center, and Insights refers to understanding relationships.

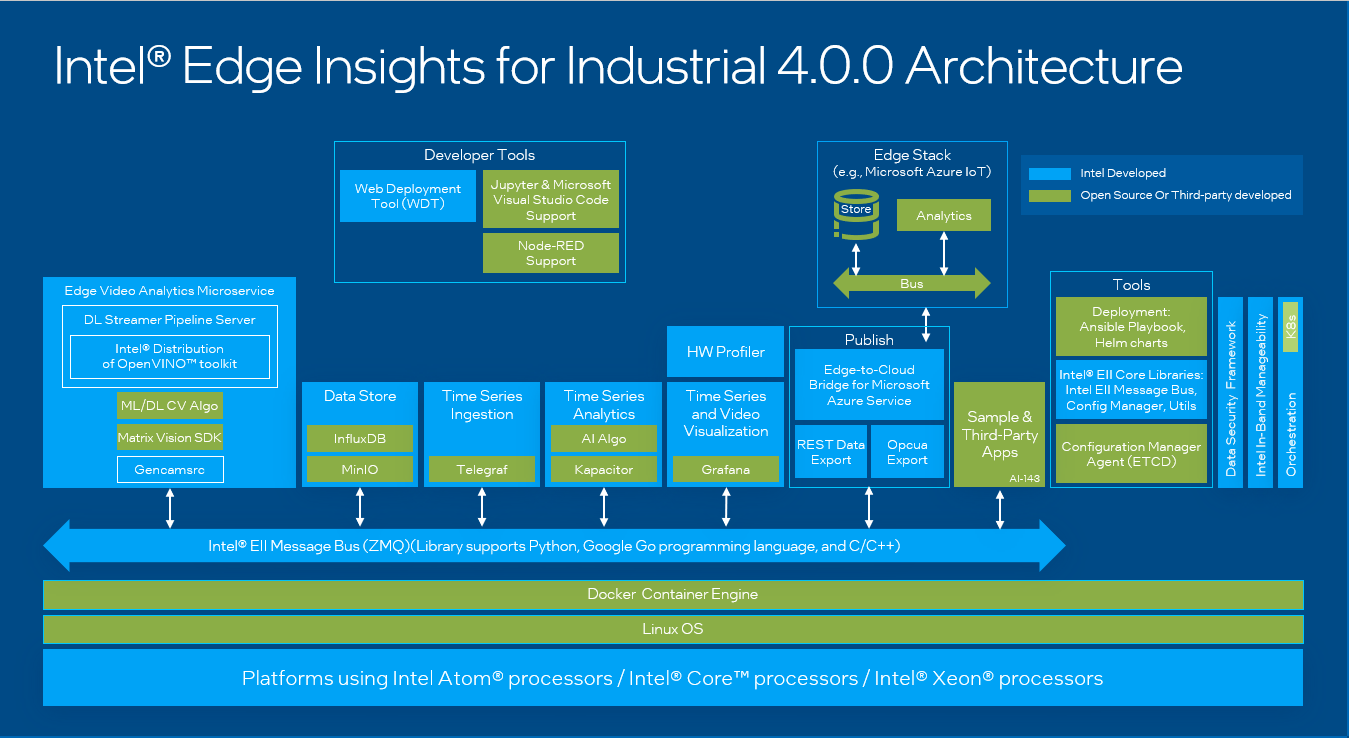

This software consists of a set of pre-integrated ingredients optimized for Intel architecture. It includes modules that enable data collection, storage, and analytics for both time-series and video data, as well as the ability to act on these insights by sending downstream commands to tools or devices.

Attention

Intel® Edge Insights for Industrial is an independent product. For help and support with EII, refer to the following:

Product page: https://www.intel.com/content/www/us/en/internet-of-things/industrial-iot/edge-insights-industrial.html

Online documentation: https://eiidocs.intel.com/

Community forum: https://community.intel.com/t5/Intel-Edge-Software-Hub/bd-p/edge-software-hub

Intel® EII Overview

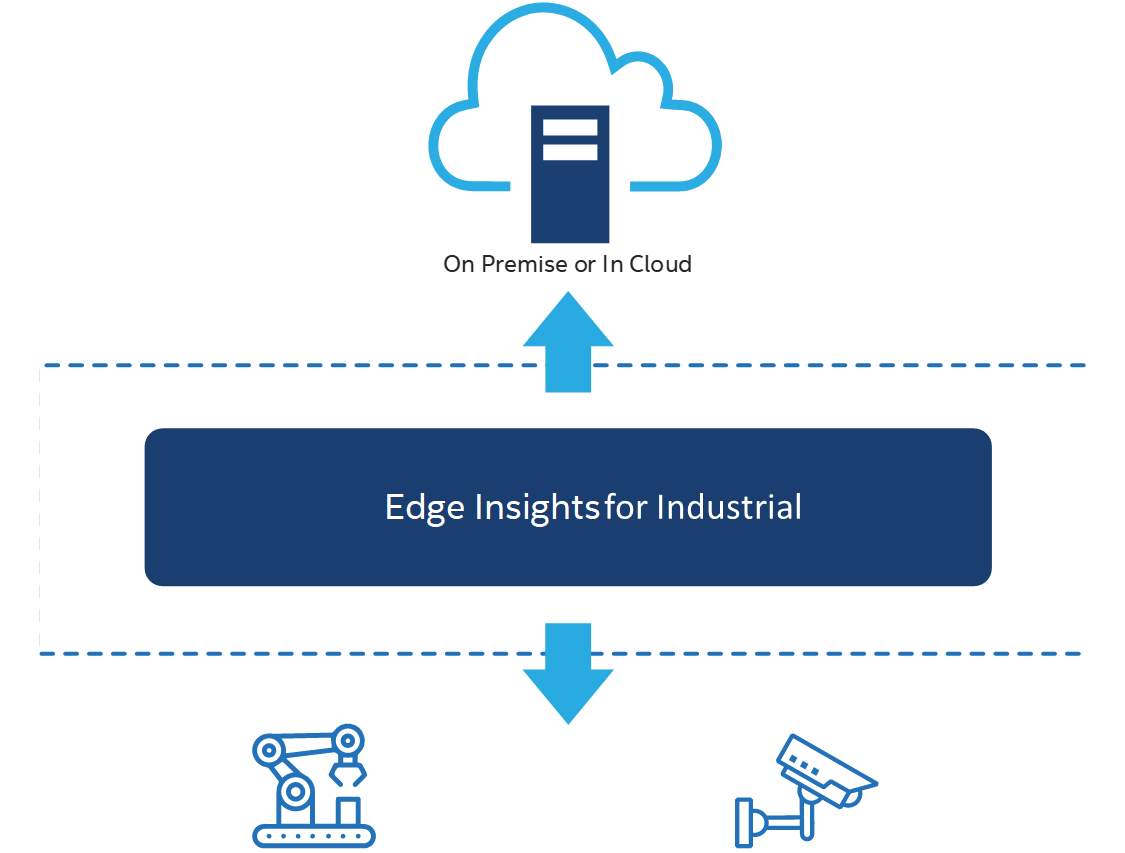

EII has both northbound and southbound data connections. As shown in the figure above, supervisory applications such as Manufacturing Execution Systems (MES), Work In Progress (WIP) management systems, deep learning training systems, and the cloud (whether on-premises or in a data center) make northbound connections to EII. Southbound connections from EII are typically made to IoT devices, such as a programmable logic controller (PLC), a camera, or other sensors and actuators.

The topology of the northbound relationship with MES, WIP management systems, or cloud applications is typically a star (hub and spoke). In this arrangement, multiple EII nodes communicate with one or more of these supervisory systems.

Incremental learning, for example, takes full advantage of this northbound relationship. In solutions that use deep learning, offline learning is needed to fine-tune or continuously improve the algorithm. To do this, send the insight results to an on-premises or cloud-based training framework, periodically retrain the model with the full dataset, and then update the model on EII. The figure below provides an example of this sequence.

Note: It is important to use the full dataset, including the original data used during initial training of the model; otherwise, the model may over-fit. For more information on model development best practices, refer to the Introduction to Machine Learning Course.

Intel® EII Architecture

Consider EII as a set of containers. The following figure depicts these containers as dashed lines around the components held by each container. The high-level functions of EII are data ingestion (video and time-series), data storage, data analytics, and data and device management.

This EII configuration is designed for deployment near the site of data generation (for example, a PLC, robotic arm, tool, video camera, or sensor). The EII Docker containers perform video and time-series data ingestion and real-time¹ analytics, and they enable closed-loop control².

Note: ¹ Real-time measurements, as tested by Intel, are as low as 50 milliseconds. ² EII closed-loop control functions do not provide deterministic control.

Intel® EII Prerequisites

For more information on the prerequisites, refer to the EII Getting Started Guide.

Note: If the EII device is behind an HTTP proxy server, you need to configure the proxy settings. Refer to the Docker documentation for instructions.

Intel® EII Minimum System Requirements

Processor - 8th generation Intel® Core™ processor or later with Intel® HD Graphics, or an Intel® Xeon® processor

Memory - At least 16 GB

Storage - Minimum 128 GB; 256 GB recommended

Network - A working internet connection

Note

To use EII, ensure that you are connected to the internet.

The recommended RAM capacity for the Video Analytics pipeline is 16 GB. The recommended RAM for the Time Series Analytics pipeline is 4 GB with Intel® Atom processors.

EII is validated on Canonical® Ubuntu® 20.04 (Focal Fossa). You can install the EII stack on other Linux distributions that support Docker CE and the docker compose tools.
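You can script the memory, storage, and Docker checks from the requirements above before installing. The following Python sketch is a hypothetical helper and is not part of EII. It reads total physical memory with os.sysconf (Linux), measures the size of the filesystem that holds / with shutil.disk_usage, and looks for a docker executable on the PATH. It does not check the processor generation or the OS version. It also assumes the thresholds are decimal gigabytes (10^9 bytes).

```python
import os
import shutil
from typing import List, Optional

GB = 10**9  # assumption: thresholds are decimal gigabytes

# Thresholds taken from the minimum system requirements above.
MIN_MEMORY_GB = 16
MIN_STORAGE_GB = 128
RECOMMENDED_STORAGE_GB = 256


def check_requirements(memory_bytes: int, storage_bytes: int,
                       docker_path: Optional[str]) -> List[str]:
    """Return problems and warnings; an empty list means every check passed."""
    findings = []
    if memory_bytes < MIN_MEMORY_GB * GB:
        findings.append(
            f"memory: {memory_bytes / GB:.1f} GB found, {MIN_MEMORY_GB} GB required")
    if storage_bytes < MIN_STORAGE_GB * GB:
        findings.append(
            f"storage: {storage_bytes / GB:.1f} GB found, {MIN_STORAGE_GB} GB required")
    elif storage_bytes < RECOMMENDED_STORAGE_GB * GB:
        findings.append(
            f"storage: {storage_bytes / GB:.1f} GB found, "
            f"{RECOMMENDED_STORAGE_GB} GB recommended")
    if docker_path is None:
        findings.append("docker: executable not found on PATH")
    return findings


def probe_host(path: str = "/") -> List[str]:
    """Measure the running Linux host and check it against the thresholds."""
    # Total physical memory = page size * number of physical pages.
    memory = os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")
    # Size of the filesystem containing `path`, not necessarily the whole disk.
    storage = shutil.disk_usage(path).total
    return check_requirements(memory, storage, shutil.which("docker"))


if __name__ == "__main__":
    for finding in probe_host():
        print("WARNING:", finding)
```

If the script prints nothing, all three checks passed.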

Install Intel® EII

Make sure that the Intel® EII Prerequisites are met.

Intel® EII requires the Docker Engine. Install Docker if it is not already installed.

Install the Docker Compose plugin on the target system.

Set up the ECI repository, then run either of the following commands to install this component:

- Install from meta-package

$ sudo apt install eci-inference-eii

- Install from individual Deb packages

$ sudo apt install eii

Deploy Intel® EII


See also

This is an abridged guide based on the EII Advanced Guide. For a complete guide, refer to the EII documentation.

Prepare Intel® EII

Log in to the target system and make sure that you have installed EII.

Change ownership of the EII directory to the current user:

$ sudo chown -R $USER:$USER /opt/Intel-EdgeInsights

Navigate to the /opt/Intel-EdgeInsights/IEdgeInsights/build directory:

$ cd /opt/Intel-EdgeInsights/IEdgeInsights/build

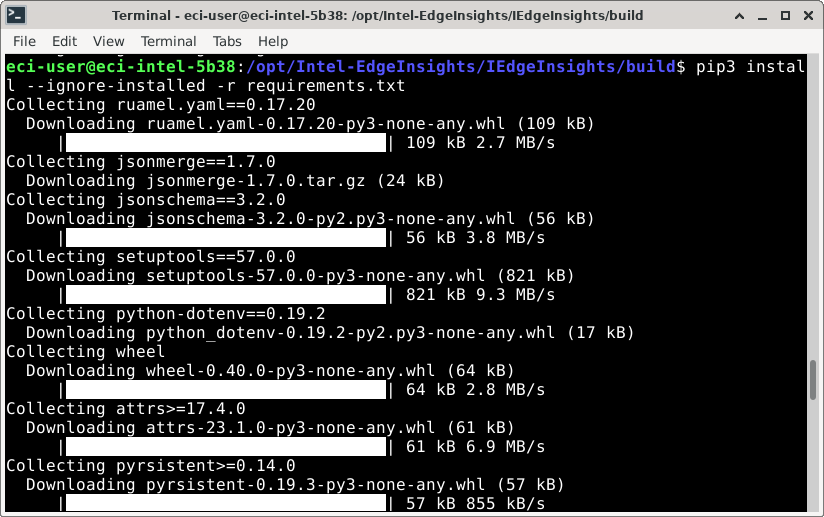

Install the Python requirements:

$ pip3 install --ignore-installed -r requirements.txt

EII requires a web server to host resources used during the build process. This web server will be hosted on the target system in a container environment. Modify the environment file .env to update the PKG_SRC variable with the target system web server address.

Port 8899 is used in this example. If port 8899 is already in use, change it to any available port.

$ echo $(ip route get 1 | sed -n 's/^.*src \([0-9.]*\) .*$/\1/p') | xargs -I {} sed -i "s/PKG_SRC=.*$/PKG_SRC=http:\/\/{}:8899/" .env
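If you prefer not to rely on the sed pipeline, the following Python sketch performs the same PKG_SRC update. It is an illustrative alternative, not an EII tool. detect_host_ip uses a common technique: it connects a UDP socket, which sends no packets, so the kernel picks the outbound source address. That address should usually match the one ip route get 1 reports. The probe address 1.1.1.1 is an assumption; any address reached through the default route works. Unlike the sed command, set_pkg_src only matches PKG_SRC at the start of a line, and it raises an error if the variable is missing.

```python
import re
import socket
from pathlib import Path


def detect_host_ip(probe: str = "1.1.1.1") -> str:
    """Return the source IPv4 address the kernel would use to reach `probe`.

    connect() on a UDP socket sends no packets; it only selects a route,
    much like `ip route get` does.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.connect((probe, 80))
        return sock.getsockname()[0]


def set_pkg_src(env_text: str, host: str, port: int = 8899) -> str:
    """Return env_text with the PKG_SRC line pointing at http://host:port."""
    new_text, count = re.subn(
        r"^PKG_SRC=.*$",
        lambda _match: f"PKG_SRC=http://{host}:{port}",
        env_text,
        flags=re.MULTILINE,
    )
    if count == 0:
        raise ValueError("no PKG_SRC line found in .env")
    return new_text


if __name__ == "__main__":
    # Run from /opt/Intel-EdgeInsights/IEdgeInsights/build, like the sed command.
    env_file = Path(".env")
    env_file.write_text(set_pkg_src(env_file.read_text(), detect_host_ip()))
```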

Note

You may also want to modify the service credentials in the environment file .env. They are pre-populated, but you should change them before using EII in production.

# Service credentials
# These are required to be updated in both DEV mode & PROD mode
ETCDROOT_PASSWORD=etcdrootPassword
INFLUXDB_USERNAME=influxdbUsername
INFLUXDB_PASSWORD=influxdbPassword
MINIO_ACCESS_KEY=minioAccessKey
MINIO_SECRET_KEY=minioSecretKey
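The following minimal sketch replaces the pre-populated credentials with random values from Python's secrets module. The key names come from the .env excerpt above. The value formats are assumptions chosen to stay within common limits: lengths, a hex-only access key, and a username made of a letter prefix plus hex. Check the EII documentation for any service-specific constraints. The script edits .env in place in the current directory, so back up the file first. It deliberately does not print the generated secrets.

```python
import re
import secrets
from pathlib import Path
from typing import Dict


def generate_credentials() -> Dict[str, str]:
    """Create random values for the service credentials listed in .env."""
    return {
        "ETCDROOT_PASSWORD": secrets.token_urlsafe(24),    # 32 URL-safe characters
        "INFLUXDB_USERNAME": "eii" + secrets.token_hex(6),  # letter prefix, then hex
        "INFLUXDB_PASSWORD": secrets.token_urlsafe(24),
        "MINIO_ACCESS_KEY": secrets.token_hex(8),          # 16 hex characters
        "MINIO_SECRET_KEY": secrets.token_urlsafe(24),
    }


def apply_env_values(env_text: str, values: Dict[str, str]) -> str:
    """Replace each KEY=... line named in `values`; other lines are untouched."""
    for key, value in values.items():
        env_text, count = re.subn(
            rf"^{re.escape(key)}=.*$",
            lambda _match, k=key, v=value: f"{k}={v}",
            env_text,
            flags=re.MULTILINE,
        )
        if count == 0:
            raise KeyError(f"{key} not found in .env")
    return env_text


if __name__ == "__main__":
    env_file = Path(".env")
    env_file.write_text(apply_env_values(env_file.read_text(), generate_credentials()))
    print("Updated credentials in", env_file.resolve())
```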

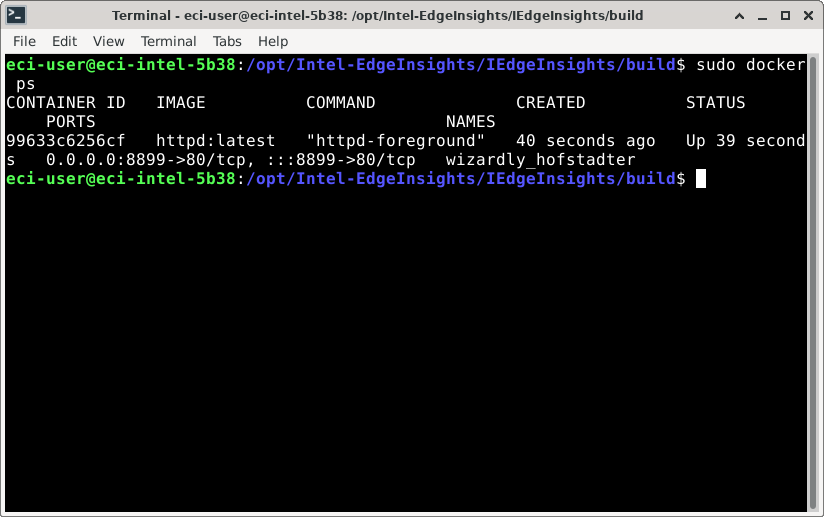

Host the EII resources using a web server in a container environment. Port 8899 is used in this example. If you changed this port in the previous step, change it here accordingly.

$ sudo docker run -dit --rm -h 127.0.0.1 -p 8899:80 -v /opt/Intel-EdgeInsights/IEdgeInsights/CoreLibs/:/usr/local/apache2/htdocs/ httpd:latest

Check that the web server container is running:

$ sudo docker ps
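Besides docker ps, you can confirm that the web server actually answers on the port used in PKG_SRC. The following Python helper is hypothetical and is not part of EII. It uses only urllib from the standard library and treats any HTTP response as proof that the server is up, including an error status such as 404. It bypasses configured HTTP proxies, on the assumption that the server is reached on the local host or LAN.

```python
import http.client
import sys
import urllib.error
import urllib.request


def is_serving(url: str, timeout: float = 3.0) -> bool:
    """Return True if an HTTP server answers at `url`, whatever the status code."""
    # An empty ProxyHandler disables proxies taken from the environment.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(url, timeout=timeout):
            return True
    except urllib.error.HTTPError as err:
        # The server replied with an error status (e.g. 404), so it is running.
        err.close()
        return True
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        # Connection refused, unreachable host, timeout, or a non-HTTP reply.
        return False


if __name__ == "__main__":
    # Default matches the port published by the docker run example above.
    target = sys.argv[1] if len(sys.argv) > 1 else "http://127.0.0.1:8899/"
    print("web server reachable" if is_serving(target) else "web server NOT reachable")
```

To test the address written to PKG_SRC, pass the same URL as the first argument.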

Generate and Build Intel® EII scenario

Generate microservice configurations

EII uses docker compose and config files to deploy microservices. These files are auto-generated from a scenario file, which determines the microservices to be deployed. You can provide your own scenario or select one of the example scenarios in the following tables.

Main Scenarios

| Scenario | File |
|---|---|
| Video + Timeseries | video-timeseries.yml |
| Video | video.yml |
| Timeseries | time-series.yml |

Video Pipeline Scenarios

| Scenario | File |
|---|---|
| Video Streaming | video-streaming.yml |
| Video streaming with history | video-streaming-storage.yml |
| Video streaming with Azure | video-streaming-azure.yml |
| Video streaming & custom UDFs | video-streaming-all-udfs.yml |

Build microservice configurations for a specific scenario:

$ python3 builder.py -f ./usecases/<scenario_file>

This example uses the Video Streaming scenario:

$ python3 builder.py -f ./usecases/video-streaming.yml

Note: Additional capabilities for scenarios exist. For example, you may modify/add microservices or deploy multiple instances of microservices. For more information, refer to the EII documentation.

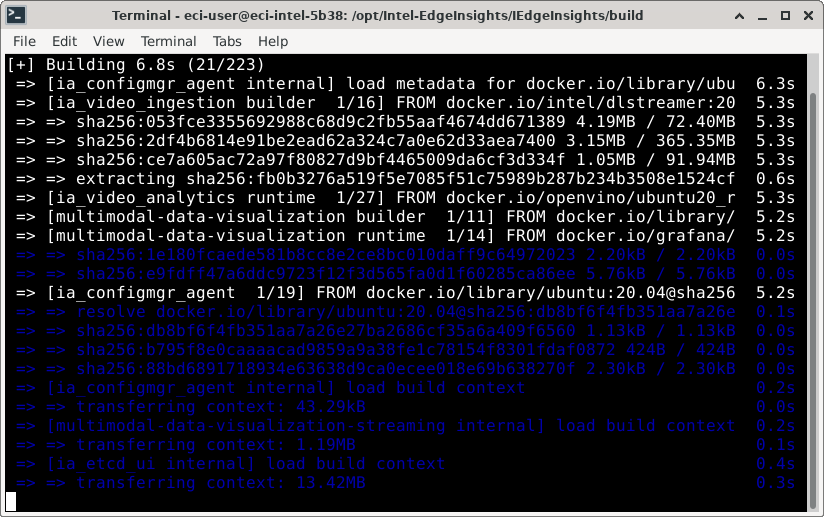

Build EII scenario

$ sudo docker compose build

Note: Building the microservices may take longer than 30 minutes. The build time depends primarily on network and CPU performance.
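You can chain the generate and build steps in a small wrapper so that the build only starts if configuration generation succeeds. This Python sketch is hypothetical and assumes it runs from /opt/Intel-EdgeInsights/IEdgeInsights/build. It accepts the example scenario files from the tables above, or any file that exists under usecases/. It calls the same two commands shown above through subprocess.

```python
import subprocess
import sys
from pathlib import Path
from typing import List

# Example scenario files from the scenario tables.
EXAMPLE_SCENARIOS = {
    "video-timeseries.yml", "video.yml", "time-series.yml",
    "video-streaming.yml", "video-streaming-storage.yml",
    "video-streaming-azure.yml", "video-streaming-all-udfs.yml",
}


def build_commands(scenario_file: str) -> List[List[str]]:
    """Return the generate and build commands for one scenario, in order."""
    custom = Path("usecases", scenario_file).is_file()
    if scenario_file not in EXAMPLE_SCENARIOS and not custom:
        raise ValueError(f"unknown scenario file: {scenario_file}")
    return [
        ["python3", "builder.py", "-f", f"./usecases/{scenario_file}"],
        ["sudo", "docker", "compose", "build"],
    ]


def generate_and_build(scenario_file: str) -> None:
    """Run each command in turn; check=True stops at the first failure."""
    for command in build_commands(scenario_file):
        subprocess.run(command, check=True)


if __name__ == "__main__":
    generate_and_build(sys.argv[1] if len(sys.argv) > 1 else "video-streaming.yml")
```

Because the second command uses sudo, you may be prompted for a password partway through.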

Attention

If the build fails, you can often resolve the errors by removing the existing images and containers and then building again. The following commands stop all running containers and remove all Docker images and unused data on the system, not only those that belong to EII:

$ docker kill $(docker ps -q)
$ docker rmi $(docker images -a -q)
$ docker system prune -a

Attention

If deploying behind a network proxy, update the system configuration accordingly. Typical configuration files include:

- ~/.docker/config.json
- /etc/systemd/system/docker.service.d/https-proxy.conf
- /etc/systemd/system/docker.service.d/http-proxy.conf
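For reference, a short Python sketch can generate the contents of both kinds of files listed above. The [Service] / Environment= drop-in format and the proxies.default keys (httpProxy, httpsProxy, noProxy) in ~/.docker/config.json follow the Docker documentation. proxy.example.com is a placeholder. Adding the target system's own address to NO_PROXY, so that build steps reach the PKG_SRC web server directly, is an assumption about your network. The sketch only renders content, because writing files under /etc/systemd requires root.

```python
import json
from typing import Any, Dict


def systemd_dropin(variable: str, proxy_url: str, no_proxy: str) -> str:
    """Render a docker.service.d drop-in that exports one proxy variable to dockerd."""
    return (
        "[Service]\n"
        f'Environment="{variable}={proxy_url}"\n'
        f'Environment="NO_PROXY={no_proxy}"\n'
    )


def merge_client_config(existing: Dict[str, Any], http_proxy: str,
                        https_proxy: str, no_proxy: str) -> Dict[str, Any]:
    """Return a copy of ~/.docker/config.json content with default proxy settings."""
    merged = dict(existing)  # keep other keys, such as "auths"
    proxies = dict(merged.get("proxies", {}))
    proxies["default"] = {
        "httpProxy": http_proxy,
        "httpsProxy": https_proxy,
        "noProxy": no_proxy,
    }
    merged["proxies"] = proxies
    return merged


if __name__ == "__main__":
    proxy = "http://proxy.example.com:3128"  # placeholder: use your proxy
    no_proxy = "localhost,127.0.0.1"         # assumption: add the target system's IP
    print(systemd_dropin("HTTP_PROXY", proxy, no_proxy))
    print(systemd_dropin("HTTPS_PROXY", proxy, no_proxy))
    print(json.dumps(merge_client_config({}, proxy, proxy, no_proxy), indent=2))
```

After writing the drop-in files, run sudo systemctl daemon-reload and then sudo systemctl restart docker so that the daemon picks up the new environment.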

For more information on deploying behind a network proxy, refer to the following guides:

If connection issues persist, update the resolver configuration. Run the following command to replace /etc/resolv.conf with a symbolic link to the resolver configuration managed by systemd-resolved:

$ sudo ln -sf /run/systemd/resolve/resolv.conf /etc/resolv.conf

Deploy and Verify Intel® EII scenario

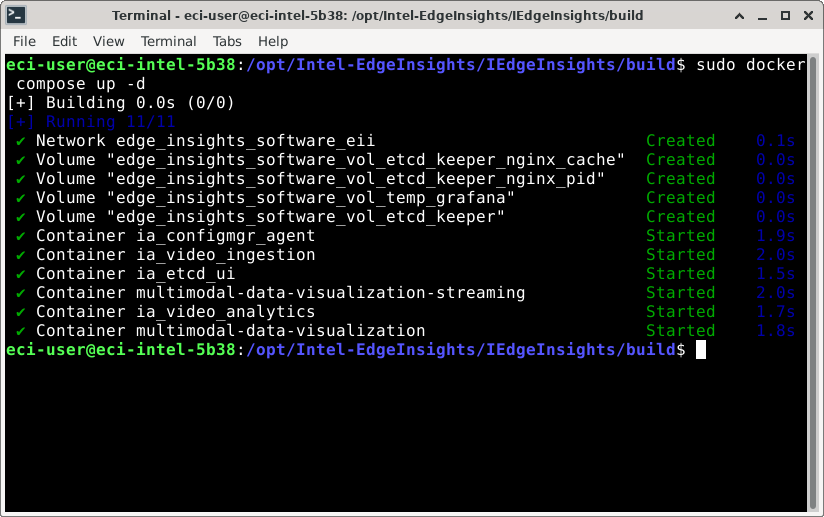

Deploy EII scenario

$ sudo docker compose up -d

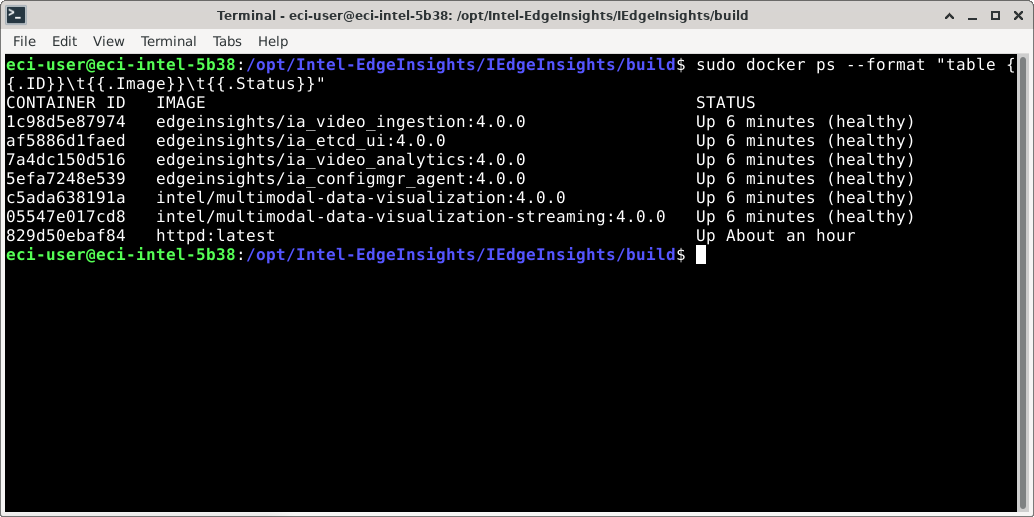

Verify correct deployment

After deploying the EII scenario, a number of microservices should be running on the system. To check their status, run the following command:

$ docker ps

The command outputs a table of the currently running microservices. Examine the STATUS column and verify that each microservice reports (healthy).

Note: When deployed on a headless (non-graphical) system, microservices that make graphical windowing API calls may fail.
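You can automate the STATUS check. The following Python sketch is hypothetical and is not part of EII. It asks docker ps for only the name and status columns through its --format Go template. It then lists every container whose status does not contain (healthy). Containers that are still starting report (health: starting), so the sketch lists them as well. It exits with status 1 if no containers are running or any container is not healthy.

```python
import subprocess
import sys
from typing import List


def not_healthy(ps_output: str) -> List[str]:
    """Return names of containers whose status lacks "(healthy)".

    ps_output is expected to hold one "NAME<TAB>STATUS" line per container.
    """
    names = []
    for line in ps_output.splitlines():
        if not line.strip():
            continue  # skip blank lines
        name, _, status = line.partition("\t")
        if "(healthy)" not in status:
            names.append(name)
    return names


def docker_ps() -> str:
    """Run docker ps and return NAME<TAB>STATUS lines (may require sudo)."""
    result = subprocess.run(
        ["docker", "ps", "--format", "{{.Names}}\t{{.Status}}"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout


if __name__ == "__main__":
    output = docker_ps()
    if not output.strip():
        print("no running containers")
        sys.exit(1)
    bad = not_healthy(output)
    for name in bad:
        print("not healthy:", name)
    sys.exit(1 if bad else 0)
```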