eBPF Offload Native Mode XDP on Intel® Ethernet Linux Driver

Intel® ECI enables Linux eXpress Data Path (XDP) Native Mode (that is, XDP_FLAGS_DRV_MODE) on Intel® Ethernet Linux drivers across multiple industrial-grade Ethernet controllers:

- [Ethernet PCI 8086:7aac and 8086:7aad] 13th and 12th Gen Intel® Core™ S-Series [Raptor Lake and Alder Lake] Ethernet GbE Time-Sensitive Network Controller
- [Ethernet PCI 8086:a0ac] 11th Gen Intel® Core™ U-Series and P-Series [Tiger Lake] Ethernet GbE Time-Sensitive Network Controller
- [Ethernet PCI 8086:4b32 and 8086:4ba0] Intel® Atom® x6000 Series [Elkhart Lake] Ethernet GbE Time-Sensitive Network Controller
- [Ethernet PCI 8086:15f2] Intel® Ethernet Controller I225-LM for Time-Sensitive Networking (TSN)
- [Ethernet PCI 8086:125b] Intel® Ethernet Controller I226-LM for Time-Sensitive Networking (TSN)
- [Ethernet PCI 8086:157b, 8086:1533, …] Intel® Ethernet Controller I210-IT for Time-Sensitive Networking (TSN)
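To check whether a given interface sits on one of the controllers listed above, one option is to compare the PCI vendor and device IDs that Linux exposes in sysfs under /sys/class/net/<ifname>/device/. The following Python sketch does that; `controller_for` and `controller_for_interface` are hypothetical helpers, and their table contains only the IDs listed above (the elided I210 IDs are not filled in).

```python
from pathlib import Path
from typing import Optional

# PCI device IDs copied from the controller list above (vendor 0x8086 = Intel).
# The I210 entries are deliberately incomplete, matching the "…" in the list.
SUPPORTED = {
    "7aac": "13th/12th Gen Intel Core S-Series (Raptor Lake/Alder Lake) GbE TSN",
    "7aad": "13th/12th Gen Intel Core S-Series (Raptor Lake/Alder Lake) GbE TSN",
    "a0ac": "11th Gen Intel Core U/P-Series (Tiger Lake) GbE TSN",
    "4b32": "Intel Atom x6000 Series (Elkhart Lake) GbE TSN",
    "4ba0": "Intel Atom x6000 Series (Elkhart Lake) GbE TSN",
    "15f2": "Intel Ethernet Controller I225-LM",
    "125b": "Intel Ethernet Controller I226-LM",
    "157b": "Intel Ethernet Controller I210-IT",
    "1533": "Intel Ethernet Controller I210-IT",
}


def controller_for(vendor: str, device: str) -> Optional[str]:
    """Map sysfs-style IDs such as '0x8086' and '0x125b' to a controller name."""
    vendor = vendor.strip().lower().removeprefix("0x")
    device = device.strip().lower().removeprefix("0x")
    if vendor != "8086":
        return None
    return SUPPORTED.get(device)


def controller_for_interface(ifname: str) -> Optional[str]:
    """Read the PCI IDs of a network interface from sysfs (Linux only)."""
    dev = Path("/sys/class/net") / ifname / "device"
    try:
        vendor = (dev / "vendor").read_text()
        device = (dev / "device").read_text()
    except OSError:
        return None  # missing interface, or not a PCI device (for example veth, lo)
    return controller_for(vendor, device)
```

For example, `controller_for_interface("enp1s0")` returns the controller name, or `None` for virtual interfaces that have no PCI device.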

Install Linux BPFTool

Make sure that the running Linux distribution kernel provides the necessary XDP and BPF configuration:

    $ grep -E 'BPF|XDP' /boot/config-$(uname -r)

or, alternatively:

    $ zcat /proc/config.gz | grep -E 'BPF|XDP'

The output should be similar to the following:

    CONFIG_CGROUP_BPF=y
    CONFIG_BPF=y
    # CONFIG_BPF_LSM is not set
    CONFIG_BPF_SYSCALL=y
    CONFIG_ARCH_WANT_DEFAULT_BPF_JIT=y
    # CONFIG_BPF_JIT_ALWAYS_ON is not set
    CONFIG_BPF_JIT_DEFAULT_ON=y
    # CONFIG_BPF_UNPRIV_DEFAULT_OFF is not set
    # CONFIG_BPF_PRELOAD is not set
    CONFIG_XDP_SOCKETS=y
    # CONFIG_XDP_SOCKETS_DIAG is not set
    CONFIG_IPV6_SEG6_BPF=y
    # CONFIG_NETFILTER_XT_MATCH_BPF is not set
    # CONFIG_BPFILTER is not set
    CONFIG_NET_CLS_BPF=m
    CONFIG_NET_ACT_BPF=m
    CONFIG_BPF_JIT=y
    # CONFIG_BPF_STREAM_PARSER is not set
    CONFIG_LWTUNNEL_BPF=y
    CONFIG_HAVE_EBPF_JIT=y
    CONFIG_BPF_EVENTS=y
    # CONFIG_BPF_KPROBE_OVERRIDE is not set
    # CONFIG_TEST_BPF is not set
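The same check can be scripted. This is a minimal Python sketch that parses a kernel .config and reports which options from an assumed required set (CONFIG_BPF, CONFIG_BPF_SYSCALL, CONFIG_BPF_JIT, and CONFIG_XDP_SOCKETS, picked from the listing above) are not enabled; `parse_kconfig`, `missing_options`, and `read_running_kconfig` are illustrative names, not part of any ECI tool.

```python
import gzip
import os
from pathlib import Path

# Assumed minimum for Native mode XDP plus AF_XDP; adjust to your use case.
REQUIRED = ("CONFIG_BPF", "CONFIG_BPF_SYSCALL", "CONFIG_BPF_JIT", "CONFIG_XDP_SOCKETS")


def parse_kconfig(text: str) -> dict:
    """Return {option: value} for CONFIG_X=... lines; '# ... is not set' lines are skipped."""
    options = {}
    for line in text.splitlines():
        line = line.strip()
        if not line.startswith("CONFIG_") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        options[key] = value
    return options


def missing_options(options: dict, required=REQUIRED) -> list:
    """List required options that are neither built in (=y) nor modular (=m)."""
    return [opt for opt in required if options.get(opt) not in ("y", "m")]


def read_running_kconfig() -> str:
    """Read /boot/config-$(uname -r), falling back to /proc/config.gz."""
    boot = Path(f"/boot/config-{os.uname().release}")
    if boot.exists():
        return boot.read_text()
    with gzip.open("/proc/config.gz", "rt") as fh:
        return fh.read()
```

On the target, `missing_options(parse_kconfig(read_running_kconfig()))` returns an empty list when all assumed options are enabled.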

Install bpftool, either the version provided by ECI for the exact Linux Intel® kernel tree, or the Linux distribution mainline bpftool/stable package:

Install from individual Deb package

    $ sudo apt search bpftool

For example, a Debian distribution set up with the ECI repository lists the following:

    Sorting... Done
    Full Text Search... Done
    bpftool/stable 7.1.0+6.1.76-1 amd64
      Inspection and simple manipulation of BPF programs and maps

    bpftool-6.1/unknown,now 6.1.59-rt16-r0-0eci1 amd64 [installed]
      Inspection and simple manipulation of BPF programs and maps

Note: Intel® ECI ensures that Linux XDP support always matches the Linux Intel® LTS branches (for example, there is no delta between kernel/bpf and tools/bpf).

Install bpftool for Debian 12 (Bookworm):

    $ sudo apt install bpftool-6.1

Install bpftool for Canonical® Ubuntu® 22.04 (Jammy Jellyfish):

    $ sudo apt install bpftool-5.15

Install from individual RPM package

    $ sudo dnf install bpftool
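When provisioning several nodes, the package name can be picked from /etc/os-release. The sketch below is a hypothetical helper that maps the two distributions named above to their bpftool package and assumes that any other distribution uses the generic bpftool package.

```python
from typing import Dict

# Package names taken from the install commands above; everything else
# falls back to the generic "bpftool" package (an assumption).
BPFTOOL_PACKAGES = {
    ("debian", "12"): "bpftool-6.1",
    ("ubuntu", "22.04"): "bpftool-5.15",
}


def parse_os_release(text: str) -> Dict[str, str]:
    """Parse os-release KEY=value lines, stripping optional quotes."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        fields[key] = value.strip().strip('"').strip("'")
    return fields


def bpftool_package(os_release_text: str) -> str:
    """Choose the bpftool package name for the distribution described by os-release."""
    fields = parse_os_release(os_release_text)
    key = (fields.get("ID", ""), fields.get("VERSION_ID", ""))
    return BPFTOOL_PACKAGES.get(key, "bpftool")
```

For example, `bpftool_package(open("/etc/os-release").read())` returns the name to pass to apt or dnf.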

Install Linux XDP Tools

Make sure that both the kernel and bpftool support XDP, as described in Install Linux BPFTool.

Then install xdp-tools, either the version provided by ECI or the one from the Linux distribution mainline:

Install from individual Deb package

Install xdp-tools for Debian 12 (Bookworm):

    $ sudo apt install xdp-tools

Note: Intel® ECI also makes xdp-tools available on Canonical® Ubuntu® 22.04 (Jammy Jellyfish).

To list the available package:

    $ sudo apt search xdp-tools

For example, a Debian distribution set up with the ECI repository lists the following:

    Sorting... Done
    Full Text Search... Done
    xdp-tools/unknown,unknown,now 1.2.9-1~bpo11+1 amd64 [installed,automatic]
      library and utilities for use with XDP

Install from individual RPM package

    $ sudo dnf install xdp-tools

Usage

The Linux xdp-project community xdpdump program provides a stable reference for understanding and experimenting with network packet processing using BPF programs and the XDP APIs.

    $ xdpdump -h
    Usage: xdpdump [options]

     XDPDump tool to dump network traffic

    Options:
         --rx-capture <mode>        Capture point for the rx direction (valid values: entry,exit)
     -D, --list-interfaces          Print the list of available interfaces
         --load-xdp-mode <mode>     Mode used for --load-xdp-mode, default native (valid values: native,skb,hw,unspecified)
         --load-xdp-program         Load XDP trace program if no XDP program is loaded
     -i, --interface <ifname>       Name of interface to capture on
         --perf-wakeup <events>     Wake up xdpdump every <events> packets
     -p, --program-names <prog>     Specific program to attach to
     -P, --promiscuous-mode         Open interface in promiscuous mode
     -s, --snapshot-length <snaplen> Minimum bytes of packet to capture
         --use-pcap                 Use legacy pcap format for XDP traces
     -w, --write <file>             Write raw packets to pcap file
     -x, --hex                      Print the full packet in hex
     -v, --verbose                  Enable verbose logging (-vv: more verbose)
         --version                  Display version information
     -h, --help                     Show this help

Note: Every time a program is loaded or unloaded in Linux eXpress Data Path (XDP) Native Mode, the Intel® Ethernet Linux driver flushes the TX and RX hardware queues of the Ethernet controller.
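The --load-xdp-mode values listed above correspond to the XDP attach flags defined in the kernel UAPI header linux/if_link.h; the skb and native values match the (1 << 1) and (1 << 2) flags used in the BCC example further down this page. The Python sketch below records that mapping and builds an xdpdump command line. `xdpdump_argv` is a hypothetical helper, and always adding --load-xdp-program (so that xdpdump attaches its own trace program when none is loaded) is a choice of this sketch.

```python
from typing import List

# Attach-mode flags as defined in linux/if_link.h.
XDP_FLAGS_SKB_MODE = 1 << 1  # "Generic mode" XDP
XDP_FLAGS_DRV_MODE = 1 << 2  # "Native mode" XDP, hooked in the driver
XDP_FLAGS_HW_MODE = 1 << 3   # program offloaded to NIC hardware

# xdpdump --load-xdp-mode value -> attach flag (0 lets the kernel pick a mode).
MODE_FLAGS = {
    "native": XDP_FLAGS_DRV_MODE,
    "skb": XDP_FLAGS_SKB_MODE,
    "hw": XDP_FLAGS_HW_MODE,
    "unspecified": 0,
}


def xdpdump_argv(ifname: str, mode: str = "native") -> List[str]:
    """Build an xdpdump command line that loads its trace program in `mode`."""
    if mode not in MODE_FLAGS:
        raise ValueError(f"invalid mode {mode!r}; expected one of {sorted(MODE_FLAGS)}")
    return ["xdpdump", "-i", ifname, "--load-xdp-program", "--load-xdp-mode", mode]
```

The resulting list can be passed to `subprocess.run(..., check=True)` when running as root.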

Sanity Check #1: Load and Execute eBPF Offload Program with “Generic mode” XDP

Install both xdpdump and iPerf (The ultimate speed test tool for TCP, UDP and SCTP):

Install from individual Deb package

    $ sudo apt install xdp-tools iperf3

Install from individual RPM package

    $ sudo dnf install xdp-tools iperf3

Generate UDP traffic between talker and listener:

Set up an L4-level UDP listener on Ethernet device enp1s0 with an IPv4 address, for example 192.168.1.206:

    $ ip addr add 192.168.1.206/24 brd 192.168.1.255 dev enp1s0
    $ iperf3 -s
    -----------------------------------------------------------
    Server listening on 5201
    -----------------------------------------------------------

Set up an L4-level UDP talker with a 1448-byte payload on another node's Ethernet device enp1s0, using a different IPv4 address, for example 192.168.1.203:

    $ ip addr add 192.168.1.203/24 brd 192.168.1.255 dev enp1s0
    $ iperf3 -c 192.168.1.206 -t 600 -b 0 -u -l 1448
    Connecting to host 192.168.1.206, port 5201
    [  5] local 192.168.1.203 port 36974 connected to 192.168.1.206 port 5201
    [ ID] Interval           Transfer     Bitrate         Total Datagrams
    [  5]   0.00-1.00   sec   114 MBytes   957 Mbits/sec  82590
    [  5]   1.00-2.00   sec   114 MBytes   956 Mbits/sec  82540
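The iperf3 numbers above can be cross-checked with a little arithmetic: 82590 datagrams of 1448 bytes in one second is about 957 Mbit/s, and a 1 Gbit/s link can carry at most about 82,560 such datagrams per second, so the talker is effectively saturating the link. The Python sketch below assumes IPv4 without options, untagged Ethernet, and 20 bytes of preamble, start-of-frame delimiter, and inter-frame gap per frame; the function names are illustrative.

```python
# Per-packet overheads in bytes (assumes IPv4 without options and no VLAN tag).
UDP_HEADER = 8
IPV4_HEADER = 20
ETH_HEADER = 14
ETH_FCS = 4
PREAMBLE_SFD_IFG = 8 + 12  # preamble + start-of-frame delimiter, plus inter-frame gap


def wire_bytes(udp_payload: int) -> int:
    """Bytes one UDP datagram occupies on the wire, including L1 overhead."""
    frame = udp_payload + UDP_HEADER + IPV4_HEADER + ETH_HEADER + ETH_FCS
    return max(frame, 64) + PREAMBLE_SFD_IFG  # frames are padded to 64 bytes minimum


def max_datagrams_per_sec(udp_payload: int, link_bps: float = 1e9) -> float:
    """Theoretical datagram rate at line rate for the given payload size."""
    return link_bps / (wire_bytes(udp_payload) * 8)


def goodput_mbps(datagrams: int, udp_payload: int, seconds: float = 1.0) -> float:
    """UDP payload rate in Mbit/s, the figure iperf3 reports as Bitrate."""
    return datagrams * udp_payload * 8 / seconds / 1e6
```

With a 1448-byte payload each datagram occupies 1514 bytes on the wire, which is where the limit of roughly 82,560 datagrams per second comes from.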

Execute the precompiled BPF XDP program loaded on the device in “Generic mode” XDP (that is, XDP_FLAGS_SKB_MODE) with successful XDP_PASS and XDP_DROP actions:

    $ xdpdump -i enp1s0 --load-xdp-mode skb
    Current rlimit 8388608 already >= minimum 1048576
    WARNING: Specified interface does not have an XDP program loaded, capturing in legacy mode!
    listening on enp1s0, link-type EN10MB (Ethernet), capture size 262144 bytes
    1712162549.137596: packet size 68 bytes on if_name "enp1s0"
    1712162550.137605: packet size 68 bytes on if_name "enp1s0"
    1712162551.137612: packet size 68 bytes on if_name "enp1s0"

The WARNING line indicates that no XDP program was attached to enp1s0 when xdpdump started, so it captured in legacy mode. The --load-xdp-mode option sets the mode that xdpdump uses when it loads its own trace program, which it only does when --load-xdp-program is also given.

Press Ctrl + C to unload the XDP program:

    ^C
    3 packets captured
    0 packets dropped by kernel
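To check a capture like this programmatically, for example to compare the captured count with the traffic you generated, the xdpdump text output can be parsed. This is a sketch that assumes the per-packet and summary line formats printed above; `parse_xdpdump` and `inter_arrival` are illustrative names.

```python
import re
from typing import Dict, List, Tuple

# Per-packet line, for example:
#   1712162549.137596: packet size 68 bytes on if_name "enp1s0"
PACKET_RE = re.compile(r'^(\d+\.\d+): packet size (\d+) bytes on if_name "([^"]+)"')
# Summary lines printed after Ctrl + C, for example "3 packets captured".
SUMMARY_RE = re.compile(r"(\d+) packets (captured|dropped by kernel)")

Packet = Tuple[float, int, str]  # (timestamp, size in bytes, interface)


def parse_xdpdump(text: str) -> Tuple[List[Packet], Dict[str, int]]:
    """Return the captured packets and the summary counters."""
    packets: List[Packet] = []
    summary: Dict[str, int] = {}
    for raw in text.splitlines():
        line = raw.strip()
        m = PACKET_RE.match(line)
        if m:
            packets.append((float(m.group(1)), int(m.group(2)), m.group(3)))
            continue
        m = SUMMARY_RE.search(line)
        if m:
            summary[m.group(2)] = int(m.group(1))
    return packets, summary


def inter_arrival(packets: List[Packet]) -> List[float]:
    """Seconds between consecutive captured packets."""
    times = [t for t, _, _ in packets]
    return [b - a for a, b in zip(times, times[1:])]
```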

Sanity Check #2: Load and Execute eBPF Offload Program with “Native mode” XDP

Generate UDP traffic between the talker and listener as in Sanity Check #1.

Execute the precompiled BPF XDP program loaded on the device in “Native mode” XDP with successful XDP_PASS and XDP_DROP actions:

    $ xdpdump -i enp1s0 --load-xdp-mode native
    Current rlimit 8388608 already >= minimum 1048576
    WARNING: Specified interface does not have an XDP program loaded, capturing in legacy mode!
    listening on enp1s0, link-type EN10MB (Ethernet), capture size 262144 bytes
    1712162563.137741: packet size 68 bytes on if_name "enp1s0"
    1712162564.137746: packet size 68 bytes on if_name "enp1s0"
    1712162565.137762: packet size 68 bytes on if_name "enp1s0"

Press Ctrl + C to unload the XDP program:

    ^C
    3 packets captured
    0 packets dropped by kernel

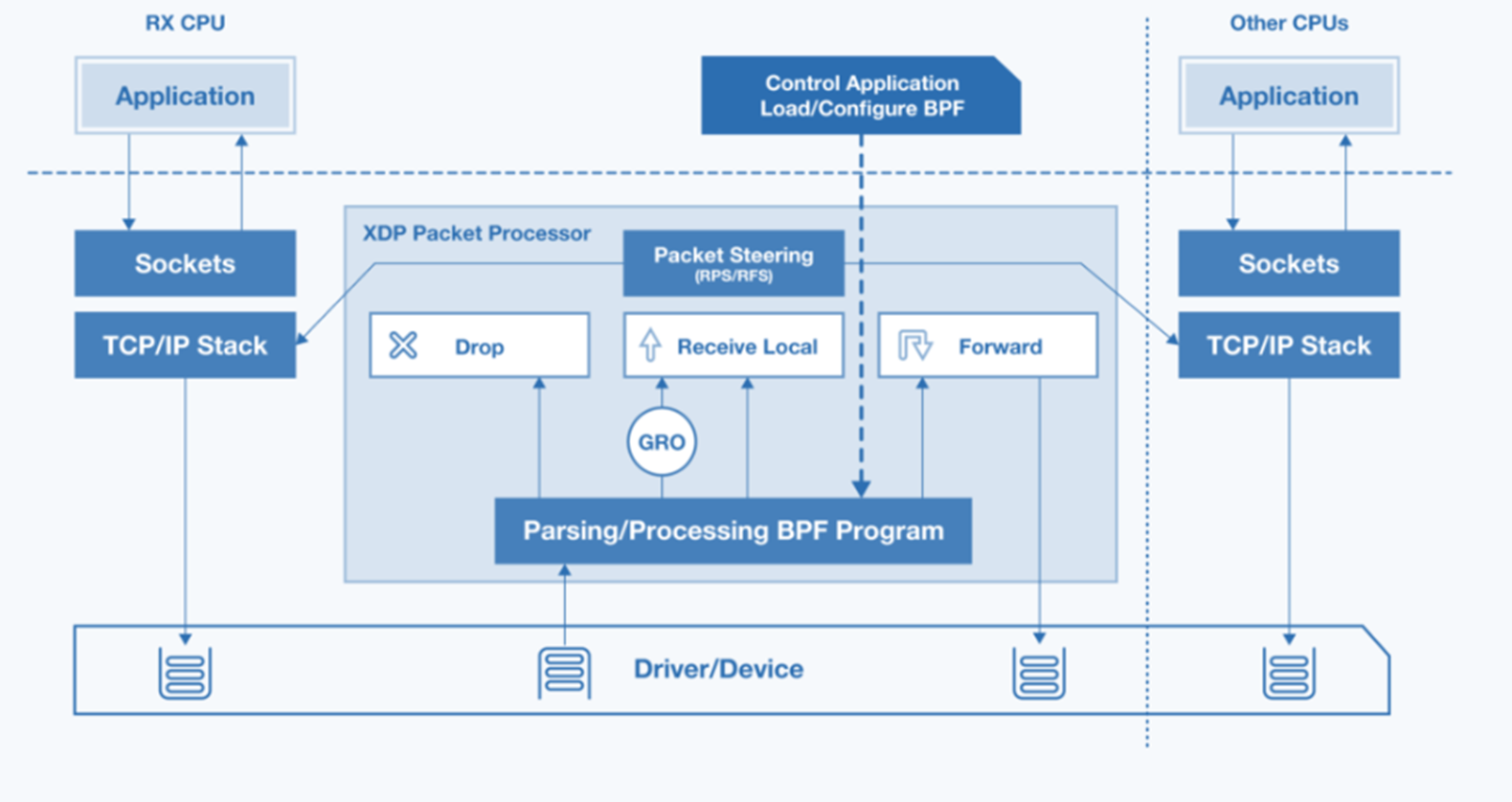

Linux eXpress Data Path (XDP)

The Native mode XDP of the Intel® Linux Ethernet drivers offers a standardized Linux API for low-overhead Ethernet Layer 2 packet processing (encoding, decoding, filtering, and so on) of industrial protocols such as UADP ETH, EtherCAT, or PROFINET RT/IRT, without any prior knowledge of the Intel® Ethernet Controller architecture.

Intel® ECI can leverage Native mode XDP with both Linux eBPF program offload (XDP) and the Traffic Control (TC) BPF classifier (cls_bpf) in industrial networking usage models.

The Linux AF_PACKET socket with QDisc has performance limitations. The following table compares both design approaches.

| | AF_PACKET socket with QDisc | AF_XDP socket/eBPF offload |
|---|---|---|
| Linux network stack (TCP/IP, UDP/IP) | Yes | BPF runtime program/library, idg_xdp_ring direct DMA |
| OSI layer L4 (protocol) to L7 (application) | Yes | No |
| Number of network packets copied between kernel and user space | Several (skb_data memcpy) | None in UMEM zero-copy mode; a few in UMEM copy mode |
| IEEE 802.1Q-2018 Enhancements for Scheduled Traffic (EST) and Frame Preemption (FPE) | Standardized API for hardware offload | Customized hardware offload |
| Deterministic Ethernet network cycle-time requirement | Moderate | Tight |
| Per-packet RX-offload timestamp | Yes | Yes |
| Per-packet TX-offload launch time (TBS) | Yes, AF_PACKET SO_TXTIME cmsg | No |
| IEEE 802.1AS L2/PTP RX and TX hardware offload | Yes (L2/PTP) | Yes (L2/PTP) |

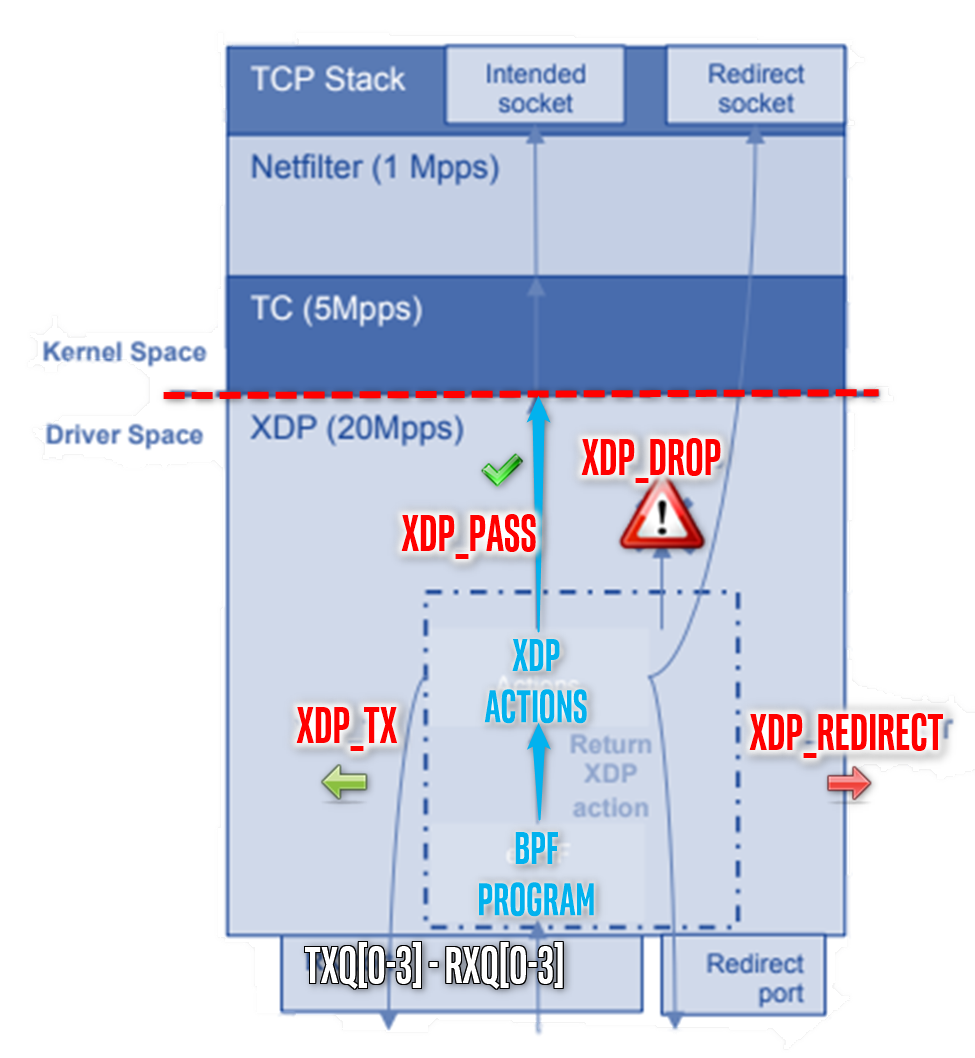

The Intel® Ethernet Controller Linux drivers handle the most commonly used XDP actions, allowing Ethernet L2 packets traversing the networking stack to be reflected, filtered, or redirected with the lowest latency overhead (for example, DMA-accelerated transfer with limited memcpy in XDP_COPY mode, or with no memcpy at all in XDP_ZEROCOPY mode):

eBPF programs classify or modify traffic and return one of the following XDP actions:

- XDP_PASS
- XDP_DROP
- XDP_TX
- XDP_REDIRECT
- XDP_ABORTED

Note: cls_bpf in Linux Traffic Control (TC) works in the same manner, in kernel space.
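The decision logic of such a program can be prototyped outside the kernel. The following pure-Python model is not eBPF and does not run in the driver; it only mirrors what an XDP program does: parse headers with explicit bounds checks and return one of the actions above, numbered as in enum xdp_action in linux/bpf.h. The drop rule (UDP destination port 5201, the iperf3 port used in the sanity checks) and the use of XDP_ABORTED for malformed frames are illustrative assumptions.

```python
import struct
from enum import IntEnum


class XdpAction(IntEnum):
    """XDP return codes, numbered as in enum xdp_action (linux/bpf.h)."""
    XDP_ABORTED = 0
    XDP_DROP = 1
    XDP_PASS = 2
    XDP_TX = 3
    XDP_REDIRECT = 4


ETH_HLEN = 14
ETH_P_IP = 0x0800
IPPROTO_UDP = 17


def classify(frame: bytes, drop_udp_port: int = 5201) -> XdpAction:
    """Model of an XDP filter that drops IPv4/UDP traffic to one destination port.

    A real XDP program performs the same checks against data_end before every
    header access; otherwise the BPF verifier rejects it.
    """
    if len(frame) < ETH_HLEN:
        return XdpAction.XDP_ABORTED  # truncated Ethernet header
    (ethertype,) = struct.unpack_from("!H", frame, 12)
    if ethertype != ETH_P_IP:
        return XdpAction.XDP_PASS  # for example EtherCAT (0x88A4) or PROFINET (0x8892)
    if len(frame) < ETH_HLEN + 20:
        return XdpAction.XDP_ABORTED  # truncated IPv4 header
    ihl = (frame[ETH_HLEN] & 0x0F) * 4  # IPv4 header length in bytes
    if ihl < 20:
        return XdpAction.XDP_ABORTED
    if frame[ETH_HLEN + 9] != IPPROTO_UDP:
        return XdpAction.XDP_PASS
    if len(frame) < ETH_HLEN + ihl + 8:
        return XdpAction.XDP_ABORTED  # truncated UDP header
    (dport,) = struct.unpack_from("!H", frame, ETH_HLEN + ihl + 2)
    return XdpAction.XDP_DROP if dport == drop_udp_port else XdpAction.XDP_PASS
```

In a real program, XDP_ABORTED also fires the xdp:xdp_exception tracepoint, which makes it a reasonable marker for the error path while prototyping.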

The following table summarizes the eBPF offload Native mode XDP support available on the Intel® Linux Ethernet controllers.

| eBPF offload | v5.1x.y/v6.1.y APIs | Intel® I210/igb.ko | Intel® GbE/stmmac.ko | Intel® I22x-LM/igc.ko |
|---|---|---|---|---|
| XDP program features | XDP_DROP | Yes | Yes | Yes |
| | XDP_PASS | Yes | Yes | Yes |
| | XDP_TX | Yes | Yes | Yes |
| | XDP_REDIRECT | Yes | Yes | Yes |
| | XDP_ABORTED | Yes | Yes | Yes |
| | Packet read access | Yes | Yes | Yes |
| | Conditional statements | Yes | Yes | Yes |
| | bpf_xdp_adjust_head() | Yes | Yes | Yes |
| | bpf_get_prandom_u32() | Yes | Yes | Yes |
| | bpf_perf_event_output() | Yes | Yes | Yes |
| | Partial offload | Yes | Yes | Yes |
| | RSS rx_queue_index select | Yes | Yes | Yes |
| | bpf_xdp_adjust_tail() | Yes | Yes | Yes |
| XDP maps features | Offload ownership for maps | Yes | Yes | Yes |
| | Hash maps | Yes | Yes | Yes |
| | Array maps | Yes | Yes | Yes |
| | bpf_map_lookup_elem() | Yes | Yes | Yes |
| | bpf_map_update_elem() | Yes | Yes | Yes |
| | bpf_map_delete_elem() | Yes | Yes | Yes |
| | Atomic `__sync_fetch_and_add` | untested | untested | untested |
| | Map sharing between ports | untested | untested | untested |
| uarch optimization features | Localized packet cache | untested | untested | untested |
| | 32-bit BPF support | untested | untested | untested |
| | Localized maps | untested | untested | untested |

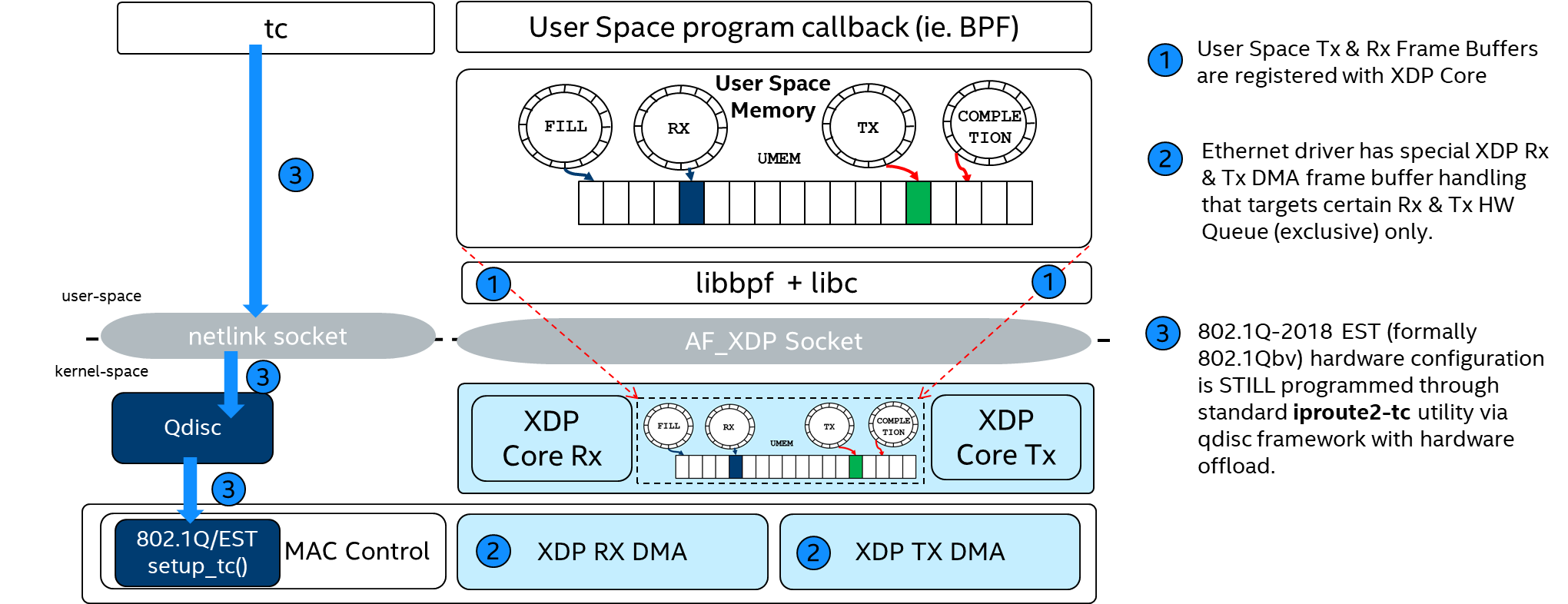

AF_XDP Socket (CONFIG_XDP_SOCKETS)

An AF_XDP socket (XSK) is created with the normal socket() system call. Two rings are associated with each XSK: the RX ring and the TX ring. A socket can receive packets on the RX ring and send packets on the TX ring. These rings are registered and sized with the setsockopt options XDP_RX_RING and XDP_TX_RING, respectively. Each socket must have at least one of these rings. An RX or TX descriptor ring points to a data buffer in a memory area called a UMEM. RX and TX can share the same UMEM so that a packet does not have to be copied between RX and TX. Moreover, if a packet needs to be kept for a while due to a possible retransmit, the descriptor that points to that packet can be changed to point to another buffer and reused right away. This avoids copying data.
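The descriptor and UMEM relationship described above can be illustrated with a small Python model. `XdpDesc` mirrors the layout of struct xdp_desc from linux/if_xdp.h; the deques, the tiny UMEM, and the helper functions are simplifications for illustration only (no socket is opened, and the fill and completion rings that a real UMEM also uses are omitted).

```python
import ctypes
from collections import deque


class XdpDesc(ctypes.Structure):
    """Mirror of struct xdp_desc (linux/if_xdp.h): one RX or TX ring entry."""
    _fields_ = [
        ("addr", ctypes.c_uint64),    # offset of the packet inside the UMEM
        ("len", ctypes.c_uint32),     # packet length in bytes
        ("options", ctypes.c_uint32),
    ]


FRAME_SIZE = 4096  # xdpsock default frame size (see -f in its help text)
NUM_FRAMES = 8     # tiny UMEM, for illustration only

umem = bytearray(FRAME_SIZE * NUM_FRAMES)  # stands in for the mmap()ed UMEM area
rx_ring: deque = deque()  # real rings are mmap()ed producer/consumer arrays
tx_ring: deque = deque()


def receive(frame_idx: int, payload: bytes) -> None:
    """Model the kernel writing a packet into a UMEM frame and posting an RX descriptor."""
    if not 0 <= frame_idx < NUM_FRAMES or len(payload) > FRAME_SIZE:
        raise ValueError("packet does not fit in the UMEM frame")
    addr = frame_idx * FRAME_SIZE
    umem[addr:addr + len(payload)] = payload
    rx_ring.append(XdpDesc(addr=addr, len=len(payload), options=0))


def forward_rx_to_tx() -> int:
    """Move descriptors from RX to TX: only (addr, len) move, the packet bytes stay put."""
    moved = 0
    while rx_ring:
        tx_ring.append(rx_ring.popleft())
        moved += 1
    return moved
```

Forwarding moves only the 16-byte descriptor; the packet bytes stay in the same UMEM frame, which is why sharing one UMEM between RX and TX avoids a copy.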

The kernel option CONFIG_XDP_SOCKETS allows the igb.ko, igc.ko, and stmmac-pci.ko Linux drivers to hand packets to an eBPF XDP program that transfers them up to user space through AF_XDP.

You can install all officially supported Linux BPF sample programs as Deb packages from the ECI repository.


Set up the ECI repository, then run either of the following commands to install this component:

Install from individual Deb package

For example, on Debian 12 (Bookworm), run the following command to install the Linux BPF samples (xdpsock) and the matching bpftool:

    $ sudo apt install xdpsock bpftool-6.1

Alternatively, on Canonical® Ubuntu® 22.04 (Jammy Jellyfish), run:

    $ sudo apt install xdpsock bpftool-5.15

Note: From Linux v5.15 onward, using the generated vmlinux.h header (CONFIG_DEBUG_INFO_BTF=y) is recommended for BPF programs, to improve portability when libbpf enables Compile Once, Run Everywhere (CO-RE). The header contains all the type definitions that the running Linux Intel® 2021/lts kernel uses in its own source code:

    $ bpftool btf dump file /sys/kernel/btf/vmlinux format c > /tmp/vmlinux.h

Install from individual RPM package

This package is not yet available for Red Hat® Enterprise Linux®.

Usage

The Linux xdp-project community xdpsock program provides a stable reference to understand and experiment with the AF_XDP socket API.

    $ /opt/xdp/bpf-examples/af-xdp/xdpsock -h
    Usage: xdpsock [OPTIONS]
    Options:
      -r, --rxdrop          Discard all incoming packets (default)
      -t, --txonly          Only send packets
      -l, --l2fwd           MAC swap L2 forwarding
      -i, --interface=n     Run on interface n
      -q, --queue=n         Use queue n (default 0)
      -p, --poll            Use poll syscall
      -S, --xdp-skb=n       Use XDP skb-mod
      -N, --xdp-native=n    Enforce XDP native mode
      -n, --interval=n      Specify statistics update interval (default 1 sec).
      -O, --retries=n       Specify time-out retries (1s interval) attempt (default 3).
      -z, --zero-copy       Force zero-copy mode.
      -c, --copy            Force copy mode.
      -m, --no-need-wakeup  Turn off use of driver need wakeup flag.
      -f, --frame-size=n    Set the frame size (must be a power of two in aligned mode, default is 4096).
      -u, --unaligned       Enable unaligned chunk placement
      -M, --shared-umem     Enable XDP_SHARED_UMEM (cannot be used with -R)
      -d, --duration=n      Duration in secs to run command. Default: forever.
      -w, --clock=CLOCK     Clock NAME (default MONOTONIC).
      -b, --batch-size=n    Batch size for sending or receiving packets. Default: 64
      -C, --tx-pkt-count=n  Number of packets to send. Default: Continuous packets.
      -s, --tx-pkt-size=n   Transmit packet size. (Default: 64 bytes) Min size: 64, Max size 4096.
      -P, --tx-pkt-pattern=nPacket fill pattern. Default: 0x12345678
      -V, --tx-vlan         Send VLAN tagged packets (For -t|--txonly)
      -J, --tx-vlan-id=n    Tx VLAN ID [1-4095]. Default: 1 (For -V|--tx-vlan)
      -K, --tx-vlan-pri=n   Tx VLAN Priority [0-7]. Default: 0 (For -V|--tx-vlan)
      -G, --tx-dmac=<MAC>   Dest MAC addr of TX frame in aa:bb:cc:dd:ee:ff format (For -V|--tx-vlan)
      -H, --tx-smac=<MAC>   Src MAC addr of TX frame in aa:bb:cc:dd:ee:ff format (For -V|--tx-vlan)
      -T, --tx-cycle=n      Tx cycle time in micro-seconds (For -t|--txonly).
      -y, --tstamp          Add time-stamp to packet (For -t|--txonly).
      -W, --policy=POLICY   Schedule policy. Default: SCHED_OTHER
      -U, --schpri=n        Schedule priority. Default: 0
      -x, --extra-stats     Display extra statistics.
      -Q, --quiet           Do not display any stats.
      -a, --app-stats       Display application (syscall) statistics.
      -I, --irq-string      Display driver interrupt statistics for interface associated with irq-string.
      -B, --busy-poll       Busy poll.
      -R, --reduce-cap      Use reduced capabilities (cannot be used with -M)
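The copy and zero-copy sanity checks below differ only in the -c and -z options. As an assumption based on the upstream xdpsock sample (check the sample source shipped with your version), those options translate into bind() flags from linux/if_xdp.h, while -N and -S select attach flags from linux/if_link.h. The hypothetical `xdpsock_flags` helper below models that translation for a few short options.

```python
from typing import Sequence, Tuple

# sxdp_flags values passed to bind(), from linux/if_xdp.h.
XDP_SHARED_UMEM = 1 << 0
XDP_COPY = 1 << 1
XDP_ZEROCOPY = 1 << 2
XDP_USE_NEED_WAKEUP = 1 << 3

# Attach-mode flags, from linux/if_link.h.
XDP_FLAGS_SKB_MODE = 1 << 1
XDP_FLAGS_DRV_MODE = 1 << 2


def xdpsock_flags(args: Sequence[str]) -> Tuple[int, int]:
    """Return (attach_flags, bind_flags) for a subset of xdpsock short options (assumed mapping)."""
    attach, bind = 0, XDP_USE_NEED_WAKEUP  # need-wakeup stays on unless -m is given
    for arg in args:
        if arg == "-S":
            attach |= XDP_FLAGS_SKB_MODE
            bind |= XDP_COPY  # generic (skb) mode cannot use zero-copy
        elif arg == "-N":
            attach |= XDP_FLAGS_DRV_MODE
        elif arg == "-c":
            bind |= XDP_COPY
        elif arg == "-z":
            bind |= XDP_ZEROCOPY
        elif arg == "-m":
            bind &= ~XDP_USE_NEED_WAKEUP
    if bind & XDP_COPY and bind & XDP_ZEROCOPY:
        # The kernel rejects a bind() that requests both modes.
        raise ValueError("XDP_COPY and XDP_ZEROCOPY are mutually exclusive")
    return attach, bind
```

For example, the options of Sanity Check #3 (-N -c) give Native mode attach flags and XDP_COPY, while those of Sanity Check #4 (-N -z) give XDP_ZEROCOPY instead.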

Note: Every time a program is loaded or unloaded in Linux eXpress Data Path (XDP) Native Mode, the Intel® Ethernet Linux driver flushes the TX and RX hardware queues of the Ethernet controller.

Sanity Check #3: Load and Execute “Native mode” AF_XDP Socket with XDP_COPY

Set up two or more ECI nodes according to the AF_XDP Socket (CONFIG_XDP_SOCKETS) guidelines.

Run xdpsock in its default rxdrop mode on the igc.ko, igb.ko, or stmmac.ko Intel® Ethernet Controller Linux interface in Native mode, forcing XDP_COPY (-c). xdpsock loads its precompiled BPF XDP program, which redirects received packets to the AF_XDP socket:

    $ xdpsock -i enp0s30f4 -q 0 -N -c
    sock0@enp0s30f4:0 rxdrop xdp-drv
                    pps         pkts        1.01
    rx              0           0
    tx              0           0
    sock0@enp0s30f4:0 rxdrop xdp-drv
                    pps         pkts        1.00
    rx              0           0
    tx              0           0
    sock0@enp0s30f4:0 rxdrop xdp-drv
                    pps         pkts        1.00
    rx              0           0
    tx              0           0
    sock0@enp0s30f4:0 rxdrop xdp-drv
                    pps         pkts        1.00
    rx              0           0
    tx              0           0
    ^C
    sock0@enp0s30f4:0 rxdrop xdp-drv
                    pps         pkts        0.47
    rx              0           0
    tx              0           0

Display the eBPF program that actively serves the AF_XDP socket:

    $ bpftool prog show
    ...
    29: xdp  tag 992d9ddc835e5629
            loaded_at 2020-03-13T16:14:38+0000  uid 0
            xlated 176B  not jited  memlock 4096B  map_ids 1

Display the active XSKMAP (xsks_map) that the eBPF program allocated in kernel memory:

    $ bpftool map
    1: xskmap  name xsks_map  flags 0x0
            key 4B  value 4B  max_entries 6  memlock 4096B

Press Ctrl + C to close the AF_XDP socket and unload the eBPF program.
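bpftool can also print JSON with the -j option, which is easier to check from a script than the text output above. The Python sketch assumes the field names printed by recent bpftool versions (id, type, name, map_ids) and is not an ECI tool; `xsk_maps`, `xdp_progs_using`, and `bpftool_json` are illustrative helpers.

```python
import json
import subprocess
from typing import Dict, Iterable, List


def xsk_maps(map_json: str) -> List[Dict]:
    """Return the XSKMAP entries from `bpftool -j map show` output."""
    return [m for m in json.loads(map_json) if m.get("type") == "xskmap"]


def xdp_progs_using(prog_json: str, map_ids: Iterable[int]) -> List[Dict]:
    """Return XDP programs from `bpftool -j prog show` that reference any of map_ids."""
    wanted = set(map_ids)
    return [
        p for p in json.loads(prog_json)
        if p.get("type") == "xdp" and wanted & set(p.get("map_ids", []))
    ]


def bpftool_json(*args: str) -> str:
    """Run bpftool with JSON output (requires root)."""
    result = subprocess.run(["bpftool", "-j", *args], check=True,
                            capture_output=True, text=True)
    return result.stdout
```

For example, while xdpsock is running, `xdp_progs_using(bpftool_json("prog", "show"), [m["id"] for m in xsk_maps(bpftool_json("map", "show"))])` lists the XDP programs that reference an XSKMAP.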

Sanity Check #4: Load and Execute “Native mode” AF_XDP Socket with XDP_ZEROCOPY

Set up two or more ECI nodes according to the AF_XDP Socket (CONFIG_XDP_SOCKETS) guidelines.

Run xdpsock in its default rxdrop mode on the igc.ko, igb.ko, or stmmac.ko Intel® Ethernet Controller Linux interface in Native mode, this time with XDP_ZEROCOPY enabled (-z). xdpsock loads its precompiled BPF XDP program, which redirects received packets to the AF_XDP socket:

    $ xdpsock -i enp0s30f4 -q 0 -N -z
    sock0@enp0s30f4:0 rxdrop xdp-drv
                    pps         pkts        1.01
    rx              0           0
    tx              0           0
    sock0@enp0s30f4:0 rxdrop xdp-drv
                    pps         pkts        1.00
    rx              0           0
    tx              0           0
    sock0@enp0s30f4:0 rxdrop xdp-drv
                    pps         pkts        1.00
    rx              0           0
    tx              0           0
    sock0@enp0s30f4:0 rxdrop xdp-drv
                    pps         pkts        1.00
    rx              0           0
    tx              0           0
    ^C
    sock0@enp0s30f4:0 rxdrop xdp-drv
                    pps         pkts        0.47
    rx              0           0
    tx              0           0

Display the eBPF program that actively serves the AF_XDP socket:

    $ bpftool prog show
    30: xdp  tag 992d9ddc835e5629
            loaded_at 2020-03-13T16:04:37+0000  uid 0
            xlated 176B  not jited  memlock 4096B  map_ids 2

Display the active XSKMAP (xsks_map) that the eBPF program allocated in kernel memory:

    $ bpftool map
    2: xskmap  name xsks_map  flags 0x0
            key 4B  value 4B  max_entries 6  memlock 4096B

Press Ctrl + C to close the AF_XDP socket and unload the eBPF program.
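The periodic statistics that xdpsock prints can be parsed to confirm, for example, that the rx counters increase once traffic is steered to the selected queue. The sketch assumes that xdpsock prints each report as a header line (socket@interface:queue, test, mode) followed by rx and tx counter lines, as in the runs above; `parse_xdpsock_stats` is an illustrative helper.

```python
import re
from typing import Dict, List

# Header line, for example: "sock0@enp0s30f4:0 rxdrop xdp-drv"
HEADER_RE = re.compile(r"^\s*(sock\d+)@(\S+):(\d+)\s+(\S+)\s+(\S+)")
# Counter line, for example: "rx   0   0" (pps, then total packets)
COUNTER_RE = re.compile(r"^\s*(rx|tx)\s+(\d+)\s+(\d+)")


def parse_xdpsock_stats(text: str) -> List[Dict]:
    """Turn xdpsock's periodic statistics into one dict per report."""
    reports: List[Dict] = []
    for line in text.splitlines():
        header = HEADER_RE.match(line)
        if header:
            sock, ifname, queue, test, mode = header.groups()
            reports.append({"socket": sock, "ifname": ifname, "queue": int(queue),
                            "test": test, "mode": mode})
            continue
        counter = COUNTER_RE.match(line)
        if counter and reports:
            direction, pps, pkts = counter.groups()
            reports[-1][direction] = {"pps": int(pps), "pkts": int(pkts)}
    return reports
```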

BPF Compiler Collection (BCC)

BPF Compiler Collection (BCC) makes it easy to build and load BPF programs into the kernel directly from Python code. This can be used for XDP packet processing. For more details, refer to the BCC web site.

By using BCC from a container in ECI, you can develop and test BPF programs attached to TSN NICs directly on the target without the need for a separate build system.

The following BCC XDP Redirect example is derived from the kernel self test. It creates two namespaces with two veth peers, and forwards packets in-between using generic XDP.


Create the veth devices and their peers in their respective namespaces:

    $ ip netns add ns1
    $ ip netns add ns2
    $ ip link add veth1 index 111 type veth peer name veth11 netns ns1
    $ ip link add veth2 index 222 type veth peer name veth22 netns ns2
    $ ip link set veth1 up
    $ ip link set veth2 up
    $ ip -n ns1 link set dev veth11 up
    $ ip -n ns2 link set dev veth22 up
    $ ip -n ns1 addr add 10.1.1.11/24 dev veth11
    $ ip -n ns2 addr add 10.1.1.22/24 dev veth22

Make sure that, without the XDP redirect, pinging from the veth peer in one namespace to the veth peer in the other namespace fails in both directions:

    $ ip netns exec ns1 ping -c 1 10.1.1.22
    PING 10.1.1.22 (10.1.1.22): 56 data bytes

    --- 10.1.1.22 ping statistics ---
    1 packets transmitted, 0 packets received, 100% packet loss

    $ ip netns exec ns2 ping -c 1 10.1.1.11
    PING 10.1.1.11 (10.1.1.11): 56 data bytes

    --- 10.1.1.11 ping statistics ---
    1 packets transmitted, 0 packets received, 100% packet loss

In another terminal, run the BCC container:

    $ docker run -it --rm \
        --name bcc \
        --privileged \
        --net=host \
        -v /lib/modules/$(uname -r)/build:/lib/modules/host-build:ro \
        bcc

Inside the container, create a new file xdp_redirect.py with the following content:

    #!/usr/bin/python
    from bcc import BPF
    import time

    b = BPF(text="""
    #include <uapi/linux/bpf.h>

    int xdp_redirect_to_111(struct xdp_md *xdp) {
        return bpf_redirect(111, 0);
    }

    int xdp_redirect_to_222(struct xdp_md *xdp) {
        return bpf_redirect(222, 0);
    }
    """, cflags=["-w"])

    flags = (1 << 1)  # XDP_FLAGS_SKB_MODE
    #flags = (1 << 2)  # XDP_FLAGS_DRV_MODE

    b.attach_xdp("veth1", b.load_func("xdp_redirect_to_222", BPF.XDP), flags)
    b.attach_xdp("veth2", b.load_func("xdp_redirect_to_111", BPF.XDP), flags)

    print("BPF programs loaded and redirecting packets, hit CTRL+C to stop")
    while True:
        try:
            time.sleep(1)
        except KeyboardInterrupt:
            print("Removing BPF programs")
            break

    b.remove_xdp("veth1", flags)
    b.remove_xdp("veth2", flags)

Run xdp_redirect.py to load the eBPF programs:

    $ python3 xdp_redirect.py
    BPF programs loaded and redirecting packets, hit CTRL+C to stop

In the first terminal, make sure that pinging from the veth peer in one namespace to the veth peer in the other namespace now works:

    $ ip netns exec ns1 ping -c 1 10.1.1.22
    PING 10.1.1.22 (10.1.1.22): 56 data bytes
    64 bytes from 10.1.1.22: seq=0 ttl=64 time=0.067 ms

    --- 10.1.1.22 ping statistics ---
    1 packets transmitted, 1 packets received, 0% packet loss
    round-trip min/avg/max = 0.067/0.067/0.067 ms

Still in the first terminal, confirm that pinging in the opposite direction also works, so traffic flows in both directions thanks to the XDP redirect:

    $ ip netns exec ns2 ping -c 1 10.1.1.11
    PING 10.1.1.11 (10.1.1.11): 56 data bytes
    64 bytes from 10.1.1.11: seq=0 ttl=64 time=0.044 ms

    --- 10.1.1.11 ping statistics ---
    1 packets transmitted, 1 packets received, 0% packet loss
    round-trip min/avg/max = 0.044/0.044/0.044 ms
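The example hard-codes ifindex 111 and 222, which only works because the veth devices were created with index 111 and index 222. As a sketch, the BPF source can instead be generated from interface names, resolving each ifindex with Python's socket.if_nametoindex; `render_redirect_prog`, `lookup_targets`, and `c_identifier` are hypothetical helpers, not part of BCC.

```python
import re
import socket
from typing import Dict

HEADER = "#include <uapi/linux/bpf.h>\n"

# Doubled braces are literal braces for str.format().
FUNC_TEMPLATE = """
int xdp_redirect_to_{name}(struct xdp_md *xdp) {{
    return bpf_redirect({ifindex}, 0);
}}
"""


def c_identifier(ifname: str) -> str:
    """Interface names may contain '-' or '.', which are not valid in C identifiers."""
    return re.sub(r"\W", "_", ifname)


def render_redirect_prog(targets: Dict[str, int]) -> str:
    """Build BPF C source with one xdp_redirect_to_<ifname>() per {ifname: ifindex}."""
    funcs = "".join(
        FUNC_TEMPLATE.format(name=c_identifier(name), ifindex=ifindex)
        for name, ifindex in targets.items()
    )
    return HEADER + funcs


def lookup_targets(*ifnames: str) -> Dict[str, int]:
    """Resolve interface names to ifindex values; raises OSError if one is missing."""
    return {name: socket.if_nametoindex(name) for name in ifnames}
```

Inside the container, which shares the host network namespace through --net=host, `BPF(text=render_redirect_prog(lookup_targets("veth1", "veth2")), cflags=["-w"])` would then expose xdp_redirect_to_veth2 for attaching to veth1 and xdp_redirect_to_veth1 for attaching to veth2.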