Deploy a Cluster with ClusterAPI

This section explains how to configure and run the Edge Conductor tool to deploy a cluster with ClusterAPI.

Cluster with ClusterAPI - Preparation

Follow the Hardware and Software Requirements for Edge Conductor Day-0 Host to prepare the Day-0 host hardware and software.

Follow the instructions in Build and Install Edge Conductor Tool to build and install the Edge Conductor tool. Go to the _workspace folder to run the Edge Conductor tool.

Before deploying a cluster with ClusterAPI, do the following:

Hardware

Prepare the bare metal servers on which the workload cluster will be deployed. These servers must have Baseboard Management Controller (BMC) support. BMC support is a hard requirement at the current stage.

Make the necessary configurations, such as IP address, username, and password, to enable BMC on these bare metal servers.

Set the correct time zone and system time in BIOS to avoid time mismatch between the Edge Conductor Day-0 machine and these bare metal servers.

Make the physical connections. The servers must be connected to two networks: the BMC network and the provisioning network. The bare metal servers must be able to access the Internet through the provisioning network. The Edge Conductor Day-0 machine must also be able to access both the BMC network and the provisioning network.

Software

Prepare a Kubernetes cluster and its kubeconfig to access it. This cluster will be transformed into a management cluster.

Operating System

Download the node OS image. For example, download the Ubuntu* OS provision image.

If the image is not already in raw format, convert it to raw format and generate its md5 checksum using the following commands:

qemu-img convert -O raw UBUNTU_20.04_NODE_IMAGE_K8S_v1.21.1.qcow2 UBUNTU_20.04_NODE_IMAGE_K8S_v1.21.1_ubuntu-raw.img

md5sum UBUNTU_20.04_NODE_IMAGE_K8S_v1.21.1_ubuntu-raw.img | cut -f1 -d' ' > UBUNTU_20.04_NODE_IMAGE_K8S_v1.21.1_ubuntu-raw.img.md5sum
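Before the image is served to the bare metal servers, it can be worth confirming that the .md5sum file still matches the image. The following is a minimal sketch, not part of Edge Conductor: the function name verify_image_md5 is hypothetical, and it assumes the .md5sum file sits next to the image and holds only the digest, as produced by the md5sum and cut pipeline above.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Edge Conductor): check that IMAGE.md5sum
# holds the MD5 digest of IMAGE.
# Returns 0 on match, 1 on mismatch, 2 if the checksum file cannot be read.
verify_image_md5() {
  local image="$1"
  local expected actual
  # Strip whitespace so a trailing newline in the checksum file does not matter.
  expected="$(tr -d '[:space:]' < "${image}.md5sum")" || return 2
  actual="$(md5sum "$image" | cut -f1 -d' ')"
  [ "$expected" = "$actual" ]
}

# Example usage:
# verify_image_md5 UBUNTU_20.04_NODE_IMAGE_K8S_v1.21.1_ubuntu-raw.img && echo "checksum OK"
```

A mismatch usually means the image was modified or re-converted after the checksum was generated, in which case the md5sum command should be run again.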

Configuration

Prepare ClusterAPI YAML files for creating all custom resources of the workload cluster to be deployed:

cluster custom resource YAML file: Example: cluster_cr.yml (example/cluster/clusterapi/v1alpha4_cluster_ubuntu.yaml)

controlplane custom resource YAML file: Example: controlplane_cr.yml (example/cluster/clusterapi/v1alpha4_controlplane_ubuntu.yaml)

worker custom resource YAML file: Example: worker_cr.yml (example/cluster/clusterapi/v1alpha4_workers_ubuntu.yaml)

bare metal host custom resource YAML file: Example: baremetalhost_cr.yml (example/cluster/clusterapi/bmhosts_crs.yaml)

Prepare a clusterapi_cluster.yml file with the following content for the workload cluster to be deployed:

cluster_name: < the workload cluster name defined in the management cluster >
cluster_namespace: < the namespace for all custom resources in the management cluster >
cluster_cr: < point to the cluster custom resource yaml file, file:// >
controleplane_cr: < point to the controlplane custom resource yaml file, file:// >
worker_cr: < point to the worker custom resource yaml file, file:// >
baremetalhost_cr: < point to the bare metal host custom resource yaml file, file:// >
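Because clusterapi_cluster.yml is written by hand, a quick check that all six keys are present can catch omissions early. The sketch below is an illustration only: the function name missing_cluster_keys is hypothetical, and it assumes each key starts at the beginning of a line with its value on the same line, as in the listing above. It does not validate the values themselves.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Edge Conductor): print every key from the
# listing above that is absent, or has an empty value, in the given file.
missing_cluster_keys() {
  local file="$1" key
  for key in cluster_name cluster_namespace cluster_cr controleplane_cr worker_cr baremetalhost_cr; do
    # Match "key:" at line start followed by at least one non-space character.
    grep -Eq "^${key}:[[:space:]]*[^[:space:]]" "$file" || echo "$key"
  done
}

# Example usage (prints nothing when all keys are set):
# missing_cluster_keys config/cluster/clusterapi_cluster.yml
```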

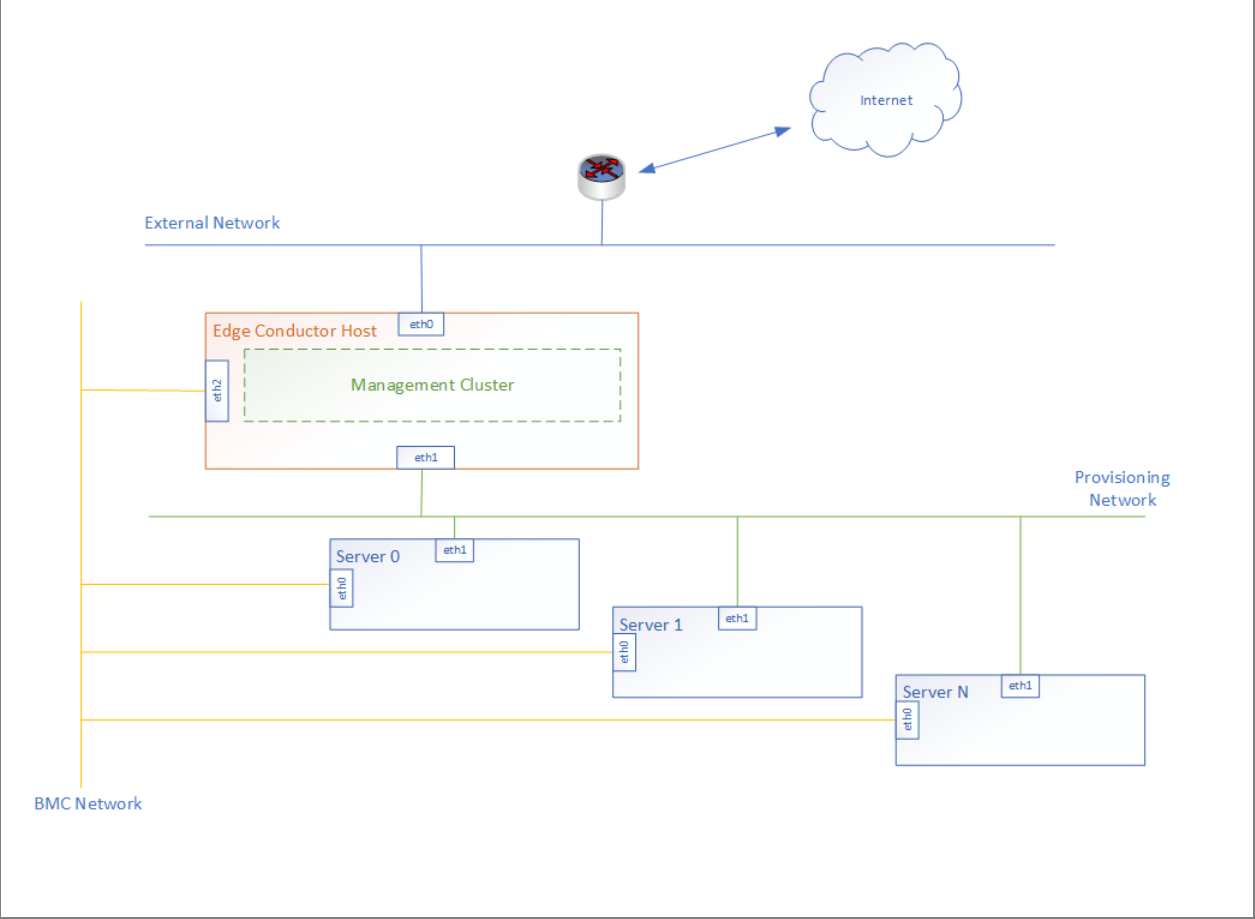

The figure above illustrates the topology of the Edge Conductor Day-0 machine and the bare metal servers. The management cluster is deployed on the Edge Conductor Day-0 machine. It can be deployed elsewhere as long as the Edge Conductor Day-0 machine can access it.

Make the necessary configurations on the Edge Conductor Day-0 machine to forward traffic from the workload cluster to the external network. This means that the Edge Conductor Day-0 machine acts as the gateway for the workload cluster. The following example uses iptables to configure the Edge Conductor Day-0 machine as the gateway. These settings do not persist across reboots; you need to reapply them after each reboot.

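# Assume the workload cluster uses the 192.168.206.0/24 subnet, which is the Provision Network illustrated in the figure above.

# Enable IPv4 forwarding

sudo sysctl -w net.ipv4.ip_forward=1

# Assign the gateway address to the interface on the provisioning network (eth1 in this example)

sudo ip addr add 192.168.206.1/24 dev eth1

# Add an iptables rule for SNAT, then allow forwarding to and from the provisioning network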

sudo iptables -t nat -A POSTROUTING -s 192.168.206.0/24 ! -d 192.168.206.0/24 -j MASQUERADE

sudo iptables -t filter -A FORWARD -s 192.168.206.0/24 -j ACCEPT

sudo iptables -t filter -A FORWARD -d 192.168.206.0/24 -j ACCEPT
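Since these settings are lost on reboot, one option is to keep the commands above in a small script that can be rerun. The following is a sketch under stated assumptions: the variable names, the run wrapper, and the DRY_RUN switch are hypothetical, and the defaults simply repeat the subnet, gateway address, and eth1 interface from the example above. With DRY_RUN=1 the commands are printed instead of executed, which allows a review before anything is changed.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Edge Conductor): reapply the gateway
# settings from the example above. Defaults mirror that example.
PROVISION_CIDR="${PROVISION_CIDR:-192.168.206.0/24}"
GATEWAY_ADDR="${GATEWAY_ADDR:-192.168.206.1/24}"
PROVISION_NIC="${PROVISION_NIC:-eth1}"

# Print the command when DRY_RUN=1, otherwise execute it with sudo.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    sudo "$@"
  fi
}

apply_gateway_rules() {
  run sysctl -w net.ipv4.ip_forward=1
  run ip addr add "$GATEWAY_ADDR" dev "$PROVISION_NIC"
  run iptables -t nat -A POSTROUTING -s "$PROVISION_CIDR" ! -d "$PROVISION_CIDR" -j MASQUERADE
  run iptables -t filter -A FORWARD -s "$PROVISION_CIDR" -j ACCEPT
  run iptables -t filter -A FORWARD -d "$PROVISION_CIDR" -j ACCEPT
}

# Example usage:
# DRY_RUN=1 apply_gateway_rules   # show what would be run
# apply_gateway_rules             # apply after a reboot
```

The sketch is intended for a freshly rebooted machine. Running it twice in the same boot appends duplicate iptables rules, and ip addr add reports an error if the address is already assigned.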

Cluster with ClusterAPI - Top Config

Create clusterapi-top-config.yml for the ClusterAPI cluster as shown in the following example:

Cluster:
  type: "clusterapi"
  config: "config/cluster/clusterapi_cluster.yml"

The config/cluster/clusterapi_cluster.yml file is the one prepared in Cluster with ClusterAPI - Preparation.

For other config sections and detailed description, refer to Top Config.

Cluster with ClusterAPI - Custom Config

Prepare a custom config file customcfg.yml following Custom Config. Note that the custom config file is a mandatory parameter for conductor init.

Cluster with ClusterAPI - Initialize Edge Conductor Environment

Run the following command to initialize the Edge Conductor environment:

./conductor init -c clusterapi-top-config.yml -m customcfg.yml

Cluster with ClusterAPI - Updated Resource YAML

Make sure the resource YAML files are updated correctly. For example, update the following details:

baremetalhost_cr: Edit the boot MAC address of the provisioning NIC, and update the BMC username, password, and endpoints.

cluster_cr: Update the cluster name and namespace name.

controleplane_cr and worker_cr: Edit the cluster name and namespace name, and add proxy and DNS settings.

Also, prepare the OS provision image declared in controleplane_cr and worker_cr. For example, if the declared image URL is http://192.168.206.1/images/UBUNTU_20.04_NODE_IMAGE_K8S_v1.21.1_ubuntu-raw.img, prepare that OS image and copy it to _workspace/runtime/clusterapi/ironic/html/images/.

wget https://artifactory.nordix.org/artifactory/airship/images/k8s_v1.21.1/UBUNTU_20.04_NODE_IMAGE_K8S_v1.21.1.qcow2

qemu-img convert -O raw UBUNTU_20.04_NODE_IMAGE_K8S_v1.21.1.qcow2 UBUNTU_20.04_NODE_IMAGE_K8S_v1.21.1_ubuntu-raw.img

md5sum UBUNTU_20.04_NODE_IMAGE_K8S_v1.21.1_ubuntu-raw.img | cut -f1 -d' ' > UBUNTU_20.04_NODE_IMAGE_K8S_v1.21.1_ubuntu-raw.img.md5sum

cp UBUNTU_20.04_NODE_IMAGE_K8S_v1.21.1_ubuntu-raw.img UBUNTU_20.04_NODE_IMAGE_K8S_v1.21.1_ubuntu-raw.img.md5sum <edge conductor folder>/_workspace/runtime/clusterapi/ironic/html/images/
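The file name at the end of the image URL declared in controleplane_cr and worker_cr has to match a file in the images directory. The following sketch, with the hypothetical function name image_is_staged, derives the file name from the URL and checks that both the image and its .md5sum file were copied. It assumes the checksum file is named after the image with a .md5sum suffix, as in the commands above.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Edge Conductor): check that the image named
# by the declared URL, and its .md5sum file, exist in the images directory.
image_is_staged() {
  local url="$1" images_dir="$2" name
  # The last path component of the URL is the file name served from images_dir.
  name="$(basename "$url")"
  [ -f "${images_dir}/${name}" ] && [ -f "${images_dir}/${name}.md5sum" ]
}

# Example usage:
# image_is_staged \
#   http://192.168.206.1/images/UBUNTU_20.04_NODE_IMAGE_K8S_v1.21.1_ubuntu-raw.img \
#   _workspace/runtime/clusterapi/ironic/html/images \
#   && echo "image staged"
```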

Cluster with ClusterAPI - Use Local Registry

If you are using a local registry with TLS enabled, you should distribute the CA certificate to each ClusterAPI node and configure the username and password on each ClusterAPI node.

Distribute a Certificate Authority (CA) certificate from the Day-0 machine to each ClusterAPI node.

For example:

examples/cluster/clusterapi/v1alpha4_controlplane_ubuntu.yaml

spec:
  infrastructureTemplate:
    apiVersion: infrastructure.cluster.x-k8s.io/v1alpha4
    kind: Metal3MachineTemplate
    name: test1-controlplane
  kubeadmConfigSpec:
    files:
      # Prepare the Day-0 machine ssh private key in order to copy the local registry certificate to the ClusterAPI node.
      - content: |
          # copy user metal3 private key from the Day-0 machine at here
          <user metal3 private key of the Day-0 machine>
        permissions: "0600"
        owner: root:root
        path: /root/id_rsa
    preKubeadmCommands:
      # Create the local registry certificate directory
      - mkdir -p /etc/containers/certs.d/<registry>:<registry port>
      # Copy the local registry certificate from the Day-0 machine to the ClusterAPI node
      - scp -i /root/id_rsa -o StrictHostKeyChecking=no <local registry CA certificate> /etc/containers/certs.d/<registry>:<registry port>/ca.crt

Create a local registry authentication file, containing the base64-encoded username and password, on each ClusterAPI node.

Run docker login < local registry IP:local registry port > to log in to the local registry server. This generates the local registry authentication file (~/.docker/config.json).

Configure the local registry authentication file (/etc/containers/auth.json) on each ClusterAPI node. The contents of auth.json are the same as those of the config.json file generated for the local registry.

For example:

examples/cluster/clusterapi/v1alpha4_controlplane_ubuntu.yaml

spec:
  infrastructureTemplate:
    apiVersion: infrastructure.cluster.x-k8s.io/v1alpha4
    kind: Metal3MachineTemplate
    name: test1-controlplane
  kubeadmConfigSpec:
    files:
      # Create the local registry authentication file with base64 of username and password
      - content: |
          {
            "auths": {
              "<local registry IP:local registry port>": {
                "auth": "<base64 of local registry user:password>"
              }
            }
          }
        owner: root:root
        path: /etc/containers/auth.json

Configure the local registry on each ClusterAPI node.

For example:

examples/cluster/clusterapi/v1alpha4_controlplane_ubuntu.yaml

spec:
  infrastructureTemplate:
    apiVersion: infrastructure.cluster.x-k8s.io/v1alpha4
    kind: Metal3MachineTemplate
    name: test1-controlplane
  kubeadmConfigSpec:
    files:
      # Configure the local registry
      - content: |
          [registries.search]
          registries = ['<local registry IP:local registry port>']
          global_auth_file="/etc/containers/auth.json"
          stream_tls_ca="/etc/containers/certs.d/<private registry>:<registry port>/ca.crt"
        owner: root:root
        path: /etc/containers/registries.conf

Restart the CRI-O service on each ClusterAPI node.

For example:

examples/cluster/clusterapi/v1alpha4_controlplane_ubuntu.yaml

spec:
  infrastructureTemplate:
    apiVersion: infrastructure.cluster.x-k8s.io/v1alpha4
    kind: Metal3MachineTemplate
    name: test1-controlplane
  kubeadmConfigSpec:
    preKubeadmCommands:
      # Restart crio service
      - systemctl stop crio
      - systemctl daemon-reload
      - systemctl enable --now crio kubelet
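The auth value in the auth.json example above is the same string that docker login writes to ~/.docker/config.json: the base64 encoding of the username and password joined by a colon. If copying it from config.json is not convenient, it can be computed directly. The function name registry_auth below is hypothetical, and the sketch is only an illustration of the encoding.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Edge Conductor): print the "auth" value for
# auth.json, that is, base64 of "username:password" without a trailing newline.
registry_auth() {
  local user="$1" password="$2"
  # tr removes the line breaks that some base64 implementations insert.
  printf '%s:%s' "$user" "$password" | base64 | tr -d '\n'
}

# Example usage:
# registry_auth "<local registry user>" "<local registry password>"
```

Passing a password as a command-line argument leaves it in the shell history, so for real credentials it may be preferable to copy the value that docker login generated.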

Cluster with ClusterAPI - Build and Deploy

Run the following commands to build and deploy the ClusterAPI cluster.

./conductor cluster build

./conductor cluster deploy

The kubeconfig will be copied to the default path ~/.kube/config.

Check the ClusterAPI Cluster

Install the kubectl tool (v1.20.0) to interact with the target cluster.

kubectl get nodes --kubeconfig kubeconfig
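Bare metal nodes can take a while to provision, so the node list may show nodes that are not yet Ready. As a rough sketch, the output of kubectl get nodes can be piped through a small filter that counts such nodes. The function name count_not_ready is hypothetical, and the sketch assumes the default column layout, in which the second column is STATUS, with the header row suppressed by --no-headers.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Edge Conductor): read "kubectl get nodes
# --no-headers" output on stdin and print how many nodes are not "Ready".
count_not_ready() {
  # Column 2 is STATUS in the default output; blank lines are ignored.
  awk 'NF && $2 != "Ready" { n++ } END { print n + 0 }'
}

# Example usage:
# kubectl get nodes --no-headers --kubeconfig kubeconfig | count_not_ready
```

A result of 0 means every listed node reports Ready. A cordoned node shows a status such as Ready,SchedulingDisabled and is counted as not ready by this filter.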

For more details, refer to Interact with Nodes.