Intel® Ethernet Controllers TSN Enabling and Testing Frameworks

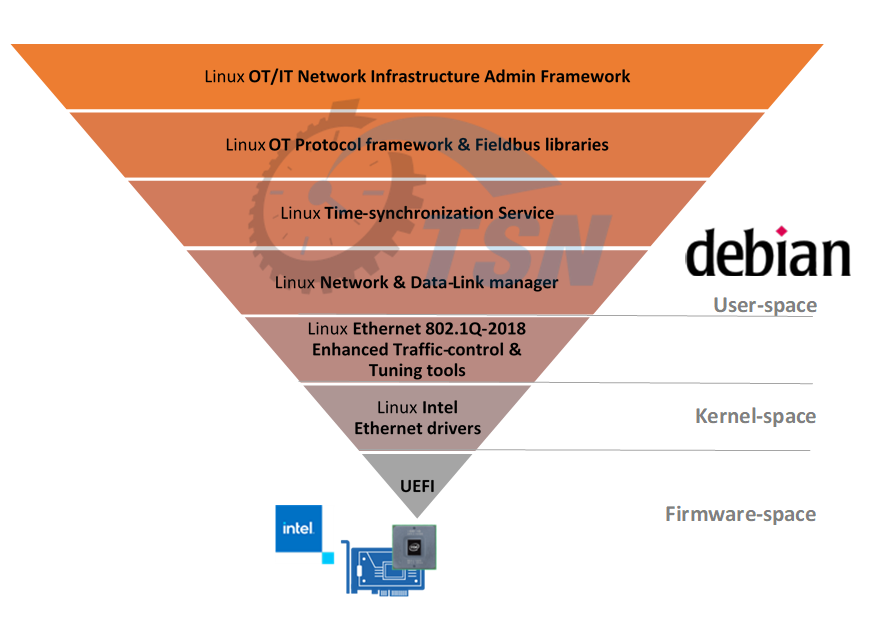

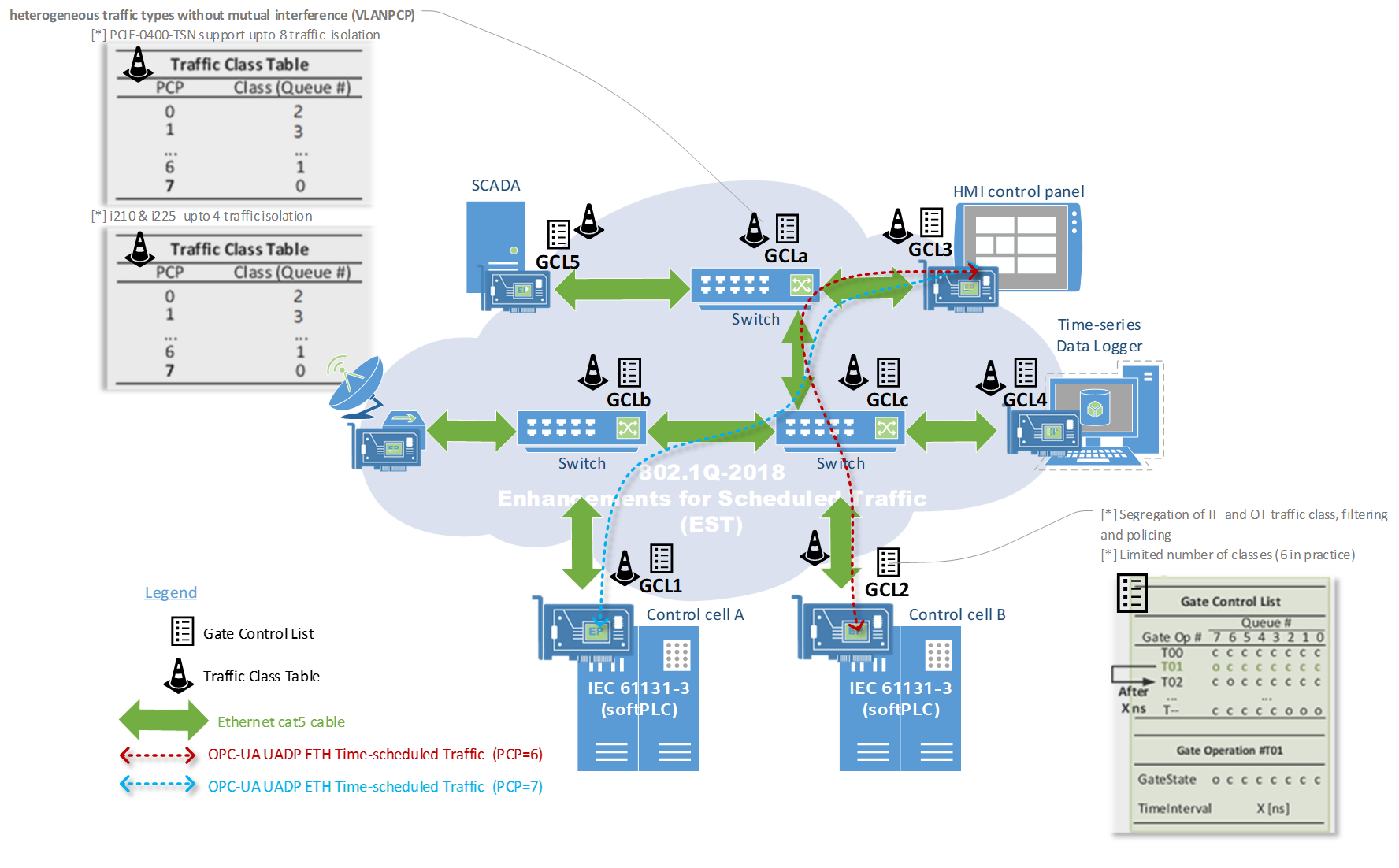

In the Avnu Alliance™ Best Practices document Theory of Operation for TSN-enabled Systems (Revision 1.0), Eric Gardiner presents Time-Sensitive Networking (TSN) and related efforts as mechanisms that address application requirements using standard Ethernet technologies, in a manner that enables convergence of Operational Technology (OT) and Information Technology (IT) data on a common physical network. By increasing connectivity to industrial devices, TSN enables a faster path to new business possibilities, including the Industrial Internet of Things (IIoT), big data analytics, and smart connected systems and machines.

Intel® Edge Controls for Industrial (ECI) enables both automation engineering and OT system administration teams to achieve industrial-grade Ethernet Quality-of-Service (QoS), per IEEE Std 802.1Q-2018, for OT/IT converged Time-Sensitive Networking (TSN) domains.

Intel® ECI introduces the latest Linux concepts and mechanisms to achieve OT/IT converged advanced traffic control and Express Data Path (XDP) accelerated packet processing.

Only certain Intel® Ethernet Controllers combine International Atomic Time (TAI) accurate time synchronization, time-aware traffic scheduling, and programmable Launch Time Layer 2 Ethernet hardware offload. Together, these capabilities let threaded control runtimes on Intel® 64 platforms achieve guaranteed-latency, priority-based isochronous traffic shaping as standardized in IEEE Std 802.1Q-2018. The supported controllers are listed below:

[Endpoint PCI 8086:a0ac] 11th Gen Intel® Core™ U-Series [Tiger Lake UP3] Ethernet GbE Time-Sensitive Network Controller

[Endpoint PCI 8086:4b32 and 8086:4ba0] Intel® Atom™ x6000 Series [Elkhart Lake] Ethernet GbE Time-Sensitive Network Controller

[Endpoint PCI 8086:15f2] Intel® Ethernet Controller I225-LM for Time-Sensitive Networking (TSN)

[Endpoint PCI 8086:157b, 8086:1533, …] Intel® Ethernet Controller I210-T1 for Time-Sensitive Networking (TSN)

[Switch PCI 1059:a100] Kontron PCIE-0400-TSN Network Interface Card

Explore the sections in the table below for an overview of Ethernet TSN and of the Linux software framework for enabling and testing Intel® Industrial Ethernet Controllers.

| Description | Sections |
|---|---|
| How can the Kontron® PCIE-0400-TSN product (i.e. TTTECH® DE-IP TSN switch on Intel® Cyclone V FPGA) bootstrap OT/IT networking QoS? | |
| How can Linux | |
| How to develop applications to take advantage of Express Data Path (XDP)? | |
| How to compare Intel® Industrial Ethernet TSN Endpoints hardware-offload performance at Linux driver level? | |
| How to develop applications to utilize the open62541 OPC UA library with Express Data Path (XDP)? | |
| How to utilize an IEC-62541 workload to collect a TSN domain interoperability dataset during IIC TSN plug-fest events? | |

IEEE 802.1AS Time-Aware Systems overview

Precision Time Protocol (PTP) is defined in IEEE 1588, the standard for a Precision Clock Synchronization Protocol for Networked Measurement and Control Systems. The IEEE 802.1AS standard specifies the use of IEEE 1588 specifications where applicable in the context of IEEE Std 802.1D-2004 and IEEE Std 802.1Q-2005. It accommodates distributed device clocks of varying precision and stability.

PTP is designed specifically for industrial control systems and is well suited to distributed systems because it requires minimal bandwidth and very little CPU processing overhead.

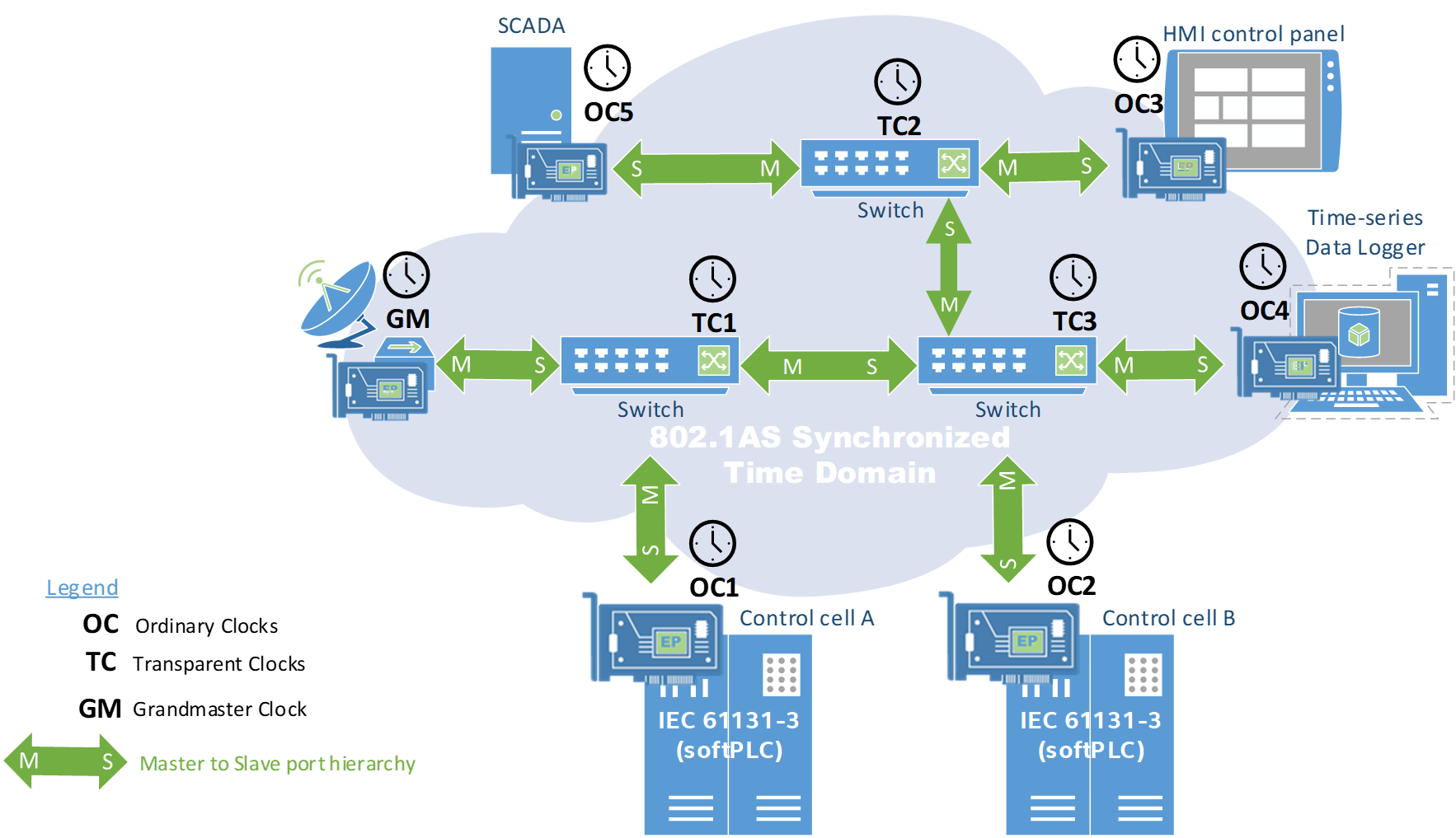

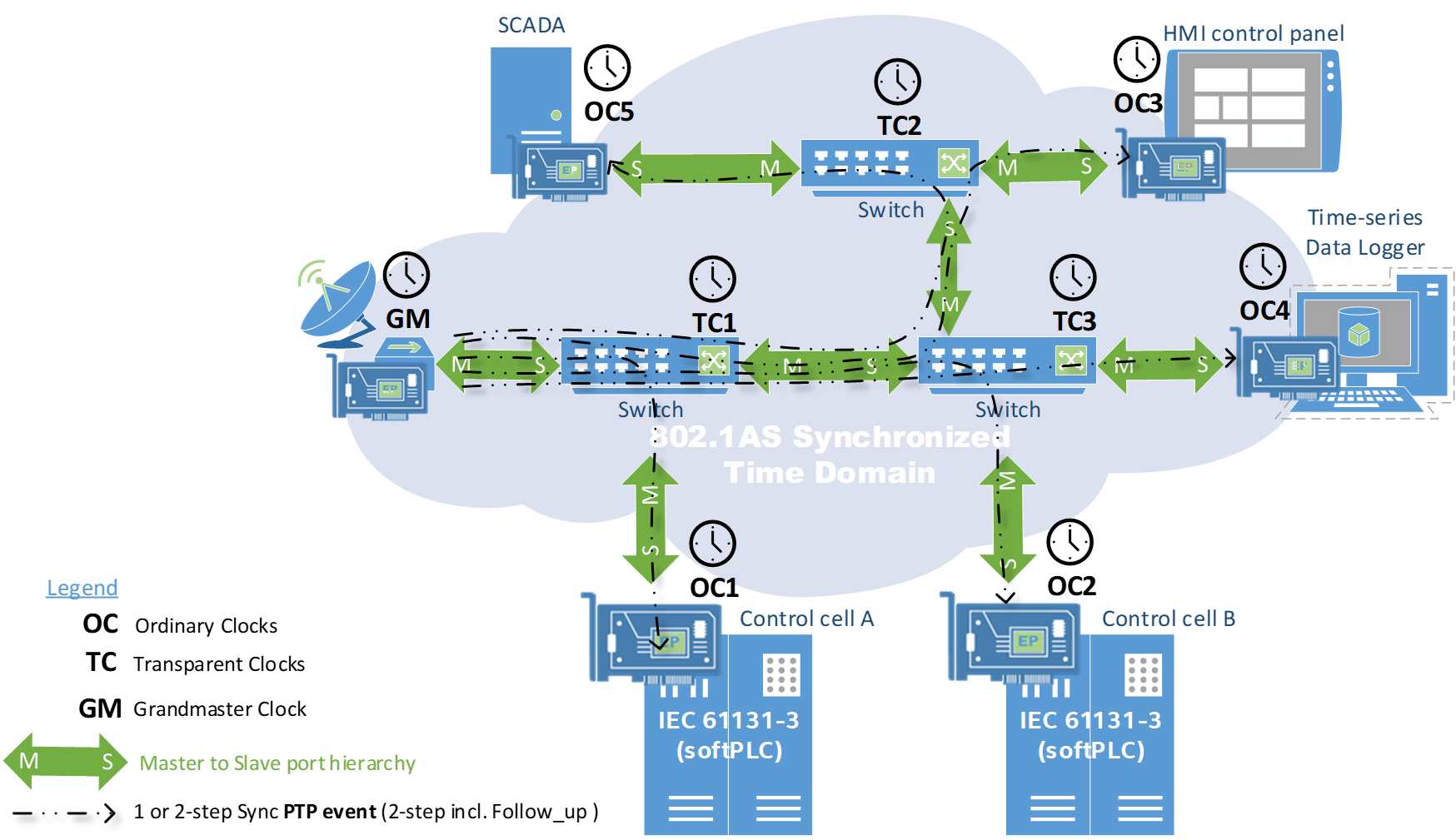

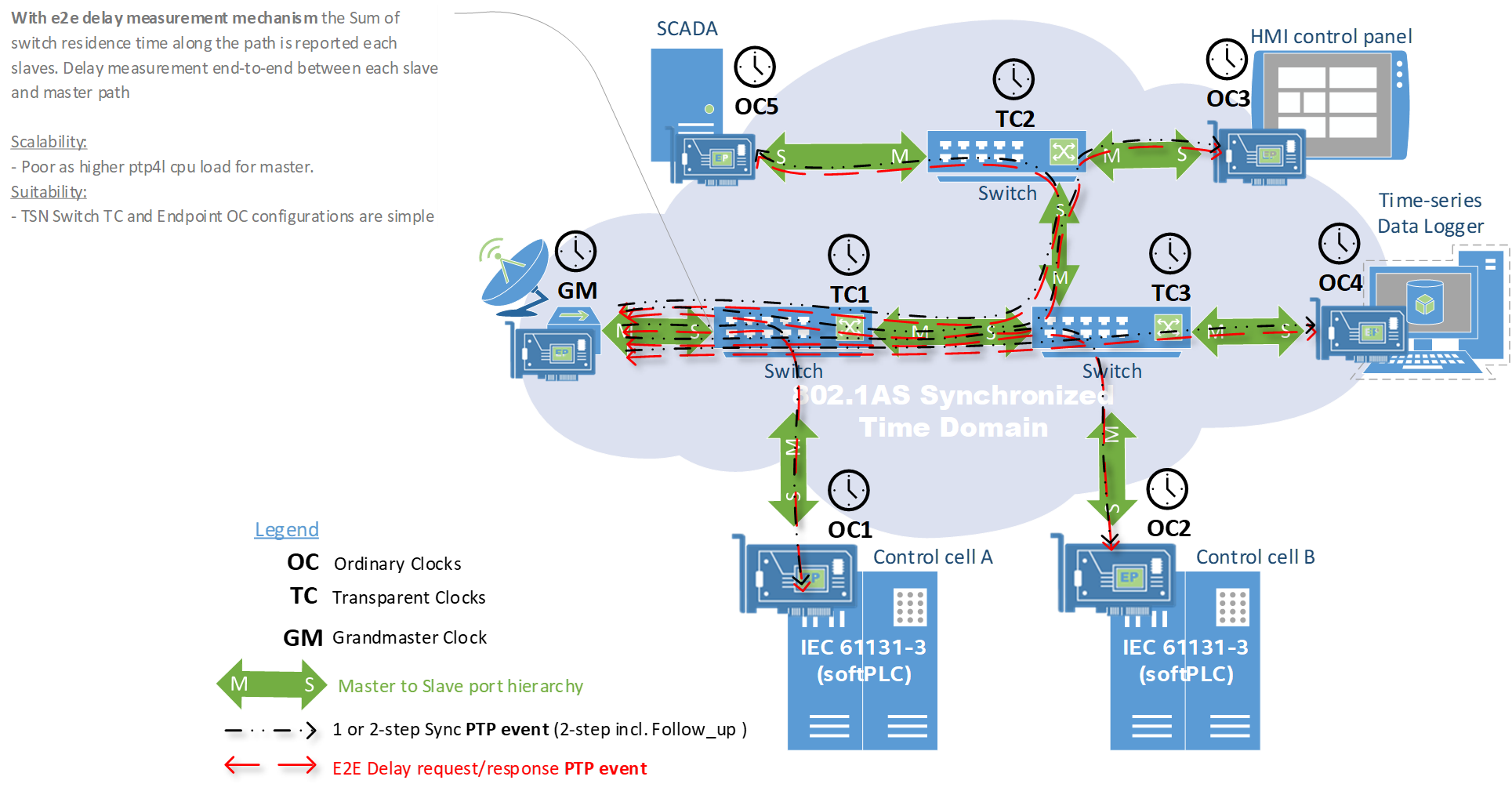

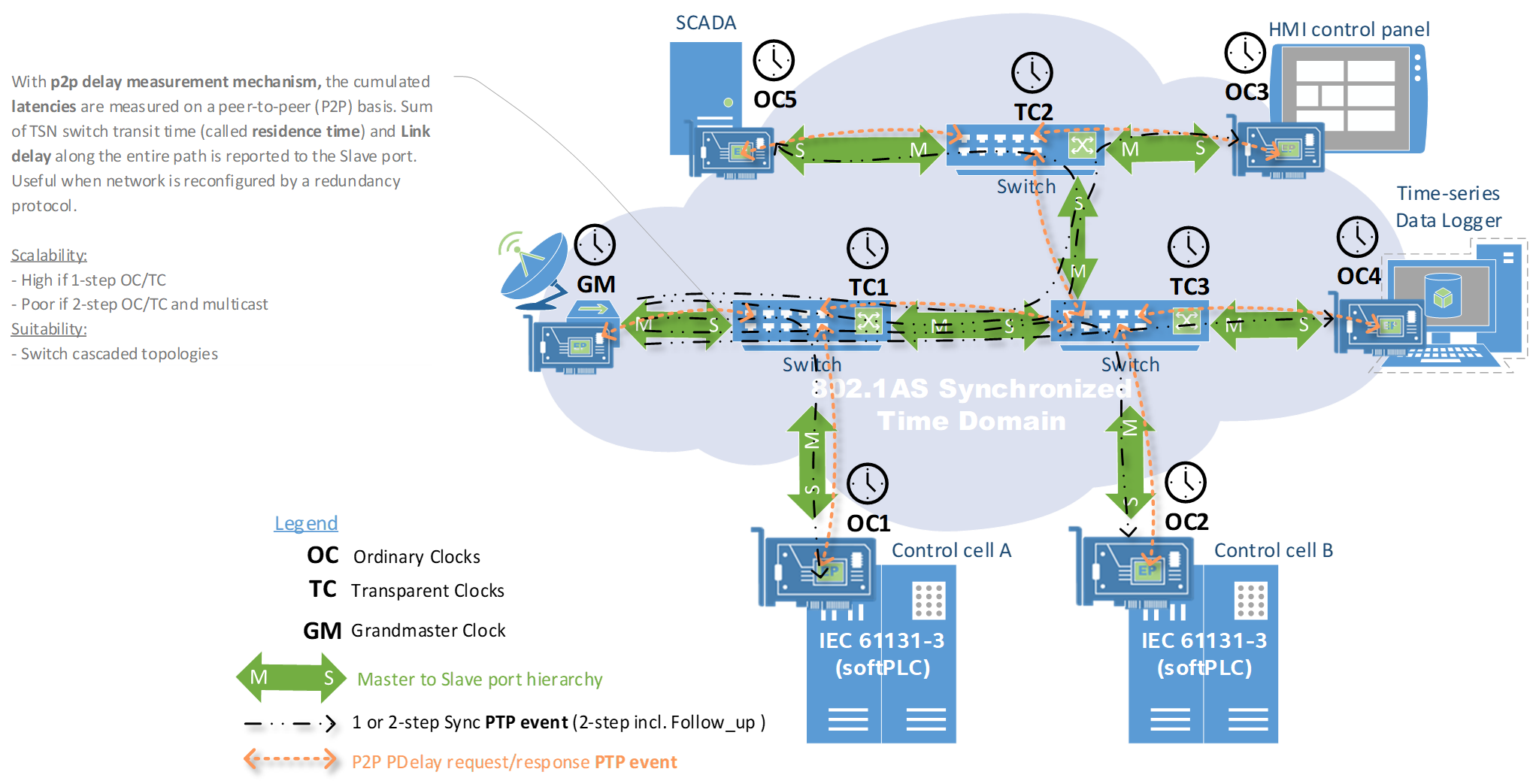

An IEEE 802.1AS capable TSN domain is made up of PTP-enabled, time-aware Ethernet endpoints and switches. The following figure illustrates PTP clocks in a master and slave Ethernet port hierarchy within a TSN domain.

PTP Clocks

Egress and ingress timestamp precision is of paramount importance for robust IEEE 802.1AS time synchronization. It is best obtained by running PTP directly over Layer 2 Ethernet (EtherType 0x88F7), optionally with VLAN tag(s), and by timestamping with PTP Hardware Clock (PHC) assistance on the following MAC multicast addresses:

01-1B-19-00-00-00 for all messages except peer delay measurement

01-80-C2-00-00-0E for peer delay measurement

A PTP network may comprise the following clock types:

Ordinary Clock

An Ordinary Clock (OC) functions as a single Precision Time Protocol (PTP) port and can be selected by the Best Master Clock Algorithm (BMCA) to serve as a master port or slave port within an IEEE 802.1 TSN domain.

OCs are the most common clock type because they are used as Ethernet endpoints on a network that is connected to devices requiring synchronization.

Best Master Clock Algorithm

The Best Master Clock Algorithm (BMCA) is the foundation of PTP functionality. It provides a means to establish which clock is the best master clock in its subdomain, out of all IEEE 802.1AS clocks advertised on the network using PTP unicast or multicast packets.

The BMCA must run locally on each Ethernet port of the IEEE 802.1 TSN network. It continuously monitors PTP packets at every Announce interval so that it can quickly adjust to changes in the time-synchronization configuration.

The BMCA based on IEEE 1588-2008 uses Announce PTP general messages for advertising clock properties.

The BMCA assesses the best master clock in the subdomain using the following criteria:

Clock quality (GPS is considered the highest quality)

Clock accuracy of the clock’s time base (decimal from 0-255)

Stability of the local oscillator

Closest clock to the grandmaster

To synchronize a local free-running clock, the BMCA based on IEEE 1588-2008 collects several data points and determines the best clock using the following attributes, in the indicated order:

Priority1 - User-assigned priority for each clock. The range is from 0 to 255.

Class - Class to which the clock belongs; each class has its own priority.

Accuracy - Precision between the clock and UTC, in nanoseconds.

Variance - Variability of the clock (ECI default 0xFFFF).

Priority2 - Final-defined priority. The range is from 0 to 255.

Extended Unique Identifier (EUI) - A 64-bit unique identifier, used as the final tie-breaker.
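The ordered comparison above can be sketched in shell by turning each advertised dataset into a fixed-width key and sorting. This is a simplified illustration rather than the full IEEE 1588 algorithm: it assumes that lower values win for every attribute, it ignores topology information such as the number of hops to the grandmaster, and the helper names bmca_key and bmca_best as well as the three clock identities are hypothetical.

```shell
#!/bin/sh
# Build a sortable key: zero-padded fields in BMCA comparison order.
# Args: priority1 clockClass clockAccuracy variance priority2 clockIdentity
bmca_key() {
    printf '%03d %03d %03d %05d %03d %s\n' "$1" "$2" "$3" "$4" "$5" "$6"
}

# Read keys on stdin and print the clockIdentity of the best (lowest) one.
bmca_best() {
    LC_ALL=C sort | head -n 1 | awk '{ print $6 }'
}

best=$(
    {
        bmca_key 248 248 0xfe 0xffff 248 001b21fffe0000a1   # hypothetical endpoint A
        bmca_key 128 248 0xfe 0xffff 248 001b21fffe0000b2   # hypothetical endpoint B
        bmca_key 128 248 0xfe 0xffff 248 001b21fffe0000c3   # hypothetical endpoint C
    } | bmca_best
)
echo "best clock: $best"
```

Endpoints B and C tie on every attribute up to Priority2 and beat A on Priority1, so the identity decides between them and B is selected.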

Transparent Clock

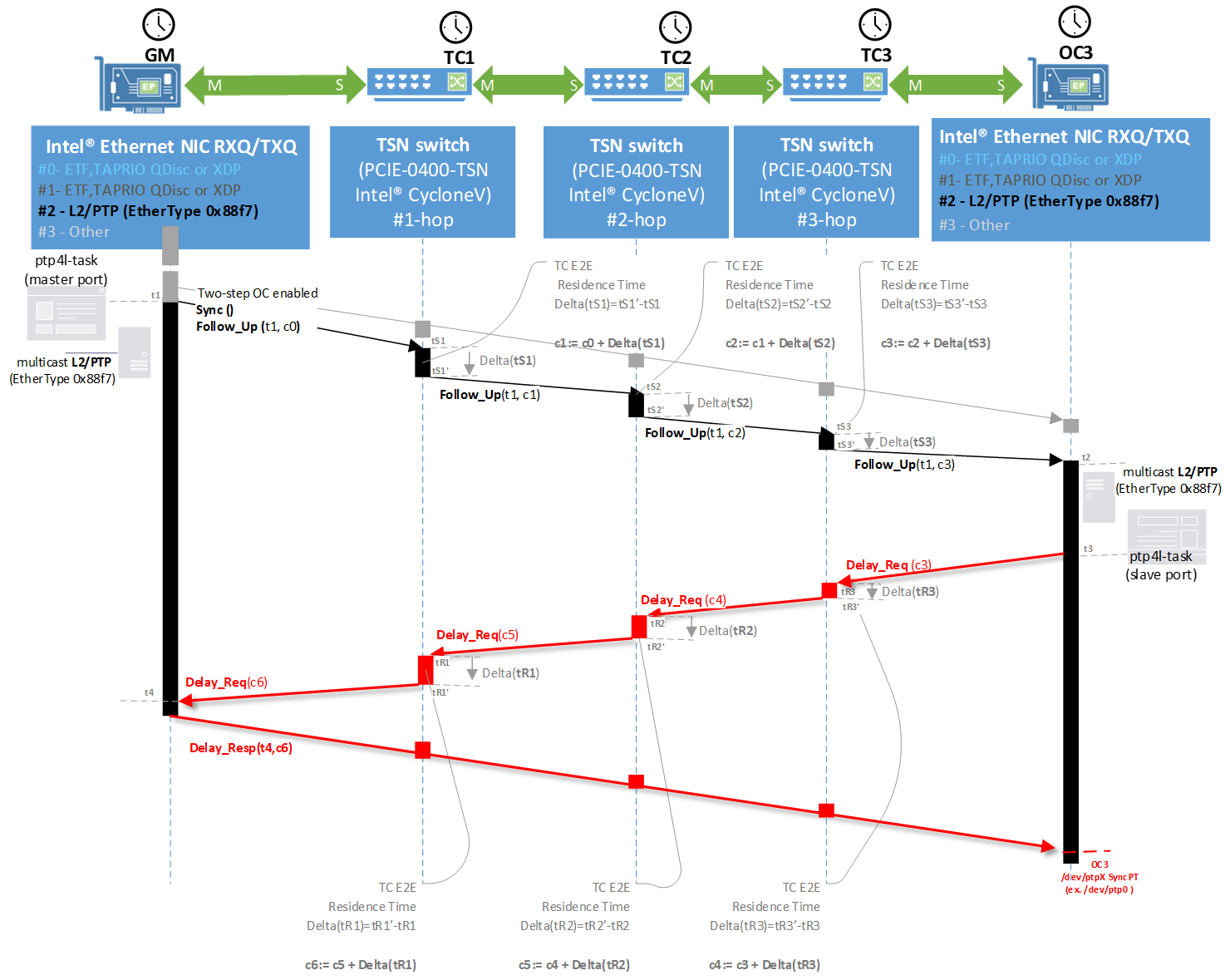

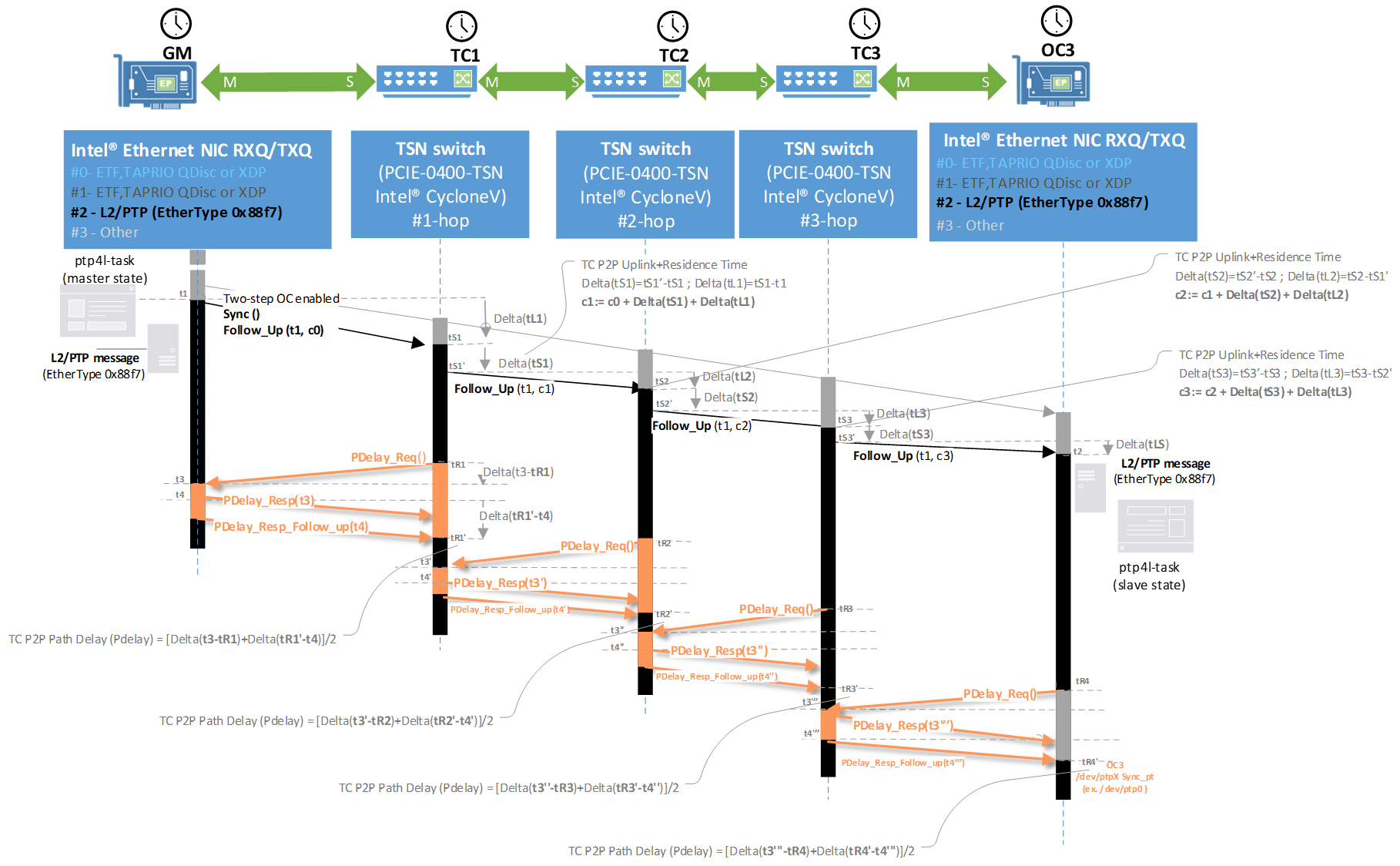

The role of Transparent Clocks (TC) in an IEEE 802.1 TSN domain with TSN switch hops is to update the correction (time-interval) field that is part of the PTP event message.

A TC provides a correction that compensates for the TSN switch propagation delay on the downstream data link to all slave ports receiving the PTP event message sequences during time-synchronization cycles.

There are two types of TC:

An End-to-End (E2E) transparent clock measures the PTP event message transit time (also called residence time). It does not compensate for the propagation delay of the link itself.

A Peer-to-Peer (P2P) transparent clock measures the PTP event message residence time (as an E2E TC does) and also compensates for the link propagation delay.

End-to-End Delay Measurement Mechanism

The End-to-End (E2E) delay request-response mechanism uses the following PTP general and event messages to generate and communicate timing information to synchronize ordinary, boundary and transparent clocks:

Sync

Delay_Req

Follow_Up

Delay_Resp

The measured residence time of a Sync PTP event message is added to the correction field of the corresponding Sync (one-step) or Follow_Up (two-step) message.

The measured residence time of a Delay_Req PTP event message is added to the correction field of the corresponding Delay_Resp message.

The slave port uses the correction field information when determining the offset between its own time and the master port time.
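As a worked form of this exchange, the slave-side arithmetic can be written out under the usual IEEE 1588 timestamp naming, which this page does not define and which is assumed here: $t_1$ is the Sync egress time at the master, $t_2$ the Sync ingress time at the slave, $t_3$ the Delay_Req egress time at the slave, and $t_4$ the Delay_Req ingress time at the master. $c_{\mathrm{Sync}}$ denotes the accumulated correction of Sync plus Follow_Up, and $c_{\mathrm{Resp}}$ the correction carried by Delay_Resp.

```latex
\begin{align}
\text{meanPathDelay} &= \frac{(t_2 - t_1) + (t_4 - t_3) - c_{\mathrm{Sync}} - c_{\mathrm{Resp}}}{2} \\
\text{offsetFromMaster} &= (t_2 - t_1) - \text{meanPathDelay} - c_{\mathrm{Sync}}
\end{align}
```

The sketch assumes a symmetric path; any asymmetry between the two directions appears directly as an offset error.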

Peer-to-Peer Delay Measurement Mechanism

The Peer-to-Peer (P2P) delay request-response mechanism improves the accuracy of the transit-time correction (also known as residence time) by including the link delay measured between two clock ports implementing the P2P TC.

P2P uses the following PTP general and event messages to generate and communicate link delay information:

Pdelay_Req

Pdelay_Resp

Pdelay_Resp_Follow_Up

The upstream link delay is the estimated packet propagation delay between the upstream neighbor P2P TC and the P2P TC under consideration.

Both the residence time and the upstream link delay are added to the correction field of the PTP event message, so the correction field of the message received by the slave port contains the sum of all residence times and link delays.

P2P delay measurement is very useful when the network is reconfigured by a redundancy protocol. However, this mechanism, which IEEE 802.1AS uses, requires every node to be PTP-enabled.
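The link delay measurement itself reduces to a short formula. The timestamp names are assumptions following common IEEE 1588 usage: $t_1$ is the Pdelay_Req egress time at the requester, $t_2$ its ingress time at the responder, $t_3$ the Pdelay_Resp egress time at the responder, and $t_4$ its ingress time at the requester. Correction fields and the neighbor rate ratio used by IEEE 802.1AS are left out of this simplified sketch.

```latex
\begin{equation}
\text{meanLinkDelay} = \frac{(t_4 - t_1) - (t_3 - t_2)}{2}
\end{equation}
```

Because $t_3 - t_2$ is the responder turnaround time, it is subtracted from the round trip before halving, so only the time spent on the wire in both directions remains.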

Grandmaster Clock

The grandmaster clock (GM) is the primary source of time for clock synchronization using PTP. The GM clock usually has a very precise time source, such as an external GPS receiver (for example, over the UART NMEA protocol) or an atomic-clock-accurate pulse-per-second (PPS) signal.

When the industrial IEEE 802.1 TSN domain does not require any external time reference and only needs to be synchronized to a single time reference, the GM clock can use a free-running oscillator.

Boundary Clock

A Boundary Clock (BC) in an IEEE 802.1 TSN domain operates in place of a standard network switch. The BC typically provides an interface between TSN domains. Such a device needs more than one PTP-enabled Ethernet port, and each port provides access to a separate PTP communication path.

The Boundary Clock (BC) uses the Best Master Clock Algorithm (BMCA) to select the best clock seen by any port. The selected Ethernet port is then set as a slave port. The master ports synchronize the clocks connected downstream, while the slave port synchronizes with the upstream master clock.

Linuxptp stack 802.1AS gPTP Profile

Intel® Ethernet Controllers provide hardware offload capabilities to synchronize clocks in packet-based networks, as defined by the IEEE 802.1AS PTP event message sequences.

The open-source linuxptp 3.1 is the essential ingredient to set up an IEEE 802.1AS-2011 gPTP profile on Intel® Ethernet Controllers:

Supports IEEE 802.1AS-2011 in the role of TSN endpoint (asCapable)

Implements Ordinary Clock (OC), Transparent Clock (TC) and Boundary Clock (BC).

PTPv2 message transport over UDP/IPv4, UDP/IPv6, and Layer 2 Ethernet (EtherType 0x88f7).

Implements Unicast, Multicast and Hybrid mode operation

Implements Peer-to-Peer (P2P) and End-to-End (E2E) delay measurement mechanisms (one-step or two-step)

Supports hardware and software time-stamping via the Linux SO_TIMESTAMPING socket option.

Supports the Linux PTP Hardware Clock (PHC) subsystem by using the clock_gettime family of calls, including the clock_adjtimex system call.

Adds ts2phc pin control and a GPS NMEA external time-reference source.

Supports VLAN interfaces

The current ECI release uses the following hardware, which is compatible with IEEE 1588-2008 and IEEE 802.1AS-2011:

[Endpoint ] Intel® Tiger Lake UP3 (TGL) Ethernet MAC Controller Time-Sensitive Networking (TSN) Reference Software

[Endpoint ] Intel® Ethernet Controller I225 Time-Sensitive Networking (TSN) Linux Reference upstream

[Endpoint ] Intel® Ethernet Controller I210 Time-Sensitive Networking (TSN) Linux Reference Software

[Switch] Kontron Kbox C-102-2 TSN StarterKit

IEEE 802.1AS gPTP profile essentials

The following linuxptp configurations are recommended for OT network administrators standing up an IEEE 802.1AS time domain:

DeepCascade 802.1AS

ECI Endpoints (i.e. master-only port)

    [global]
    gmCapable 1
    priority1 248
    priority2 248
    logAnnounceInterval 1
    logSyncInterval -3
    syncReceiptTimeout 3
    neighborPropDelayThresh 800
    min_neighbor_prop_delay -20000000
    assume_two_step 1
    path_trace_enabled 1
    follow_up_info 1
    ptp_dst_mac 01:80:C2:00:00:0E
    network_transport L2
    delay_mechanism P2P
    tx_timestamp_timeout 100
    transportSpecific 0x1
    clockClass 248
    clockAccuracy 0xfe
    offsetScaledLogVariance 0xffff
    timeSource 0xa0

Kontron PCIE-0400-TSN (i.e. switch ports)

    [global]
    gmCapable 0
    priority1 254
    tc_spanning_tree 1
    summary_interval 1
    assume_two_step 1
    follow_up_info 1
    ptp_dst_mac 01:80:C2:00:00:0E
    network_transport L2
    delay_mechanism P2P
    tx_timestamp_timeout 10
    clock_type P2P_TC
    productDescription Kontron;
    manufacturerIdentity 00:3a:98
    [CE01]
    transportSpecific 0x1
    [CE02]
    transportSpecific 0x1
    [CE03]
    transportSpecific 0x1
    [CE04]
    transportSpecific 0x1

ECI Endpoints OCx (slave-state port)

    [global]
    gmCapable 0
    priority1 128
    priority2 128
    logAnnounceInterval 1
    logSyncInterval -3
    syncReceiptTimeout 3
    neighborPropDelayThresh 8000
    min_neighbor_prop_delay -20000000
    assume_two_step 1
    path_trace_enabled 1
    follow_up_info 1
    ptp_dst_mac 01:80:C2:00:00:0E
    network_transport L2
    delay_mechanism P2P
    tx_timestamp_timeout 100
    transportSpecific 0x1

Star 802.1AS

Master (Kontron PCIE-0400-TSN switch ports)

    [global]
    gmCapable 1
    priority1 248
    priority2 248
    logAnnounceInterval 1
    logSyncInterval -3
    syncReceiptTimeout 3
    neighborPropDelayThresh 800
    min_neighbor_prop_delay -20000000
    assume_two_step 1
    path_trace_enabled 1
    follow_up_info 1
    ptp_dst_mac 01:80:C2:00:00:0E
    network_transport L2
    delay_mechanism P2P
    tx_timestamp_timeout 100
    summary_interval 0
    productDescription Kontron;
    manufacturerIdentity 00:3a:98
    [CE01]
    transportSpecific 0x1
    [CE02]
    transportSpecific 0x1
    [CE03]
    transportSpecific 0x1
    [CE04]
    transportSpecific 0x1

ECI Endpoints OCx (slave-state port)

    [global]
    gmCapable 0
    priority1 128
    priority2 128
    logAnnounceInterval 1
    logSyncInterval -3
    syncReceiptTimeout 3
    neighborPropDelayThresh 8000
    min_neighbor_prop_delay -20000000
    assume_two_step 1
    path_trace_enabled 1
    follow_up_info 1
    ptp_dst_mac 01:80:C2:00:00:0E
    network_transport L2
    delay_mechanism P2P
    tx_timestamp_timeout 100
    transportSpecific 0x1

Direct 802.1AS

ECI Endpoints (i.e. master-only port)

    [global]
    gmCapable 1
    priority1 248
    priority2 248
    logAnnounceInterval 1
    logSyncInterval -3
    syncReceiptTimeout 3
    neighborPropDelayThresh 800
    min_neighbor_prop_delay -20000000
    assume_two_step 1
    path_trace_enabled 1
    follow_up_info 1
    ptp_dst_mac 01:80:C2:00:00:0E
    network_transport L2
    delay_mechanism P2P
    tx_timestamp_timeout 100
    transportSpecific 0x1
    clockClass 248
    clockAccuracy 0xfe
    offsetScaledLogVariance 0xffff
    timeSource 0xa0

ECI Endpoints OCx (slave-state port)

    [global]
    gmCapable 0
    priority1 128
    priority2 128
    logAnnounceInterval 1
    logSyncInterval -3
    syncReceiptTimeout 3
    neighborPropDelayThresh 8000
    min_neighbor_prop_delay -20000000
    assume_two_step 1
    path_trace_enabled 1
    follow_up_info 1
    ptp_dst_mac 01:80:C2:00:00:0E
    network_transport L2
    delay_mechanism P2P
    tx_timestamp_timeout 100
    transportSpecific 0x1

An OT network administrator may decide to modify PTP master parameters at runtime using SET GRANDMASTER_SETTINGS_NP, as shown below:

/usr/bin/pmc -u -b 0 -t 1 "SET GRANDMASTER_SETTINGS_NP clockClass 248 clockAccuracy 0xfe offsetScaledLogVariance 0xffff currentUtcOffset 37 leap61 0 leap59 0 currentUtcOffsetValid 1 ptpTimescale 1 timeTraceable 1 frequencyTraceable 0 timeSource 0xa0"

ts2phc

ts2phc synchronizes one or more PTP Hardware Clocks using external time stamps.

Usage: ts2phc -f [configuration file]

Configuration File

The configuration file is divided into sections. Each section starts with a line containing its name enclosed in brackets, followed by its settings. Each setting is placed on a separate line and contains the name of the option and its value, separated by whitespace characters. Empty lines and lines starting with # are ignored.

There are two different section types.

The global section (indicated as [global]) sets the program options and default slave clock options. Other sections are clock specific sections, and they override the default options.

Slave clock sections give the name of the configured slave (e.g. [eth0]). Slave clocks specified in the configuration file need not be specified with the -c command line option.

Examples

    #
    # This example uses a PPS signal from a GPS receiver as an input to
    # the SDP0 pin of an Intel i210 card. The pulse from the receiver has
    # a width of 100 milliseconds.
    #
    # Important! The polarity is set to "both" because the i210 always
    # time stamps both the rising and the falling edges of the input
    # signal.
    #
    [global]
    use_syslog 0
    verbose 1
    logging_level 6
    ts2phc.pulsewidth 100000000
    [eth6]
    ts2phc.channel 0
    ts2phc.extts_polarity both
    ts2phc.pin_index 0

    #
    # This example shows ts2phc keeping a group of three Intel i210 cards
    # synchronized to each other in order to form a Transparent Clock.
    # The cards are configured to use their SDP0 pins connected in
    # hardware. Here eth3 and eth4 will be slaved to eth6.
    #
    # Important! The polarity is set to "both" because the i210 always
    # time stamps both the rising and the falling edges of the input
    # signal.
    #
    [global]
    use_syslog 0
    verbose 1
    logging_level 6
    ts2phc.pulsewidth 500000000
    [eth6]
    ts2phc.channel 0
    ts2phc.master 1
    ts2phc.pin_index 0
    [eth3]
    ts2phc.channel 0
    ts2phc.extts_polarity both
    ts2phc.pin_index 0
    [eth4]
    ts2phc.channel 0
    ts2phc.extts_polarity both
    ts2phc.pin_index 0

check_clocks

check_clocks is used to check time synchronization between a PTP Hardware Clock (PHC) and the Linux CLOCK_TAI and CLOCK_REALTIME system clocks.

Usage: check_clocks -d [ethernet interface] -v

Examples

    check_clocks -d enp3s0 -v
    Dumping timestamps and deltas
    rt tstamp: 1521111579039529335
    tai tstamp: 1521111579039529428
    phc tstamp: 1521111579039531493
    rt latency: 45
    tai latency: 40
    phc latency: 8384
    phc-rt delta: 2158
    phc-tai delta: 2065
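The delta fields in this sample output are consistent with plain differences of the nanosecond timestamps. The short shell sketch below recomputes them from the three timestamps; the helper name delta_ns is hypothetical, and treating the delta as a simple subtraction (with no compensation for the reported read latencies) is an assumption inferred from the numbers, not from the tool's source.

```shell
#!/bin/sh
# Timestamps in nanoseconds, copied from the sample output above.
rt=1521111579039529335
tai=1521111579039529428
phc=1521111579039531493

# Signed difference (first minus second) in nanoseconds;
# the values fit in 64-bit shell arithmetic.
delta_ns() {
    echo $(( $1 - $2 ))
}

echo "phc-rt delta:  $(delta_ns "$phc" "$rt")"
echo "phc-tai delta: $(delta_ns "$phc" "$tai")"
```

Deltas of a few microseconds or less, as here, indicate that the PHC and the system clocks are closely aligned.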

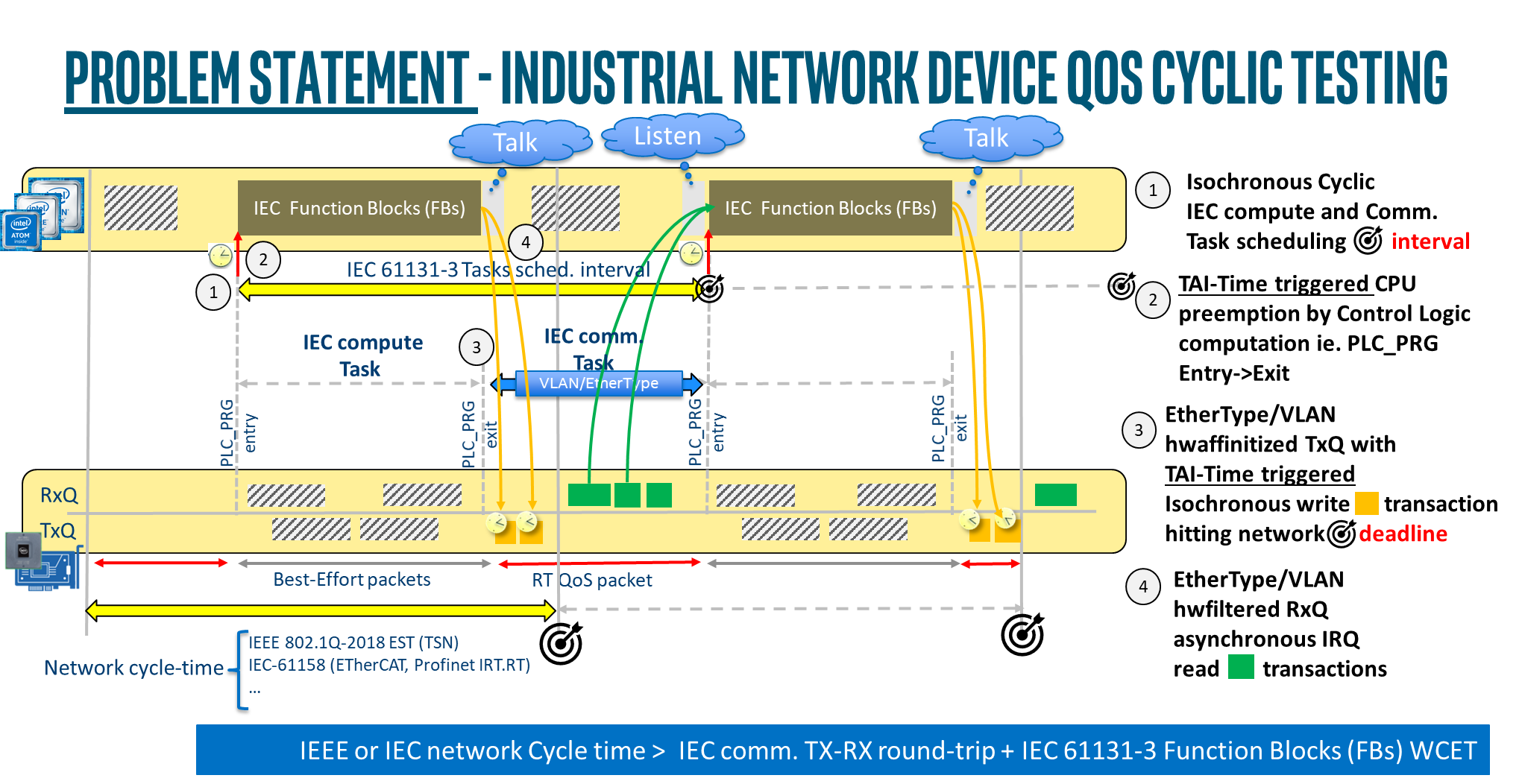

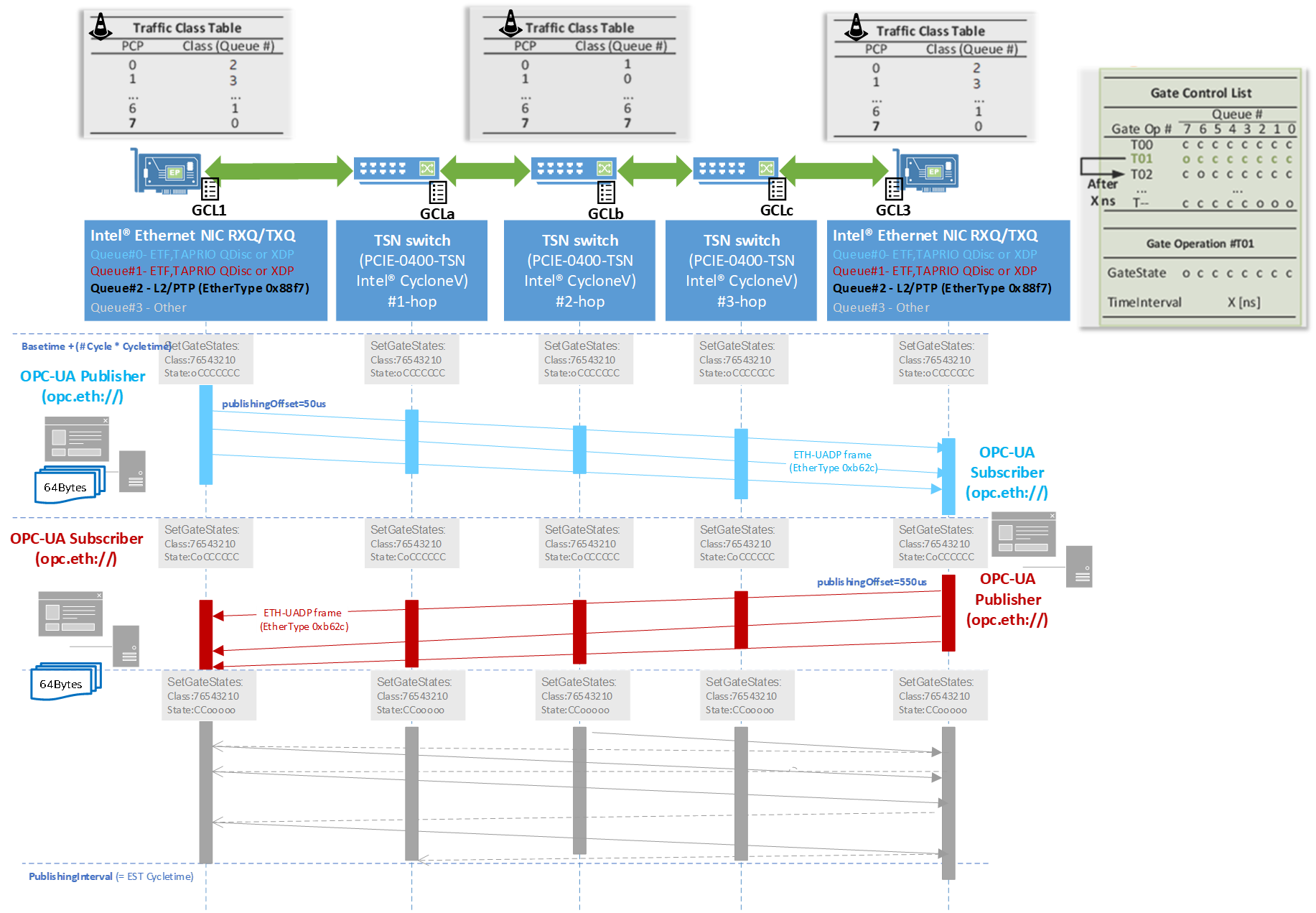

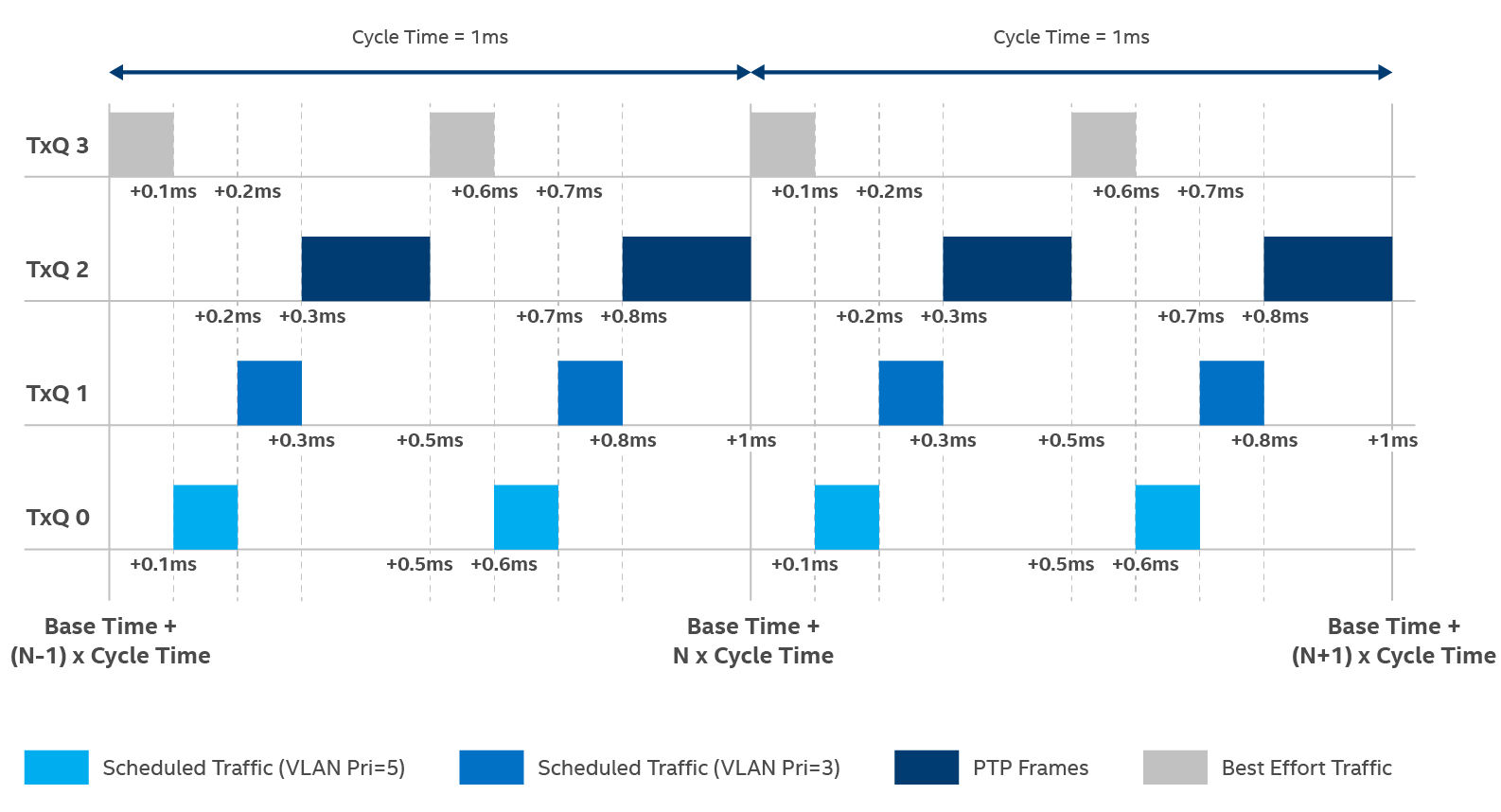

IEEE 802.1Q-2018 Industrial Ethernet Quality-of-Service (QoS) overview

IEEE 802.1Q-2018 enforces predictable delivery times by dividing Ethernet traffic into different classes, ensuring that, at specific times, only one traffic class (or set of traffic classes) has access to the network.

TSN endpoints and TSN bridges need time-aware traffic scheduling to enable quality of service for time-sensitive stream communication between Talkers and Listeners. To enable time-aware scheduling, bridges support the mechanisms defined in the IEEE 802.1Q-2018 Enhancements for Scheduled Traffic (EST) feature (formerly known as 802.1Qbv).

By introducing hardware differentiators, Intel® Ethernet controllers under Linux support OT network QoS administration by enforcing a Gate Control List (GCL), which defines which traffic queues are permitted to transmit at a specific point in time within a control network cycle:

Differentiate traffic between high-priority traffic and low priority or best-effort traffic (PCP)

Manage TX Hardware Queues to be switched ON or OFF according to a global Time-Aware scheduling-policy, that indicates the length of time for which an entry will be active on each port of each network device.

Virtual LANs (VLANs)

Virtual LAN (VLAN) QoS is the central pillar of the IEEE 802.1Q standard, allowing all Endpoints and Bridges to support the Forwarding and Queuing Enhancements for Time-Sensitive Streams (FQTSS) mechanisms:

to recognize VLAN Priority Information (PCP)

to identify Stream Reservation (SR) traffic classes

The VLAN interface is created using the ip-link command from the iproute2 project, which is pre-installed in ECI.

| PCP value | Priority | Acronym | Traffic types |
|---|---|---|---|
| 1 | 0 (lowest) | BK | Background |
| 0 | 1 (default) | BE | Best effort |
| 2 | 2 | EE | Excellent effort |
| 3 | 3 | CA | Critical applications |
| 4 | 4 | VI | Video, < 100 ms latency and jitter |
| 5 | 5 | VO | Voice, < 10 ms latency and jitter |
| 6 | 6 | IC | Inter-network control |
| 7 | 7 (highest) | NC | Network control |

The following example creates a VLAN interface (VLAN ID 5) on eth0.

    sudo ip link add link eth0 name eth0.5 type vlan id 5 egress-qos-map 2:2 3:3

After running this command:

The egress-qos-map argument defines a mapping of Linux internal packet priority (SO_PRIORITY) to the VLAN header PCP field for outgoing frames.

The format is FROM:TO, with multiple mappings separated by spaces. Here it maps SO_PRIORITY 2 to PCP 2 and SO_PRIORITY 3 to PCP 3.

For further information about command arguments, see the ip-link(8) manpage.
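To show where the mapped PCP value ends up on the wire, the sketch below assembles the 16-bit Tag Control Information (TCI) word of an 802.1Q VLAN header for the two mappings in the example. The helper name vlan_tci is hypothetical; the bit layout (PCP in the top three bits, then the DEI bit, then the 12-bit VLAN ID) is the standard 802.1Q layout.

```shell
#!/bin/sh
# Build the 16-bit 802.1Q Tag Control Information (TCI):
# PCP in bits 15..13, DEI in bit 12, VLAN ID in bits 11..0.
# Args: pcp dei vid
vlan_tci() {
    pcp=$1; dei=$2; vid=$3
    printf '0x%04x\n' $(( (pcp << 13) | (dei << 12) | vid ))
}

vlan_tci 3 0 5   # SO_PRIORITY 3 mapped to PCP 3 on VLAN ID 5
vlan_tci 2 0 5   # SO_PRIORITY 2 mapped to PCP 2 on VLAN ID 5
```

A packet capture on the egress port would be one way to confirm that frames sent with a given SO_PRIORITY carry the expected TCI value.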

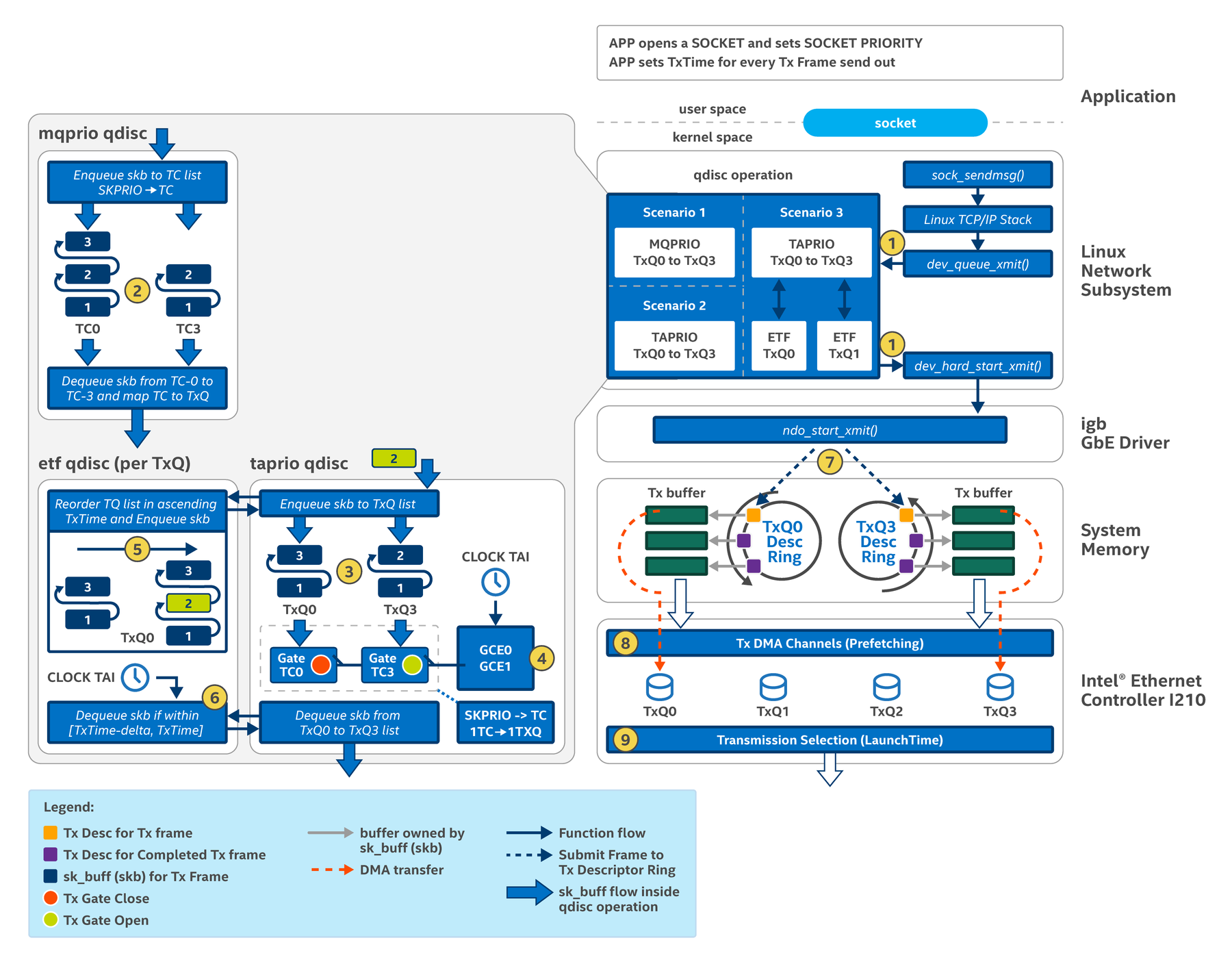

Linux Traffic Control (TC)

Linux Traffic Control (TC) provides various packet scheduling policies to ensure that inter-packet transmission latency on Intel® Ethernet Controllers deterministically meets an industrial-network-specific and/or user-defined cycle deadline.

Every ECI node is capable of supporting the transmission algorithms specified in the Forwarding and Queuing for Time-Sensitive Streams (FQTSS) chapter of IEEE 802.1Q-2018 through the use of TC queuing disciplines (qdiscs).

The following Linux network queuing disciplines are increasingly important for any TSN Endpoint that needs to comply with the Enhancements for Scheduled Traffic (EST) feature (formerly known as 802.1Qbv).

These qdiscs present several offload options to leverage hardware queueing support, as well as software implementations that can be used as a fallback.

For a more detailed and exhaustive user guide, refer to https://tsn.readthedocs.io/qdiscs.html

Earliest TxTime First (NET_SCHED_ETF) QDisc

While not an actual FQTSS feature, Intel® Ethernet Linux drivers also support the Earliest TxTime First (ETF) qdisc, which enables the LaunchTime feature present in the Intel® Ethernet Controller I210, I225-LM and TGL mGbE.

In Linux, this hardware feature is enabled through the SO_TXTIME socket option and the ETF qdisc.

The SO_TXTIME socket option allows applications to configure the transmission time for each frame, while the ETF qdisc ensures that frames coming from multiple sockets are sent to the hardware ordered by transmission time.

The following steps describe how an application sends a time-scheduled packet:

Open a raw, low-level packet interface socket.

    fd = socket(AF_PACKET, SOCK_RAW, IPPROTO_RAW);

Set the socket priority option (SO_PRIORITY) corresponding to the desired Virtual LAN (VLAN) QoS.

    setsockopt(fd, SOL_SOCKET, SO_PRIORITY, &priority, sizeof(priority));

Set the socket transmit time option (SO_TXTIME).

    struct sock_txtime sk_txtime;   /* declared in <linux/net_tstamp.h> */

    sk_txtime.clockid = CLOCK_TAI;
    sk_txtime.flags = (use_deadline_mode | receive_errors);
    if (setsockopt(fd, SOL_SOCKET, SO_TXTIME, &sk_txtime, sizeof(sk_txtime))) {
        exit_with_error("setsockopt SO_TXTIME");
    }

Note

The flags take bit-wise fields: deadline_mode at bit 0 (SOF_TXTIME_DEADLINE_MODE) and report_error at bit 1 (SOF_TXTIME_REPORT_ERRORS). For details about these fields, refer to "Add a new socket option for a future transmit time" and "Make etf report drops on error_queue".

For every TX packet, the user-space application shall specify the per-packet transmit time in the socket control message (cmsg) ancillary data before sending it, as illustrated in the following snippet of code:

    char control[CMSG_SPACE(sizeof(__u64))] = {0};  // ancillary data buffer
    struct msghdr msg = {0};
    struct cmsghdr *cmsg;
    struct iovec iov;

    iov.iov_base = rawpktbuf;             // the transmit packet
    iov.iov_len = sizeof(rawpktbuf);      // the size of the transmit packet
    msg.msg_iov = &iov;                   // scatter/gather array for the transmit packet
    msg.msg_iovlen = 1;
    msg.msg_control = control;            // room for the SCM_TXTIME control message
    msg.msg_controllen = sizeof(control);

    cmsg = CMSG_FIRSTHDR(&msg);           // obtain the control message header
    cmsg->cmsg_level = SOL_SOCKET;        // set to socket level
    cmsg->cmsg_type = SCM_TXTIME;         // ancillary data is a TXTIME control message
    cmsg->cmsg_len = CMSG_LEN(sizeof(__u64));
    *((__u64 *) CMSG_DATA(cmsg)) = txtime;  // per-packet transmit time (ns, CLOCK_TAI)
    sendmsg(fd, &msg, 0);

Note

A TX packet sent from user space is copied when it enters kernel space. The copied packet is stored in the data buffer pointed to by sk_buff, the socket buffer structure inside the Linux kernel that tracks network packets. For additional details, refer to the socket interface in the Linux networking subsystem.

Data copying becomes the bottleneck when a 100 us network cycle with very low-latency injection time is needed.

The ETF qdisc operates on a per-queue basis, so either a TAPRIO or an MQPRIO qdisc configuration is also required to expose the hardware transmission queues.

Both MQPRIO and TAPRIO do more than just expose the hardware transmission queues; they also define how Linux network priorities map into traffic classes and how traffic classes map into hardware queues.

The command-line example below illustrates queue configuration using the MQPRIO qdisc for the Intel® Ethernet Controller I210, which has 4 transmission queues.

    sudo tc qdisc add dev eth0 parent root handle 6666 mqprio \
        num_tc 3 \
        map 2 2 1 0 2 2 2 2 2 2 2 2 2 2 2 2 \
        queues 1@0 1@1 2@2 \
        hw 0

After running this command:

MQPRIO is installed as the root qdisc on the eth0 interface with handle ID 6666;

3 different traffic classes are defined (from 0 to 2), where Linux priority 3 maps into traffic class 0, Linux priority 2 maps into traffic class 1, and all other Linux priorities map into traffic class 2;

Packets belonging to traffic class 0 go into 1 queue at offset 0 (i.e. queue index 0 or TXQ0), packets from traffic class 1 go into 1 queue at offset 1 (i.e. queue index 1 or TXQ1), and packets from traffic class 2 go into 2 queues at offset 2 (i.e. queue indexes 2 and 3, or TXQ2 and TXQ3);

No hardware offload is enabled.
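To make the priority-to-queue resolution concrete, the following shell sketch replays the map and queues arguments from the MQPRIO example. The helper names nth and prio_to_txq are hypothetical and are not part of tc; the script only mimics the lookup and does not touch any network interface.

```shell
#!/bin/sh
MAP="2 2 1 0 2 2 2 2 2 2 2 2 2 2 2 2"   # Linux priority (index) -> traffic class
QUEUES="1@0 1@1 2@2"                    # traffic class (index) -> count@offset

# Print the N-th (0-based) word of the remaining arguments.
nth() {
    n=$1
    shift
    shift "$n"
    echo "$1"
}

# Resolve a Linux priority to its traffic class and TX queue range.
prio_to_txq() {
    prio=$1
    tc=$(nth "$prio" $MAP)
    spec=$(nth "$tc" $QUEUES)
    count=${spec%@*}
    offset=${spec#*@}
    last=$((offset + count - 1))
    echo "priority $prio -> tc $tc -> TXQ$offset..TXQ$last"
}

prio_to_txq 3
prio_to_txq 2
prio_to_txq 0
```

Priority 3 resolves to TXQ0, priority 2 to TXQ1, and every other priority to the TXQ2..TXQ3 pair, matching the description of the command.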

Note

With this MQPRIO configuration, Stream Reservation (SR) Class A (priority 3) is enqueued on TXQ0, the highest-priority transmission queue in the Intel® Ethernet Controller, while SR Class B (priority 2) is enqueued on TXQ1, the second-highest priority. All best-effort traffic goes into TXQ2 or TXQ3.

In the example below, the ETF qdisc is installed on TXQ0 and offload feature is enabled since the Intel(R) Ethernet Controller I210 driver supports the LaunchTime feature.

    sudo tc qdisc add dev eth0 parent 6666:1 etf \
        clockid CLOCK_TAI \
        delta 500000 \
        offload

After running this command:

The clockid parameter specifies which clock is used to set the transmission timestamps of frames (only CLOCK_TAI is supported). ETF also requires the system clock to be in sync with the PTP Hardware Clock.

The delta parameter specifies how long before the transmission timestamp the ETF qdisc sends the frame to hardware. That value depends on multiple factors and can vary from system to system. This example uses 500 us.

Important

On the Intel® Ethernet Controller I210 [Springville], traffic-shaping hardware offload is available ONLY on hardware queues 0 and 1 when all four TX queues are mapped through the queues argument in count@offset format. In GCL assisted mode, the OT administrator must map priorities 0..15 to traffic classes in descending order to enforce the GCL egress QoS policy from TXQ[0] to TXQ[3], given that only TXQ[0] and TXQ[1] can achieve hardware-offloaded EST scheduling.

For Ethernet Time-Sensitive Networking on 11th Gen Intel® Core™ Processors [Tiger Lake], certain traffic-shaping configurations are available ONLY on hardware queues 2 and 3 when all four TX queues are mapped through the queues argument in count@offset format. In GCL offload mode, the OT administrator must map priorities 0..15 to traffic classes in ascending order to enforce the GCL egress QoS policy from TXQ[0] to TXQ[3]. In GCL assisted mode, the OT administrator must map priorities 0..15 to traffic classes in descending order to enforce the GCL egress QoS policy from TXQ[0] to TXQ[3], given that only TXQ[2] and TXQ[3] can achieve hardware-offloaded EST scheduling.

For the Intel® Ethernet Controller I225 [Foxville] (see its Software User Manual), certain traffic-shaping configurations are available ONLY on hardware queues 2 and 3 when all four TX queues are mapped through the queues argument in count@offset format. In GCL offload mode, the OT administrator must map priorities 0..15 to traffic classes in ascending order to enforce the GCL egress QoS policy from TXQ[0] to TXQ[3]. In GCL assisted mode, the OT administrator must map priorities 0..15 to traffic classes in descending order to enforce the GCL egress QoS policy from TXQ[0] to TXQ[3], given that only TXQ[0] and TXQ[1] can achieve hardware-offloaded EST scheduling.

For further information about command arguments, see the tc-etf(8) manpage.

Time Aware Priority (NET_SCHED_TAPRIO) QDisc

IEEE 802.1Q-2018 introduces the Enhancements for Scheduled Traffic (EST) feature (formerly known as 802.1Qbv), which allows packet transmission from each Endpoint and Bridge hardware queue to be scheduled relative to a known, time-sliced Gate Control List (GCL) within the TSN domain.

The Linux Gate Control List (GCL) abstraction is quite simple:

A gate is associated with each transmission queue (TXQ).

The Open or Closed state of the transmission gate determines whether queued frames can be selected for transmission.

Each port is associated with a Gate Control List (GCL), which contains an ordered list of gate operations.

For further details about the EST algorithm, refer to section 8.6.8.4 of the IEEE 802.1Q standard.

The EST feature is supported in Linux via the TAPRIO qdisc. Similar to MQPRIO, the qdisc defines how Linux networking stack priorities map into traffic classes and how traffic classes map into hardware queues. Besides that, it also enables the user to configure the GCL for a given interface.
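The gate abstraction can be illustrated with a small shell sketch that walks a Gate Control List and reports which gate mask is active at a given offset from the schedule base time. The schedule (five 5 ms entries, one queue open per entry plus a final all-closed slot) and the helper names cycle_time and gate_mask_at are assumptions for illustration only; in a real system the kernel and the NIC perform this lookup.

```shell
#!/bin/sh
# Gate Control List: "gate-mask-hex duration-ns" per line (assumed schedule).
GCL="01 5000000
02 5000000
04 5000000
08 5000000
00 5000000"

# Sum of all entry durations, in nanoseconds.
cycle_time() {
    total=0
    while read -r _mask dur; do
        total=$((total + dur))
    done <<EOF
$GCL
EOF
    echo "$total"
}

# Print the gate mask active at the given offset (ns since base time).
gate_mask_at() {
    offset=$(( $1 % $(cycle_time) ))
    while read -r mask dur; do
        if [ "$offset" -lt "$dur" ]; then
            echo "$mask"
            return
        fi
        offset=$((offset - dur))
    done <<EOF
$GCL
EOF
}

echo "cycle time: $(cycle_time) ns"
echo "gate mask at +12 ms: 0x$(gate_mask_at 12000000)"
echo "gate mask at +27 ms: 0x$(gate_mask_at 27000000)"
```

Each bit of the mask corresponds to one traffic class, so mask 04 means that only the gate of traffic class 2 is open during that slot. The schedule repeats every cycle, which is why an offset of 27 ms falls back into the first entry.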

Note

On the Intel® Ethernet Controller I210 [Springville], traffic-shaping hardware offload is available ONLY on hardware queues 0 and 1 when all four TX queues are mapped through the queues argument in count@offset format. Consequently, TC mapping can only be achieved by mapping priorities 0..15 to traffic classes in descending order, to enforce affinity to the hardware-offload-capable TXQ[0] and TXQ[1]. Any other order results in the error RTNETLINK answers: Invalid argument

On Ethernet Time-Sensitive Networking for 11th Gen Intel® Core™ Processors [Tiger Lake], traffic-shaping hardware offload is available ONLY on hardware queues 2 and 3 when all four TX queues are mapped through the queues argument in count@offset format. Consequently, TC mapping can only be achieved by mapping priorities 0..15 to traffic classes in ascending order, to enforce affinity to the hardware-offload-capable TXQ[2] and TXQ[3].

For further information about command arguments, see the tc-taprio(8) manpage.

For a more detailed and exhaustive user guide, refer to the Intel® Ethernet Controller I210 Time-Sensitive Networking (TSN) Linux Reference Software and the Intel® Tiger Lake UP3 (TGL) Ethernet MAC Controller Time-Sensitive Networking (TSN) Reference Software.

TAPRIO Enhancements for Scheduled Traffic (EST) TXQ GCL Offload mode

The IEEE 802.1Q-2018 standard introduced the Enhancements for Scheduled Traffic (EST) (formerly known as 802.1Qbv) hardware feature to enforce a packet transmission scheduling policy for each Endpoint and Bridge hardware queue within the predefined TSN domain cycle time, possibly with frames preempted at time-window transitions.

TAPRIO setup with the Intel® Ethernet Controller I225 Time-Sensitive Networking (TSN) Linux driver and the Intel® Tiger Lake UP3 (TGL) Ethernet MAC Controller Linux drivers allows hardware queues to be set as preemptible queues:

    BASE=$(expr $(date +%s) + 5)000000000
    echo "$BASE"
    tc qdisc add dev eth0 parent root handle 100 taprio \
        num_tc 4 \
        map 0 1 2 3 3 3 3 3 3 3 3 3 3 3 3 3 \
        queues 1@0 1@1 1@2 1@3 \
        base-time $BASE \
        sched-entry S 01 5000000 \
        sched-entry S 02 5000000 \
        sched-entry S 04 5000000 \
        sched-entry S 08 5000000 \
        sched-entry S 00 5000000 \
        flags 0x2 \
        txtime-delay 0

Important

in TAPRIO GCL TXQ Offload mode

For issues, please open a case at https://premiersupport.intel.com/ (login with an Intel account is required) and file a new Intel Edge Software Recipes case under Category Software/Driver/OS and Subcategory Industrial Edge Control Software.

    rmmod igc
    insmod /lib/modules/5.4.59-rt36-intel-pk-preempt-rt/kernel/drivers/net/ethernet/intel/igc/igc.ko debug=16

Following parameters are added to support GCL offload-mode:

flagsused to enable GCL hwoffload value=0x2 capability and TX and RX traffic-shaping frames automatically (Note: These are transparent to the user or kernel)

txtime-delayThe value zero indicates that the packet scheduling and preemption are entirely hw-managed.

fpe-qmaskused to enable FPE hwoffload value=0x1 capability and preempt or reassemble frames automatically (Note: These are transparent to the user or kernel)Note

Intel® Ethernet Controller drivers do not yet support the SET_AND_HOLD and SET_AND_RELEASE Gate Control List (GCL) operations for IEEE 802.1Qbu Frame-Preemption hw-offload enabling, for example:

sched-entry S 01 5000000 \
sched-entry H 01 5000000 \
sched-entry R 02 5000000 \

Only global IEEE 802.1Qbu Frame-Preemption (FPE) hw-offload enabling is supported, via the tc qdisc .. taprio ... fpe-qmask=0x1 flags.

All queues used by the Intel® Tiger Lake UP3 (TGL) Ethernet MAC Controller Linux drivers are express queues by default. High-priority traffic classes (TC) should be mapped (map) to express queues (for example, Queue 0), since express-queue packets are not preempted.
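As a rough illustration of how the schedule in the offload example fits together, the sketch below sums the five sched-entry intervals into a cycle time and derives a base-time that falls on a cycle boundary a few seconds in the future. The helper names cycle_time_ns and next_base_time_ns are made up for this example and are not part of iproute2. Aligning the base-time to a cycle boundary is a convention assumed here, not something the commands above require. Like those commands, the sketch reads the clock with date +%s.

```shell
#!/bin/sh
# Sum the sched-entry intervals (nanoseconds) to obtain the cycle time.
cycle_time_ns() {
    total=0
    for interval in "$@"; do
        total=$((total + interval))
    done
    echo "$total"
}

# Print the first multiple of CYCLE_NS that is at least LEAD_S seconds
# after NOW_S. NOW_S is an argument (not read from the clock inside the
# function) so that the result is reproducible.
# Usage: next_base_time_ns NOW_S LEAD_S CYCLE_NS
next_base_time_ns() {
    now_s=$1
    lead_s=$2
    cycle_ns=$3
    earliest_ns=$(( (now_s + lead_s) * 1000000000 ))
    echo $(( (earliest_ns + cycle_ns - 1) / cycle_ns * cycle_ns ))
}

# Intervals taken from the five sched-entry lines of the example.
CYCLE=$(cycle_time_ns 5000000 5000000 5000000 5000000 5000000)
BASE=$(next_base_time_ns "$(date +%s)" 5 "$CYCLE")
echo "cycle time: ${CYCLE} ns, base-time: ${BASE}"
```

The printed base-time can be passed to the base-time argument in place of the $BASE value computed with expr.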

TAPRIO Enhancements for Scheduled Traffic (EST) TXQ GCL assisted-mode

Certain Intel® Ethernet Controllers do not provide the GCL hw-tc-offload feature.

However, Linux 802.1Q-2018 EST can still be leveraged on an ECI node, since ECI provides a recent version of the TAPRIO qdisc with SO_TXTIME assisted mode. This mode combines skb_data with the SO_TXTIME feature provided by the ETF qdisc at kernel level, which reduces packet-scheduling corner cases.

In SO_TXTIME assisted-mode, the LaunchTime feature in the Intel(R) Ethernet Controller I210 is used to schedule packet transmissions, emulating the EST algorithm.

In essence, the taprio qdisc will:

For all skb_data packets that do not have the SO_TXTIME field set, set the transmit timestamp (skb->tstamp).

Ensure that the transmit time for the packet is set to when the gate is open.

When SO_TXTIME is set, validate whether the timestamp (in skb->tstamp) occurs when the gate corresponding to the skb’s traffic class is open.

This mechanism reduces the risk, on Intel® Ethernet Controllers, of non-time-critical packets being transmitted outside of their time slice, either because of delay induced in the 802.3 and PHY layers or because high-priority hardware queues starve the low-priority queues.

BASE=$(expr $(date +%s) + 5)000000000
echo "$BASE"
tc -d qdisc replace dev enp1s0 parent root handle 100 taprio \
    num_tc 4 \
    map 0 1 2 3 3 3 3 3 3 3 3 3 3 3 3 3 \
    queues 1@0 1@1 1@2 1@3 \
    base-time $BASE \
    sched-entry S 01 5000000 \
    sched-entry S 02 5000000 \
    sched-entry S 04 5000000 \
    sched-entry S 08 5000000 \
    sched-entry S 00 5000000 \
    clockid CLOCK_TAI \
    flags 0x1 \
    txtime-delay 5000000
tc qdisc replace dev enp1s0 parent 100:1 \
    etf clockid CLOCK_TAI delta 5000000 \
    offload skip_sock_check

The following parameters are added to support Enhancements for Scheduled Traffic (EST) SO_TXTIME assisted mode:

flags: set to 0x1 to enable EST SO_TXTIME assisted mode, in which the kernel sets a TXTIME timestamp in the DMA descriptor of every egress packet (i.e. skb_data) sent through the ETF hw-offload queues.

txtime-delay: the minimum time it will take for the packet to hit the wire. This is useful in determining whether the packet can be transmitted in the remaining time when the gate corresponding to the packet is currently open.
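The gate check described above can be approximated in a few lines of shell. This is a sketch of the idea only, not the kernel's implementation: gate_mask_at and tc_gate_open are hypothetical helpers, the schedule is the five-entry example from this section, each bit of the gate mask is taken to correspond to one traffic class, and the timestamp is assumed to be at or after base-time.

```shell
#!/bin/sh
# Print the sched-entry gate mask in effect at TSTAMP_NS.
# Usage: gate_mask_at TSTAMP_NS BASE_NS MASK INTERVAL_NS [MASK INTERVAL_NS ...]
gate_mask_at() {
    tstamp_ns=$1
    base_ns=$2
    shift 2

    # First pass: the cycle time is the sum of all intervals
    # (every second argument).
    cycle_ns=0
    idx=0
    for arg in "$@"; do
        idx=$((idx + 1))
        if [ $((idx % 2)) -eq 0 ]; then
            cycle_ns=$((cycle_ns + arg))
        fi
    done

    # Second pass: walk the entries until the offset falls inside one.
    offset_ns=$(( (tstamp_ns - base_ns) % cycle_ns ))
    while [ $# -ge 2 ]; do
        if [ "$offset_ns" -lt "$2" ]; then
            echo "$1"
            return 0
        fi
        offset_ns=$((offset_ns - $2))
        shift 2
    done
    return 1
}

# Succeed when bit TC of the hexadecimal gate mask is set.
# Usage: tc_gate_open MASK_HEX TC
tc_gate_open() {
    [ $(( (0x$1 >> $2) & 1 )) -eq 1 ]
}

SCHED="01 5000000 02 5000000 04 5000000 08 5000000 00 5000000"
BASE=1000000000
TSTAMP=$((BASE + 7000000))      # 7 ms into the first cycle

# $SCHED is deliberately unquoted so it splits into mask/interval pairs.
MASK=$(gate_mask_at "$TSTAMP" "$BASE" $SCHED)
if tc_gate_open "$MASK" 1; then
    echo "gate for traffic class 1 is open (mask $MASK)"
else
    echo "gate for traffic class 1 is closed (mask $MASK)"
fi
```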

TAPRIO Enhancements for Scheduled Traffic (EST) TXQ GCL software-fallback

This mechanism reduces the risk, on Intel® Ethernet Controllers, of non-time-critical packets being transmitted outside of their time slice, either because of delay induced in the 802.3 and PHY layers or because high-priority hardware queues starve the low-priority queues.

BASE=$(expr $(date +%s) + 5)000000000
echo "$BASE"
tc qdisc add dev eth0 parent root handle 100 taprio \
    num_tc 4 \
    map 0 1 2 3 3 3 3 3 3 3 3 3 3 3 3 3 \
    queues 1@0 1@1 1@2 1@3 \
    base-time $BASE \
    sched-entry S 01 5000000 \
    sched-entry S 02 5000000 \
    sched-entry S 04 5000000 \
    sched-entry S 08 5000000 \
    sched-entry S 00 5000000 \
    clockid CLOCK_TAI \
    flags 0x0 \
    txtime-delay 5000000

The following two parameters are added to support Enhancements for Scheduled Traffic (EST) software fallback:

flags: set to 0x0 to select EST software mode, in which packet queueing is scheduled using the Linux high-resolution timer interrupt and softirq kernel workqueues.

txtime-delay: the minimum time it will take for the packet to hit the wire. This is useful in determining whether the packet can be transmitted in the remaining time when the gate corresponding to the packet is currently open.
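The three TAPRIO variants in this section differ mainly in the flags value. The small helper below, whose name taprio_mode is invented for this sketch, maps the values used in the three examples to short mode names derived from the section headings. Other values are reported as unknown rather than guessed.

```shell
#!/bin/sh
# Map a taprio flags value (decimal or 0x-prefixed hex) to the mode it
# selects in the examples of this section.
taprio_mode() {
    case $(( $1 )) in
        0) echo "software-fallback" ;;
        1) echo "txtime-assisted" ;;
        2) echo "gcl-offload" ;;
        *) echo "unknown" ;;
    esac
}

for flags in 0x0 0x1 0x2; do
    echo "flags $flags -> $(taprio_mode "$flags")"
done
```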

Packet Classifier (CONFIG_NET_CLS_FLOWER)

The Traffic Control (TC) Flower classifier allows matching packets against pre-defined flow key fields:

Packet headers: e.g. IPv6 source address

Tunnel metadata: e.g. Tunnel Key ID

Metadata: Input port

TC actions allow packets to be modified, forwarded, dropped, etc.:

pedit: modify packet data

mirred: output packet (mirror or redirect)

vlan: push, pop or modify VLAN

An ECI node administrator (root user) seeking the lowest ingress traffic latency can use the Intel I210 controller hardware filters (i.e. kernel CONFIG_NET_CLS_FLOWER) to steer, for example, all UADP ETH (Ethertype 0xb62c) Layer 2 ingress Ethernet packets onto I210 RX Queue #2, by setting up the following iproute2 tc filter ... flower commands:

Per-netdev configuration: allows enabling or disabling the addition of flows to hardware.

$ ethtool -K eth0 hw-tc-offload on
$ ethtool -K eth0 hw-tc-offload off

skip_hw and skip_sw flags: allow users to influence the placement of flows by the kernel. The default is to add the flow to software and try to add it to hardware.

$ tc filter add dev eth0 parent ffff: proto 0xb62c flower \
    src_mac cc:cc:cc:cc:cc:cc \
    hw_tc 1 skip_sw

in_hw and not_in_hw flags: allow the kernel to report the presence of a flow in hardware. In the output below, skip_sw shows that the policy was to add the rule to hardware only, and in_hw shows that the rule is present in hardware.

# tc filter show dev eth0 ingress
filter parent ffff: protocol ip pref 49152 flower chain 0 handle 0x1
  eth_type ipv4
  ip_proto sctp
  dst_port 80
  skip_sw
  in_hw
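To check offload placement from a script, the in_hw and not_in_hw tokens can be searched for in the tc filter show output. The sketch below reads that output on standard input. The function name offload_state is an assumption of this example, and the sample text is modelled on the output quoted above rather than taken from a live capture.

```shell
#!/bin/sh
# Classify "tc filter show" output read from stdin as in_hw, not_in_hw
# or unknown.
offload_state() {
    # Put one token per line so that in_hw never matches inside not_in_hw.
    tokens=$(tr -s ' \t' '\n\n')
    if printf '%s\n' "$tokens" | grep -qx 'in_hw'; then
        echo "in_hw"
    elif printf '%s\n' "$tokens" | grep -qx 'not_in_hw'; then
        echo "not_in_hw"
    else
        echo "unknown"
    fi
}

SAMPLE='filter parent ffff: protocol ip pref 49152 flower chain 0 handle 0x1
  eth_type ipv4
  ip_proto sctp
  dst_port 80
  skip_sw
  in_hw'

printf '%s\n' "$SAMPLE" | offload_state
```

On a live system the same function could be fed directly, for example with tc filter show dev eth0 ingress | offload_state (root privileges are typically required).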

When tc filter ... flower capabilities are too limited for a complex ingress traffic scenario, or simply not supported by the Intel® controller, ethtool -U flow-type can be used as an alternative.

Steer ingress UADP ETH (Ethertype 0xb62c) Layer 2 Ethernet packet to RX Queue #2

$ ethtool -U eth2 flow-type ether proto 0xb62c action 2

Steer ingress PTPv2 (Ethertype 0x88f7) Layer 2 Ethernet packet to RX Queue #2

$ ethtool -U eth2 flow-type ether proto 0x88f7 action 2

Steer ingress packet with VLAN priority 3 (see IEEE 802.1Q header format) to RX Queue #2

$ ethtool -U eth2 flow-type ether proto 0x8100 vlan 0x6000 m 0x1FFF action 2

Steer ingress packet with specified mac source address to RX Queue #2

$ ethtool -U eth2 flow-type ether src cc:cc:cc:cc:cc:cc action 2
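The vlan 0x6000 value in the VLAN priority example above is priority 3 shifted into the top three bits (the PCP field) of the 16-bit VLAN tag control field. The sketch below derives that value for any priority and prints the matching ethtool command without executing it. The helper name pcp_to_vlan_tci is hypothetical, and the m 0x1FFF mask is simply copied from the example.

```shell
#!/bin/sh
# Print the VLAN TCI value (hex) that carries priority PCP in bits 15..13.
pcp_to_vlan_tci() {
    pcp=$1
    if [ "$pcp" -lt 0 ] || [ "$pcp" -gt 7 ]; then
        echo "PCP must be in the range 0..7" >&2
        return 1
    fi
    printf '0x%04x\n' $(( pcp << 13 ))
}

# Dry run: print the command instead of running it, since ethtool -U
# needs root privileges and a supporting controller.
echo "ethtool -U eth2 flow-type ether proto 0x8100 vlan $(pcp_to_vlan_tci 3) m 0x1FFF action 2"
```

The mask appears to mark the lower 13 bits (DEI and VLAN ID) as ignored so that only the priority is matched. That reading of the m keyword should be checked against the ethtool manpage for the version in use.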

Note

The Intel® Ethernet Controller I210 does not support steering traffic based on the source address alone, so another match criterion is needed. In this case, the Ethernet type (0xb62c) is used to steer traffic to the RX queues.

Also, tc filter ... flower traffic steering in_hw is limited to 1. It is recommended to use ethtool -U flow-type ntuple filters for the ingress 802.1Q traffic steering use case.

The Intel® Ethernet Controller I225 Time-Sensitive Networking (TSN) upstream Linux reference driver does not yet support tc filter ... flower traffic steering based on Ethertype. Only MAC-address-based offload conditions can be used.

It is recommended to use ethtool -U flow-type ntuple filters for the ingress 802.1Q traffic steering use case.

For further information about command arguments, see the tc-flower manpage.