eBPF offload XDP support on Intel® Ethernet Linux driver¶

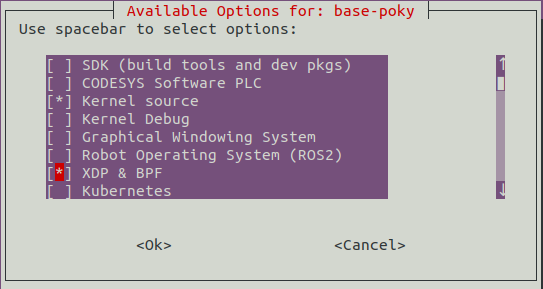

Linux eXpress Data Path (XDP) is an optional ECI user feature (see also the kas project configuration file opt-xdp-bpf.dunfell.yml).

Linux eXpress Data Path (XDP)¶

Intel® upstream contributions accelerate the adoption of Linux eXpress Data Path (XDP) across legacy and latest Intel® Ethernet Linux drivers (e.g. e1000, igb, igc, stmmac-pci, …).

ECI provides Linux eBPF offload XDP and Traffic Control (TC) BPF classifier (aka cls_bpf) support for both the Intel® Tiger Lake UP3 (TGL) Ethernet MAC controller (mGBE) and the Intel® Ethernet Controller I210.

Note

Intel® Ethernet Controller I225-LM support is experimental. Stay tuned by subscribing to the Intel® Linux upstream intel-wired-lan mailing list.

Linux AF_PACKET sockets with Qdisc have performance limitations. The table below compares both design approaches side by side:

| | AF_PACKET socket with Qdisc | AF_XDP socket / eBPF offload |
|---|---|---|
| Linux network stack (TCP/IP, UDP/IP, …) | Yes | BPF runtime program/library, igb_xdp_ring direct DMA |
| OSI layer L4 (protocol) to L7 (application) | Yes | No |
| Number of packet copies from kernel to user space | Several skb_data memcpy | None in UMEM/zero-copy mode, a few in UMEM/copy mode |
| IEEE 802.1Q-2018 Enhancements for Scheduled Traffic (EST) and Frame Preemption (FPE) | Standardized API for hardware offload | Customized hardware offload |
| Deterministic Ethernet network cycle-time requirement | Moderate | Tight |
| Per-packet TxTime constraints | Yes, AF_PACKET SO_TXTIME cmsg | Yes, AF_XDP (xdp_desc txtime) |
| IEEE 802.1AS L2/PTP RX & TX hardware offload | Yes, L2/PTP | Yes, L2/PTP |

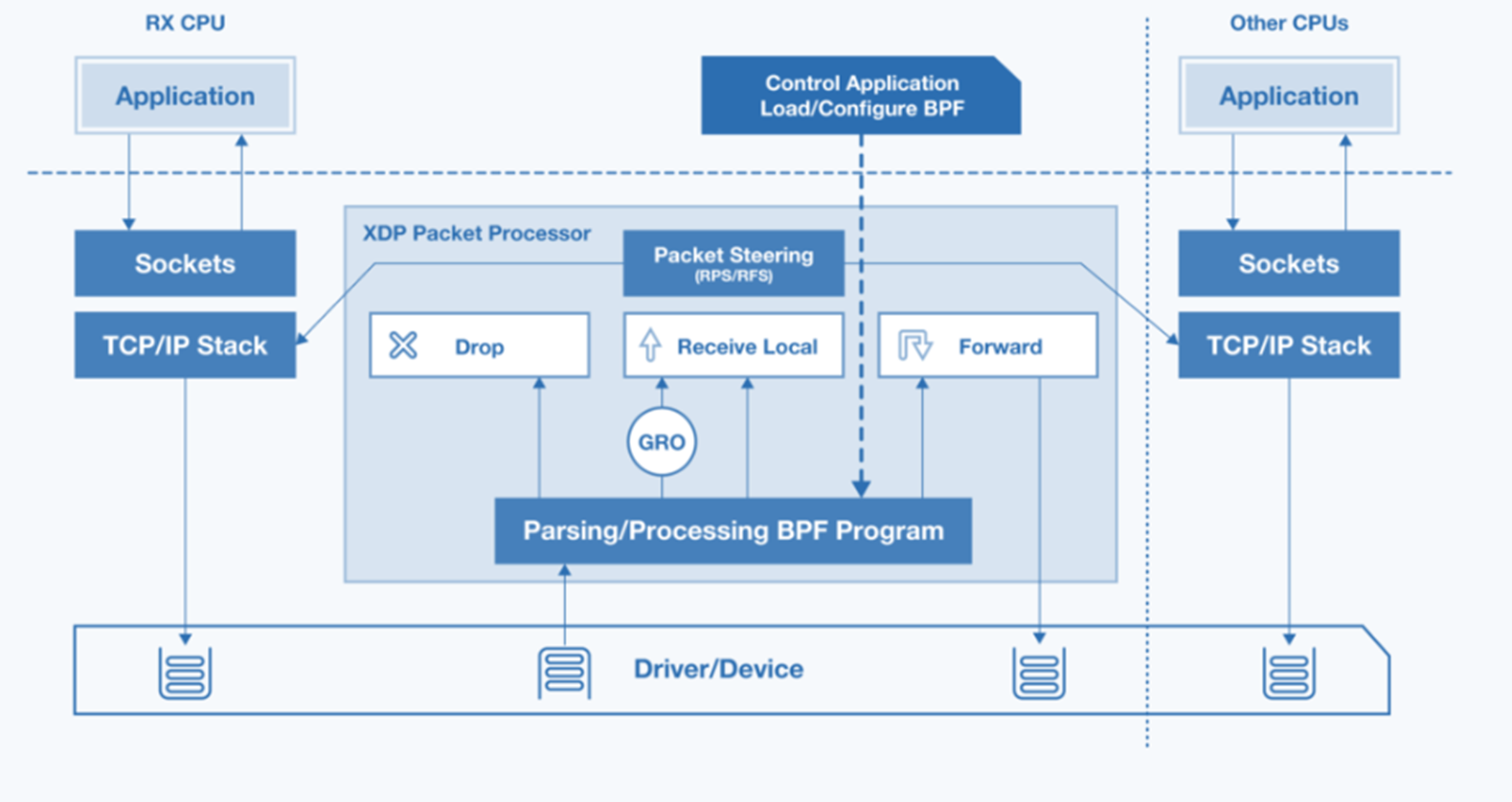

The ECI Linux Intel® Ethernet kernel drivers support the native XDP API for the Intel® I210 Ethernet PCI controller and the Intel® TGL integrated Ethernet MAC (mGBE), used either from an ahead-of-time compiled eBPF program or through the AF_XDP socket API.

This allows industrial network engineers to offload a significant part of UADP ETH, EtherCAT or PROFINET RT/IRT Layer 2 frame encoding/decoding to eBPF programs, without requiring any microcode knowledge or understanding of the Ethernet controller architecture.

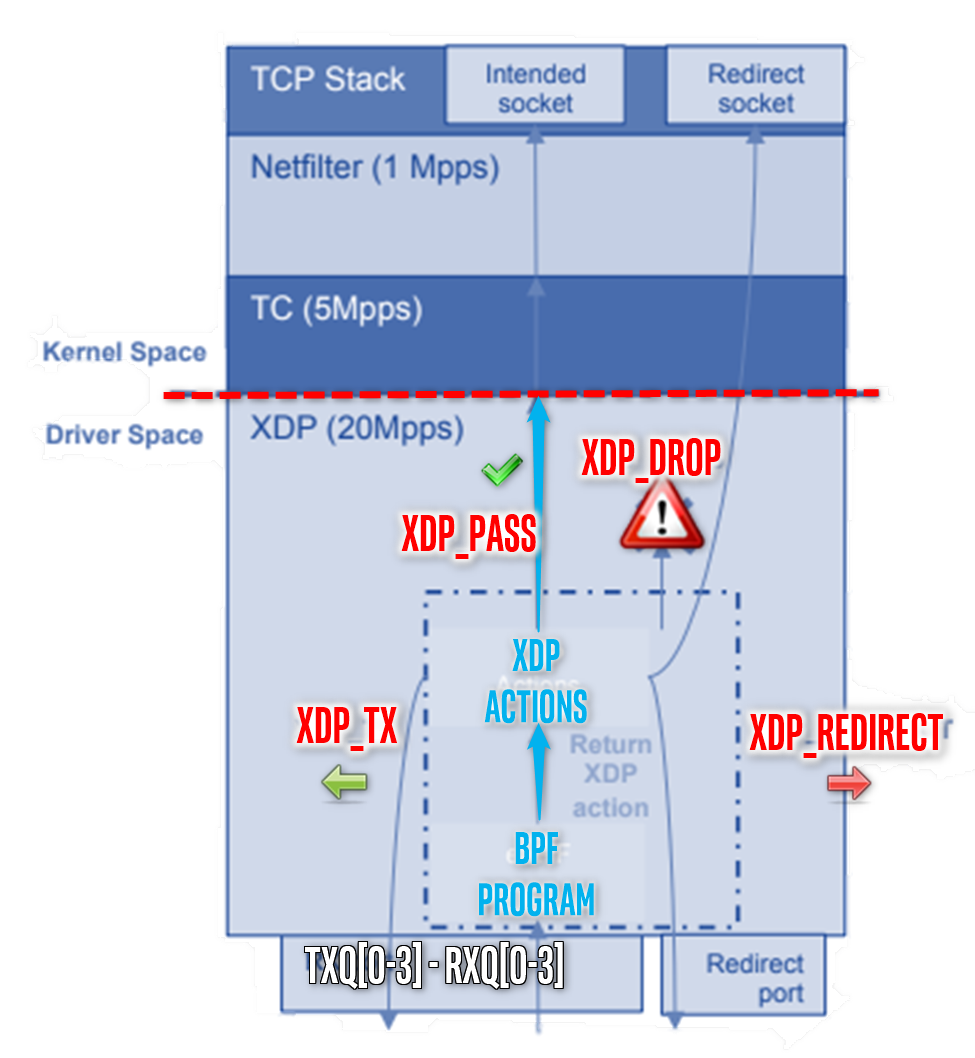

In this configuration, the ECI Intel® Ethernet kernel modules are compiled to handle XDP actions, allowing packets to be reflected, filtered or redirected without traversing the networking stack:

eBPF programs classify/modify traffic and return XDP actions:

XDP_PASS

XDP_DROP

XDP_TX

XDP_REDIRECT

XDP_ABORTED
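The verdict values are plain integers from enum xdp_action in the UAPI header <linux/bpf.h>, which is also why the correct spelling is XDP_ABORTED. A user-space tool that reports or configures verdicts (for example a statistics printer) might map them to names as in this sketch; xdp_action_name() and xdp_action_from_name() are hypothetical helpers, not part of libbpf.

```c
#include <assert.h>
#include <string.h>
#include <linux/bpf.h> /* enum xdp_action: XDP_ABORTED = 0 ... XDP_REDIRECT */

/* Hypothetical helper: symbolic name of an XDP verdict. */
static const char *xdp_action_name(unsigned int action)
{
	switch (action) {
	case XDP_ABORTED:
		return "XDP_ABORTED";
	case XDP_DROP:
		return "XDP_DROP";
	case XDP_PASS:
		return "XDP_PASS";
	case XDP_TX:
		return "XDP_TX";
	case XDP_REDIRECT:
		return "XDP_REDIRECT";
	default:
		return "XDP_UNKNOWN";
	}
}

/* Hypothetical reverse lookup; returns -1 for names that are not verdicts. */
static int xdp_action_from_name(const char *name)
{
	unsigned int a;

	for (a = XDP_ABORTED; a <= XDP_REDIRECT; a++)
		if (strcmp(xdp_action_name(a), name) == 0)
			return (int)a;
	return -1;
}
```

For example, xdp_action_from_name("XDP_ABORT") returns -1, because only XDP_ABORTED is defined.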

Note

cls_bpf in Linux Traffic Control (TC) works in the same manner in kernel space.

The following table summarizes the eBPF offload XDP support available in the ECI Linux kernel.

| eBPF offload | v5.4.y functionality | Intel® I210/igb | Intel® TGL/stmmac | Intel® I225-LM/igc |
|---|---|---|---|---|
| XDP program features | XDP_DROP | Yes | Yes | Yes (experimental) |
| | XDP_PASS | Yes | Yes | Yes (experimental) |
| | XDP_TX | Yes | Yes | Yes (experimental) |
| | XDP_REDIRECT | Yes | Yes | Yes (experimental) |
| | XDP_ABORTED | Yes | Yes | Yes (experimental) |
| | Packet read access | Yes | Yes | Yes (experimental) |
| | Conditional statements | Yes | Yes | Yes (experimental) |
| | bpf_xdp_adjust_head() | Yes | Yes | Yes (experimental) |
| | bpf_get_prandom_u32() | Yes | Yes | Yes (experimental) |
| | bpf_perf_event_output() | Yes | Yes | Yes (experimental) |
| | Partial offload | Yes | Yes | Yes (experimental) |
| | RSS rx_queue_index select | Yes | Yes | Yes (experimental) |
| | bpf_xdp_adjust_tail() | Yes | Yes | Yes (experimental) |
| XDP map features | Offload ownership for maps | Yes | Yes | Yes (experimental) |
| | Hash maps | Yes | Yes | Yes (experimental) |
| | Array maps | Yes | Yes | Yes (experimental) |
| | bpf_map_lookup_elem() | Yes | Yes | Yes (experimental) |
| | bpf_map_update_elem() | Yes | Yes | Yes (experimental) |
| | bpf_map_delete_elem() | Yes | Yes | Yes (experimental) |
| | Atomic __sync_fetch_and_add | untested | untested | untested |
| | Map sharing between ports | untested | untested | untested |
| uarch optimization features | Localized packet cache | untested | untested | untested |
| | 32-bit BPF support | untested | untested | untested |
| | Localized maps | untested | untested | untested |

eBPF offload XDP on Intel® Ethernet Controllers can be enabled at ECI target build time (see Building ECI). At runtime, bring the interface up and set the number of combined channels and the RX/TX ring sizes:

```
# ifconfig eth3 10.11.12.1 up mtu 1500
# ethtool -L eth3 rx 0 tx 0 combined 4
# ethtool -G eth3 rx 2048 tx 2048
```

Note

The maximum number of igb_xdp_ring rings allowed for eBPF in "Driver mode" is 4 combined per Intel® Ethernet Controller I210 PCI device.

The following applications are built into the ECI image root file system:

Linux Intel® Ethernet Controller I210

igb XDP: commit 9cbc948b5a20 ("igb: add XDP support") driver patch set backported to kernel v5.4.y:

e1000 support for XDP_PASS and XDP_TX:

0001-e1000-add-initial-XDP-support.patch

0002-e1000-Remove-explicit-invocations-of-mmiowb.patch

igb/i210 support for XDP_DROP, XDP_PASS, XDP_TX and XDP_REDIRECT:

0003-igb-add-XDP-support.patch

0004-igb-XDP-xmit-back-fix-error-code.patch

0005-igb-avoid-transmit-queue-timeout-in-xdp-path.patch

0006-Add-xdp_query_prog-api.patch

[EXPERIMENTAL] intel-wired-lan 5.4.y backport of initial AF_XDP on igc/i225 (upstreaming in progress for v5.11), enabled by the opt-experimental.yml optional user-feature project configuration file:

0001-igc-Fix-igc_ptp_rx_pktstamp.patch

0002-igc-Remove-unused-argument-from-igc_tx_cmd_type.patch

0003-igc-Introduce-igc_rx_buffer_flip-helper.patch

0004-igc-Introduce-igc_get_rx_frame_truesize-helper.patch

0005-igc-Refactor-Rx-timestamp-handling.patch

0006-igc-Add-set-clear-large-buffer-helpers.patch

0007-igc-Add-initial-XDP-support.patch

0008-igc-Add-support-for-XDP_TX-action.patch

0009-igc-Add-support-for-XDP_REDIRECT-action.patch

0010-SQUASHME-backport-to-convert_to_xdp_frame.patch

0011-SQUASHME-too-many-arguments-to-function-xdp_rxq_info.patch

0012-SQUASHME-fix-xdp_do_flush-missing-and-ignore-frame_s.patch

0013-SQUASHME-igc-add-missing-xdp_query-callback-in-5.4-k.patch

[EXPERIMENTAL] intel-wired-lan 5.4.y backport enabling PCIe PTM for igc/i225 (upstreaming in progress for v5.11), enabled by the opt-experimental.yml optional user-feature project configuration file:

0014-Revert-PCI-Make-pci_enable_ptm-private.patch

0015-igc-Enable-PCIe-PTM.patch

0016-igc-Add-support-for-PTP-getcrosststamp.patch

[EXPERIMENTAL] intel-wired-lan 5.4.y backport adding PPS and SDP support for igc/i225 (upstreaming in progress for v5.11), enabled by the opt-experimental.yml optional user-feature project configuration file:

0017-igc-Enable-internal-i225-PPS.patch

0018-igc-enable-auxiliary-PHC-functions-for-the-i225.patch

Optionally, the under-development igb/i210 support for the XSK_UMEM API and XDP_ZEROCOPY can be reviewed and added manually to the distro:

0007-igb-add-AF_XDP-zero-copy-Rx-and-Tx-support.patch

0008-igb-add-XDP-ZC-descriptor-txtime-and-launchtime-ring.patch

Kernel configuration:

```
CONFIG_XDP_SOCKETS=y
CONFIG_BPF=y
CONFIG_CGROUP_BPF=y
CONFIG_BPF_SYSCALL=y
# CONFIG_BPF_JIT_ALWAYS_ON is not set
CONFIG_NETFILTER_XT_MATCH_BPF=m
CONFIG_NET_CLS_BPF=m
CONFIG_NET_ACT_BPF=m
CONFIG_TEST_BPF=m
CONFIG_BPFILTER=y
CONFIG_BPF_JIT=y
CONFIG_BPF_STREAM_PARSER=y
CONFIG_LWTUNNEL_BPF=y
CONFIG_HAVE_EBPF_JIT=y
CONFIG_BPF_EVENTS=y
# CONFIG_BPF_KPROBE_OVERRIDE is not set
```
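To confirm that a running kernel was built with the options above, one could scan its configuration text, for example the decompressed contents of /proc/config.gz (assuming CONFIG_IKCONFIG_PROC is enabled on the target). The sketch below checks a hypothetical subset of the listed options with exact line matches, so that CONFIG_BPF=y is never confused with CONFIG_BPF_JIT=y; the helper names are illustrative only.

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

/* True if `config` (kernel .config text) has a line exactly equal to `option`. */
static bool config_has_line(const char *config, const char *option)
{
	size_t opt_len = strlen(option);
	const char *line = config;

	while (*line) {
		const char *eol = strchr(line, '\n');
		size_t len = eol ? (size_t)(eol - line) : strlen(line);

		if (len == opt_len && memcmp(line, option, len) == 0)
			return true;
		if (!eol)
			break;
		line = eol + 1;
	}
	return false;
}

/* Hypothetical subset of the options listed above. */
static const char *const required_opts[] = {
	"CONFIG_BPF=y",
	"CONFIG_BPF_SYSCALL=y",
	"CONFIG_XDP_SOCKETS=y",
	"CONFIG_BPF_JIT=y",
};

/* Returns how many of the required options are missing from `config`. */
static int count_missing_xdp_options(const char *config)
{
	int missing = 0;
	size_t i;

	for (i = 0; i < sizeof(required_opts) / sizeof(required_opts[0]); i++)
		if (!config_has_line(config, required_opts[i]))
			missing++;
	return missing;
}
```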

Linux kernel-selftest (aligned with v5.4.61), installed under /usr/kernel-selftest/bpf: test suite programs for runtime eBPF and XDP API compliance.

BPF samples collection, referenced from https://github.com/xdp-project/xdp-tutorial and installed under /opt/xdp/bpf-samples: BPF sample applications for simple eBPF offload feature sanity checks, such as xdpdump in Generic mode (i.e. XDP_FLAGS_SKB_MODE) and Driver mode (i.e. XDP_FLAGS_DRV_MODE for direct-DMA igb_xdp_ring).

```
# ethtool -i eth3
driver: igb
version: 5.4.0-k
firmware-version: 3.25, 0x800005cf
expansion-rom-version:
bus-info: 0000:04:00.0
supports-statistics: yes
supports-test: yes
supports-eeprom-access: yes
supports-register-dump: yes
supports-priv-flags: yes
# ./xdpdump -i eth3 -h
xdpdump -i interface [OPTS]

OPTS:
 -h help
 -N Native Mode (XDPDRV)
 -S SKB Mode (XDPGENERIC)
 -x Show packet payload
# ./xdpdump -i eth3 -N
87661.930672 IP 0.0.0.0:68 > 255.255.255.255:67 UDP, length 548
87666.931007 IP 0.0.0.0:68 > 255.255.255.255:67 UDP, length 548
87670.754805 IP 0.0.0.0:68 > 255.255.255.255:67 UDP, length 297
87671.931312 IP 0.0.0.0:68 > 255.255.255.255:67 UDP, length 548
87676.931589 IP 0.0.0.0:68 > 255.255.255.255:67 UDP, length 548
```

Linux iproute2: check the installed ip version to ensure that it is newer than 2018-04. It is used to load and attach eBPF programs to XDP, or to set a TC classifier on a netdev.

```
# ip -V
ip utility, iproute2-ss190708
# ip link show dev eth3
6: eth3: <NO-CARRIER,BROADCAST,MULTICAST,DYNAMIC,UP> mtu 1500 xdp qdisc mq state DOWN mode DEFAULT group default qlen 1000
    link/ether 20:46:a1:03:69:7a brd ff:ff:ff:ff:ff:ff
    prog/xdp id 64 tag 5a94accd9bce3666
```
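The ip -V banner of this iproute2 build encodes its snapshot date as ssYYMMDD, so the "newer than 2018-04" requirement can be checked by comparing that number against 180401. This sketch assumes that banner format; later iproute2 releases print a plain version number instead (e.g. iproute2-5.10.0), which the hypothetical iproute2_snapshot() helper reports as -1 rather than guessing.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdio.h>
#include <string.h>

/* Returns the YYMMDD snapshot from an `ip -V` banner such as
 * "ip utility, iproute2-ss190708", or -1 if there is no "ss" tag. */
static long iproute2_snapshot(const char *banner)
{
	static const char tag[] = "iproute2-ss";
	const char *p = strstr(banner, tag);
	long date;

	if (!p || sscanf(p + strlen(tag), "%6ld", &date) != 1)
		return -1;
	return date;
}

/* "Newer than 2018-04" expressed as a YYMMDD comparison. */
static bool iproute2_recent_enough(const char *banner)
{
	return iproute2_snapshot(banner) >= 180401;
}
```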

| Package | Commit | URL |
|---|---|---|
| iproute2 | 74ea2526bfe6f3a5c605e49354fdf087fb18c192 | |

Linux libbpf: the upstream libbpf-next runtime library abstracts the API and offers complex mechanisms, such as offloading a BPF program through bpf_prog_load_xattr() calls and falling back when XDP_FLAGS_DRV_MODE is not set. The BPF header files are installed under /usr/include, together with the dynamic and static prebuilt BPF libraries that give user space programs access to the eBPF API.

| Package | Commit | URL |
|---|---|---|
| libbpf | ab067ed3710550c6d1b127aac6437f96f8f99447 | https://github.com/libbpf/libbpf/archive/ab067ed3710550c6d1b127aac6437f96f8f99447.zip |

Important

Please note that several patches are applied by the libbpf_git.bb Yocto rootfs build recipe on top of the upstream libbpf source code:

0001-libbpf-add-txtime-field-in-xdp_desc-struct.patch: adds a UAPI xdp_desc entry to set the AF_XDP packet txtime on NIC drivers that support the LaunchTime feature.

The following patch series, applied by meta-intel-tsn/recipes-connectivity/libbpf/libbpf_git.bbappend, backports upstream fixes for BTF-sanitization warnings in the bpf_prog_load_xattr() call:

0004-libbpf-Detect-minimal-BTF-support-and-skip-BTF-loadi.patch

0005-libbpf-Add-btf__set_fd-for-more-control-over-loaded-.patch

0006-libbpf-Improve-BTF-sanitization-handling.patch

0007-libbpf-Fix-memory-leak-and-optimize-BTF-sanitization.patch

Linux bpftool (aligned with v5.4.59) is a user space utility used for introspection and management of eBPF objects (maps and programs). It can:

List active BPF programs and maps

Interact with eBPF maps (lookups or updates)

Dump assembly code (JIT and pre-JIT)

```
# bpftool prog show
64: xdp  name process_packet  tag 5a94accd9bce3666  gpl
	loaded_at 2020-07-28T10:20:03+0000  uid 0
	xlated 880B  not jited  memlock 4096B  map_ids 5
# bpftool prog dump xlated id 64
   0: (79) r3 = *(u64 *)(r1 +8)
   1: (79) r2 = *(u64 *)(r1 +0)
   2: (b7) r5 = 0
   3: (7b) *(u64 *)(r10 -8) = r5
   4: (7b) *(u64 *)(r10 -16) = r5
   5: (7b) *(u64 *)(r10 -24) = r5
   6: (7b) *(u64 *)(r10 -32) = r5
   7: (7b) *(u64 *)(r10 -40) = r5
   8: (7b) *(u64 *)(r10 -48) = r5
   9: (bf) r0 = r2
  10: (07) r0 += 14
  11: (2d) if r0 > r3 goto pc+96
  12: (71) r4 = *(u8 *)(r2 +12)
  13: (71) r6 = *(u8 *)(r2 +13)
  14: (67) r6 <<= 8
  15: (4f) r6 |= r4
  16: (dc) r6 = be16 r6
  17: (6b) *(u16 *)(r10 -12) = r6
  18: (15) if r6 == 0x86dd goto pc+16
  19: (b7) r4 = 14
  20: (55) if r6 != 0x800 goto pc+42
  21: (bf) r4 = r2
  22: (07) r4 += 34
  23: (2d) if r4 > r3 goto pc+84
  24: (71) r4 = *(u8 *)(r0 +0)
  25: (57) r4 &= 15
  26: (55) if r4 != 0x5 goto pc+81
  27: (61) r4 = *(u32 *)(r2 +26)
...
# bpftool map
5: perf_event_array  name perf_map  flags 0x0
	key 4B  value 4B  max_entries 128  memlock 4096B
```

libbpf XDP API helper overview¶

User space programs can interact with the offloaded program in the same way as with normal eBPF programs. The kernel tries to offload the program if a non-zero ifindex is supplied to the Linux bpf() syscall when loading the program.

Maps can be accessed from user space using the standard eBPF map lookup/update commands.

BPF helpers, declared in include/uapi/linux/bpf.h, add functionality that would otherwise be difficult to implement in an eBPF program:

Key XDP map helpers:

bpf_map_lookup_elem

bpf_map_update_elem

bpf_map_delete_elem

bpf_redirect_map

Head Extend:

bpf_xdp_adjust_head

bpf_xdp_adjust_meta

Others

bpf_perf_event_output

bpf_ktime_get_ns

bpf_trace_printk

bpf_tail_call

bpf_redirect

This section provides the steps for creating a basic IPv4/IPv6 UDP packet processing eBPF XDP program:

Create the eBPF offload XDP program <..._kern>.c that defines SEC("maps") XDP event objects of type bpf_map_def, so that user space can query the map object through the kernel bpf_map_lookup_elem() API calls, which are subsequently relayed to the igb driver. In this example, the eBPF program communicates with user space through the kernel's perf tracing events:

```c
struct bpf_map_def SEC("maps") perf_map = {
	.type = BPF_MAP_TYPE_PERF_EVENT_ARRAY,
	.key_size = sizeof(__u32),
	.value_size = sizeof(__u32),
	.max_entries = MAX_CPU,
};
```

Declare all sub-functions as static __always_inline:

```c
static __always_inline bool parse_udp(void *data, __u64 off, void *data_end,
				      struct pkt_meta *pkt)
{
	struct udphdr *udp;

	udp = data + off;
	if (udp + 1 > data_end)
		return false;

	pkt->port16[0] = udp->source;
	pkt->port16[1] = udp->dest;
	return true;
}
```
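The verifier only accepts packet reads that are preceded by a bounds check such as udp + 1 > data_end. To exercise the same parsing logic on a host without a kernel, a user-space mirror can compare lengths instead of pointers (avoiding out-of-range pointers in ordinary C) and copy the header with memcpy() to sidestep unaligned access. host_parse_udp() is a hypothetical test helper, not part of the sample.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>
#include <arpa/inet.h> /* ntohs() */
#include <linux/udp.h> /* struct udphdr: source, dest, len, check */

/* Host-side mirror of parse_udp(): reads the UDP header at `off` only if the
 * whole header lies inside [data, data_end). Ports are returned in host
 * byte order (parse_udp() keeps them in network order). */
static bool host_parse_udp(const void *data, size_t off, const void *data_end,
			   uint16_t ports[2])
{
	size_t avail = (size_t)((const uint8_t *)data_end - (const uint8_t *)data);
	struct udphdr udp;

	if (off > avail || avail - off < sizeof(udp))
		return false;

	memcpy(&udp, (const uint8_t *)data + off, sizeof(udp));
	ports[0] = ntohs(udp.source);
	ports[1] = ntohs(udp.dest);
	return true;
}
```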

Declare the SEC("xdp") program entry, which takes xdp_md *ctx as input and returns the appropriate XDP action as output (XDP_PASS in the example below). When ingress packets enter the XDP program, packet metadata is extracted and stored in a data structure. The XDP program sends this metadata, along with the packet contents, to the ring buffer referenced by the eBPF perf event map, using the current CPU index as the key.

```c
SEC("xdp")
int process_packet(struct xdp_md *ctx)
{
	void *data_end = (void *)(long)ctx->data_end;
	void *data = (void *)(long)ctx->data;
	struct ethhdr *eth = data;
	struct pkt_meta pkt = {};
	__u32 off;

	/* parse packet for IP addresses and ports */
	off = sizeof(struct ethhdr);
	if (data + off > data_end)
		return XDP_PASS;

	pkt.l3_proto = bpf_ntohs(eth->h_proto);

	/* parse_ip4() and parse_ip6() are defined in the sample (not shown) */
	if (pkt.l3_proto == ETH_P_IP) {
		if (!parse_ip4(data, off, data_end, &pkt))
			return XDP_PASS;
		off += sizeof(struct iphdr);
	} else if (pkt.l3_proto == ETH_P_IPV6) {
		if (!parse_ip6(data, off, data_end, &pkt))
			return XDP_PASS;
		off += sizeof(struct ipv6hdr);
	}

	if (data + off > data_end)
		return XDP_PASS;

	/* obtain port numbers for UDP traffic */
	if (pkt.l4_proto == IPPROTO_UDP) {
		if (!parse_udp(data, off, data_end, &pkt))
			return XDP_PASS;
		off += sizeof(struct udphdr);
	} else {
		pkt.port16[0] = 0;
		pkt.port16[1] = 0;
	}

	pkt.pkt_len = data_end - data;
	pkt.data_len = data_end - data - off;

	/* upper bits of flags: number of packet bytes appended to the sample */
	bpf_perf_event_output(ctx, &perf_map,
			      (__u64)pkt.pkt_len << 32 | BPF_F_CURRENT_CPU,
			      &pkt, sizeof(pkt));
	return XDP_PASS;
}
```
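The flags argument of bpf_perf_event_output() in process_packet() packs two values: the low 32 bits (BPF_F_INDEX_MASK) select the ring, here BPF_F_CURRENT_CPU, and the bits covered by BPF_F_CTXLEN_MASK tell the kernel how many bytes of the packet itself to append after the pkt_meta record. A host-side sketch of that encoding, using the constants from the UAPI header <linux/bpf.h> (the helper names are hypothetical):

```c
#include <assert.h>
#include <stdint.h>
#include <linux/bpf.h> /* BPF_F_INDEX_MASK, BPF_F_CURRENT_CPU, BPF_F_CTXLEN_MASK */

/* Same expression as in process_packet(): packet length in the upper bits,
 * "use the current CPU's ring" in the lower 32 bits. */
static uint64_t perf_output_flags(uint32_t pkt_len)
{
	return ((uint64_t)pkt_len << 32) | BPF_F_CURRENT_CPU;
}

/* Number of packet bytes the kernel will append after the record. */
static uint32_t perf_output_ctx_len(uint64_t flags)
{
	return (uint32_t)((flags & BPF_F_CTXLEN_MASK) >> 32);
}
```

Because BPF_F_CTXLEN_MASK is 20 bits wide, the appended length must stay at or below 0xfffff bytes, which a single XDP frame does.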

Compile the XDP program into an eBPF object file, on the build system or in a Yocto build recipe, using the following commands (clang emits LLVM IR, which llc then compiles for the BPF target):

```
# clang -S -target bpf \
    -D __BPF_TRACING__ \
    -I${LIBBPF_DIR}/root/usr/include/ \
    -Wall \
    -Wno-unused-value \
    -Wno-pointer-sign \
    -Wno-compare-distinct-pointer-types \
    -Werror \
    -O2 -emit-llvm -c -g <..._kern>.c -o <..._kern>.ll
# llc -march=bpf -filetype=obj -o <..._kern>.o <..._kern>.ll
```

Create the <.._user>.c user-space main() program that calls the bpf_prog_load_xattr() API to load the LLVM-compiled <..._kern>.o eBPF XDP program, then attaches it in either XDP_FLAGS_SKB_MODE or XDP_FLAGS_DRV_MODE through the xdp_flags input parameter of the bpf_set_link_xdp_fd() API:

```c
/* xdp_flags, ifindex, dump_payload and unload_prog() are defined
 * elsewhere in the sample (not shown). */
static void usage(const char *prog)
{
	fprintf(stderr,
		"%s -i interface [OPTS]\n\n"
		"OPTS:\n"
		" -h help\n"
		" -N Native Mode (XDPDRV)\n"
		" -S SKB Mode (XDPGENERIC)\n"
		" -x Show packet payload\n",
		prog);
}

int main(int argc, char **argv)
{
	static struct perf_event_mmap_page *mem_buf[MAX_CPU];
	struct bpf_prog_load_attr prog_load_attr = {
		.prog_type = BPF_PROG_TYPE_XDP,
		.file = "xdpdump_kern.o",
	};
	struct bpf_map *perf_map;
	struct bpf_object *obj;
	int sys_fds[MAX_CPU];
	int perf_map_fd;
	int prog_fd;
	int n_cpus;
	int opt;

	xdp_flags = XDP_FLAGS_DRV_MODE; /* default to DRV */
	n_cpus = get_nprocs();
	dump_payload = 0;

	/* mem_buf[] and sys_fds[] hold one entry per CPU */
	if (n_cpus > MAX_CPU) {
		printf("error, more CPUs than MAX_CPU\n");
		return -1;
	}

	if (optind == argc) {
		usage(basename(argv[0]));
		return -1;
	}

	while ((opt = getopt(argc, argv, "hi:NSx")) != -1) {
		switch (opt) {
		case 'h':
			usage(basename(argv[0]));
			return 0;
		case 'i':
			ifindex = if_nametoindex(optarg);
			break;
		case 'N':
			xdp_flags = XDP_FLAGS_DRV_MODE;
			break;
		case 'S':
			xdp_flags = XDP_FLAGS_SKB_MODE;
			break;
		case 'x':
			dump_payload = 1;
			break;
		default:
			printf("incorrect usage\n");
			usage(basename(argv[0]));
			return -1;
		}
	}

	if (ifindex == 0) {
		printf("error, invalid interface\n");
		return -1;
	}

	/* use libbpf to load program */
	if (bpf_prog_load_xattr(&prog_load_attr, &obj, &prog_fd)) {
		printf("error with loading file\n");
		return -1;
	}

	if (prog_fd < 0) {
		printf("error creating prog_fd\n");
		return -1;
	}

	signal(SIGINT, unload_prog);
	signal(SIGTERM, unload_prog);

	/* use libbpf to link program to interface with corresponding flags */
	if (bpf_set_link_xdp_fd(ifindex, prog_fd, xdp_flags) < 0) {
		printf("error setting fd onto xdp\n");
		return -1;
	}

	perf_map = bpf_object__find_map_by_name(obj, "perf_map");
	perf_map_fd = bpf_map__fd(perf_map);

	if (perf_map_fd < 0) {
		printf("error cannot find map\n");
		return -1;
	}

	/* Initialize perf rings */
	if (setup_perf_poller(perf_map_fd, sys_fds, n_cpus, &mem_buf[0]))
		return -1;

	event_poller(mem_buf, sys_fds, n_cpus);

	return 0;
}
```

Attach the perf event rings to the perf_map_fd file handle, obtained with the bpf_object__find_map_by_name() API, through bpf_map_update_elem() calls:

```c
int setup_perf_poller(int perf_map_fd, int *sys_fds, int cpu_total,
		      struct perf_event_mmap_page **mem_buf)
{
	struct perf_event_attr attr = {
		.sample_type = PERF_SAMPLE_RAW | PERF_SAMPLE_TIME,
		.type = PERF_TYPE_SOFTWARE,
		.config = PERF_COUNT_SW_BPF_OUTPUT,
		.wakeup_events = 1,
	};
	int mmap_size;
	int pmu;
	int n;

	/* one metadata page + PAGE_CNT data pages per ring */
	mmap_size = getpagesize() * (PAGE_CNT + 1);

	for (n = 0; n < cpu_total; n++) {
		/* create perf fd for each CPU */
		pmu = sys_perf_event_open(&attr, -1, n, -1, 0);
		if (pmu < 0) {
			printf("error setting up perf fd\n");
			return 1;
		}
		/* enable PERF events on the fd */
		ioctl(pmu, PERF_EVENT_IOC_ENABLE, 0);

		/* give fd a memory buf to write to */
		mem_buf[n] = mmap(NULL, mmap_size, PROT_READ | PROT_WRITE,
				  MAP_SHARED, pmu, 0);
		if (mem_buf[n] == MAP_FAILED) {
			printf("error creating mmap\n");
			return 1;
		}
		/* point eBPF map entries to fd (not inside assert(), which
		 * is compiled out when NDEBUG is defined) */
		if (bpf_map_update_elem(perf_map_fd, &n, &pmu, BPF_ANY)) {
			printf("error updating perf map\n");
			return 1;
		}
		sys_fds[n] = pmu;
	}
	return 0;
}
```
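The mmap_size computed above reflects a perf ring-buffer rule: the mapping is one metadata page (struct perf_event_mmap_page) followed by a data area whose page count must be a power of two, otherwise the kernel rejects the mmap(). PAGE_CNT is defined elsewhere in the sample (not shown), so a small host-side check like this hypothetical helper can catch a bad value early:

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

static bool is_pow2(size_t n)
{
	return n != 0 && (n & (n - 1)) == 0;
}

/* Length to pass to mmap() for a ring with page_cnt data pages, or 0 if
 * page_cnt is not a power of two (perf would reject the mapping). */
static size_t perf_ring_mmap_size(size_t page_size, size_t page_cnt)
{
	if (!is_pow2(page_cnt))
		return 0;
	return page_size * (page_cnt + 1); /* + 1 metadata page */
}
```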

Define the event_poller() loop, which polls the perf event rings for perf_event_header events and timestamps by registering the event_received() and event_printer() callbacks with the bpf_perf_event_read_simple() API:

```c
struct pkt_meta {
	union {
		__be32 src;
		__be32 srcv6[4];
	};
	union {
		__be32 dst;
		__be32 dstv6[4];
	};
	__u16 port16[2];
	__u16 l3_proto;
	__u16 l4_proto;
	__u16 data_len;
	__u16 pkt_len;
	__u32 seq;
};

struct perf_event_sample {
	struct perf_event_header header;
	__u64 timestamp;
	__u32 size;
	struct pkt_meta meta;
	__u8 pkt_data[];	/* pkt_len packet bytes appended by the kernel */
};

int event_printer(struct perf_event_sample *sample);

static enum bpf_perf_event_ret event_received(struct perf_event_header *hdr,
					      void *private_data)
{
	int (*print_fn)(struct perf_event_sample *) = private_data;
	struct perf_event_sample *sample = (struct perf_event_sample *)hdr;

	if (hdr->type == PERF_RECORD_SAMPLE)
		return print_fn(sample);
	return LIBBPF_PERF_EVENT_CONT;
}

int event_poller(struct perf_event_mmap_page **mem_buf, int *sys_fds,
		 int cpu_total)
{
	enum bpf_perf_event_ret res = LIBBPF_PERF_EVENT_CONT;
	struct pollfd poll_fds[MAX_CPU];
	void *buf = NULL;
	size_t len = 0;
	int total_size;
	int pagesize;
	int n;

	/* Create pollfd struct to contain poller info */
	for (n = 0; n < cpu_total; n++) {
		poll_fds[n].fd = sys_fds[n];
		poll_fds[n].events = POLLIN;
	}

	pagesize = getpagesize();
	total_size = PAGE_CNT * pagesize;
	while (res == LIBBPF_PERF_EVENT_CONT) {
		/* Poll fds for events, 250ms timeout */
		poll(poll_fds, cpu_total, 250);

		for (n = 0; n < cpu_total; n++) {
			if (!poll_fds[n].revents) /* no events on this CPU */
				continue;
			res = bpf_perf_event_read_simple(mem_buf[n], total_size,
							 pagesize, &buf, &len,
							 event_received,
							 event_printer);
			if (res != LIBBPF_PERF_EVENT_CONT)
				break;
		}
	}
	free(buf);
	return res == LIBBPF_PERF_EVENT_ERROR ? -1 : 0;
}
```
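bpf_perf_event_read_simple() hands event_received() a pointer to the raw perf record, so struct perf_event_sample only works if its layout matches a PERF_SAMPLE_TIME | PERF_SAMPLE_RAW record: an 8-byte perf_event_header, the u64 time, the u32 raw size, and then the raw bytes written by bpf_perf_event_output() (pkt_meta followed by the appended packet data). This host-side sketch copies the structs above, declaring pkt_data as a flexible array member (an assumption, since the packet length is only known at run time), so their offsets can be checked:

```c
#include <assert.h>
#include <stddef.h>
#include <linux/types.h>
#include <linux/perf_event.h> /* struct perf_event_header */

struct pkt_meta {
	union { __be32 src; __be32 srcv6[4]; };
	union { __be32 dst; __be32 dstv6[4]; };
	__u16 port16[2];
	__u16 l3_proto;
	__u16 l4_proto;
	__u16 data_len;
	__u16 pkt_len;
	__u32 seq;
};

struct perf_event_sample {
	struct perf_event_header header;
	__u64 timestamp;
	__u32 size;
	struct pkt_meta meta;
	__u8 pkt_data[];
};

/* The raw data starts right after the u32 size field of the record. */
enum {
	RAW_DATA_OFFSET = sizeof(struct perf_event_header) + sizeof(__u64) +
			  sizeof(__u32)
};
```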

In this example, when an event is received, the event_received() callback passes the sample to event_printer(), which prints the perf event's pkt_meta metadata and timestamp to the terminal. The user can also request (-x) that the packet contents pkt_data be dumped in hexadecimal format:

```c
void meta_print(struct pkt_meta meta, __u64 timestamp)
{
	char src_str[INET6_ADDRSTRLEN] = "";
	char dst_str[INET6_ADDRSTRLEN] = "";
	char l3_str[32];
	char l4_str[32];

	switch (meta.l3_proto) {
	case ETH_P_IP:
		strcpy(l3_str, "IP");
		inet_ntop(AF_INET, &meta.src, src_str, INET_ADDRSTRLEN);
		inet_ntop(AF_INET, &meta.dst, dst_str, INET_ADDRSTRLEN);
		break;
	case ETH_P_IPV6:
		strcpy(l3_str, "IP6");
		inet_ntop(AF_INET6, &meta.srcv6, src_str, INET6_ADDRSTRLEN);
		inet_ntop(AF_INET6, &meta.dstv6, dst_str, INET6_ADDRSTRLEN);
		break;
	case ETH_P_ARP:
		strcpy(l3_str, "ARP");
		break;
	default:
		snprintf(l3_str, sizeof(l3_str), "%04x", meta.l3_proto);
	}

	switch (meta.l4_proto) {
	case IPPROTO_TCP:
		snprintf(l4_str, sizeof(l4_str), "TCP seq %u", ntohl(meta.seq));
		break;
	case IPPROTO_UDP:
		strcpy(l4_str, "UDP");
		break;
	case IPPROTO_ICMP:
		strcpy(l4_str, "ICMP");
		break;
	default:
		strcpy(l4_str, "");
	}

	printf("%llu.%06llu %s %s:%d > %s:%d %s, length %d\n",
	       (unsigned long long)(timestamp / NS_IN_SEC),
	       (unsigned long long)((timestamp % NS_IN_SEC) / 1000),
	       l3_str,
	       src_str, ntohs(meta.port16[0]),
	       dst_str, ntohs(meta.port16[1]),
	       l4_str, meta.data_len);
}

int event_printer(struct perf_event_sample *sample)
{
	int i;

	meta_print(sample->meta, sample->timestamp);

	if (dump_payload) { /* print payload hex */
		printf("\t");
		for (i = 0; i < sample->meta.pkt_len; i++) {
			printf("%02x", sample->pkt_data[i]);

			if ((i + 1) % 16 == 0)
				printf("\n\t");
			else if ((i + 1) % 2 == 0)
				printf(" ");
		}
		printf("\n");
	}
	return LIBBPF_PERF_EVENT_CONT;
}
```
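meta_print() splits the nanosecond perf timestamp into seconds and microseconds. As a standalone sketch (format_ts() and NS_PER_SEC are hypothetical names), the conversion with a fixed-width unsigned format looks like this; the %06 padding is what keeps 5 microseconds from printing as ".5":

```c
#include <assert.h>
#include <inttypes.h>
#include <stddef.h>
#include <stdio.h>

#define NS_PER_SEC UINT64_C(1000000000)

/* Formats a nanosecond timestamp as "<sec>.<usec>" like meta_print().
 * Returns the snprintf() result (number of characters produced). */
static int format_ts(char *buf, size_t len, uint64_t ns)
{
	return snprintf(buf, len, "%" PRIu64 ".%06" PRIu64,
			ns / NS_PER_SEC, (ns % NS_PER_SEC) / 1000);
}
```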

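Compile and link the program against libbpf and libelf using GCC. The libraries are listed after the source file so that the linker resolves them, and -c is omitted because it would stop after compilation without linking:

```
# gcc -I${LIBBPF_DIR}/root/usr/include/ -I../headers/ -L${LIBBPF_DIR} \
    <.._user>.c -o <.._user> -lbpf -lelf
```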

RX Receive Side Scaling (RSS) Queue¶

The igb.ko and stmmac-pci.ko Linux drivers allow the offloaded eBPF XDP program to choose the Receive Side Scaling (RSS) queue used to transfer packets up to user space.

For example, in the program below, all received packets are placed onto queue 1. This can be used to optimize the I210 RX queue distribution per incoming network traffic class.

```c
SEC("xdp")
int process_packet(struct xdp_md *ctx)
{
	ctx->rx_queue_index = 1;
	...
	return XDP_PASS;
}
```
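The queue written to ctx->rx_queue_index can also be computed per packet. As an illustration only, the hypothetical policy below maps the 802.1Q priority code point (PCP) of a VLAN-tagged frame onto the 4 combined queues mentioned earlier for the I210. It is written as plain host C so that it can be tested without a NIC; in an XDP program the same arithmetic would follow the usual data_end bounds checks before the result is assigned to ctx->rx_queue_index.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define VLAN_TPID_8021Q 0x8100 /* 802.1Q tag protocol identifier */

/* Hypothetical policy: higher PCP (0..7) goes to a higher RX queue.
 * Untagged or truncated frames go to queue 0. */
static unsigned int pcp_to_rx_queue(const uint8_t *frame, size_t len,
				    unsigned int n_queues)
{
	unsigned int tpid, pcp;

	/* dst MAC (6) + src MAC (6) + TPID (2) + TCI (2) */
	if (n_queues == 0 || len < 16)
		return 0;
	tpid = ((unsigned int)frame[12] << 8) | frame[13];
	if (tpid != VLAN_TPID_8021Q)
		return 0;
	pcp = frame[14] >> 5; /* top 3 bits of the TCI */
	return pcp * n_queues / 8;
}
```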

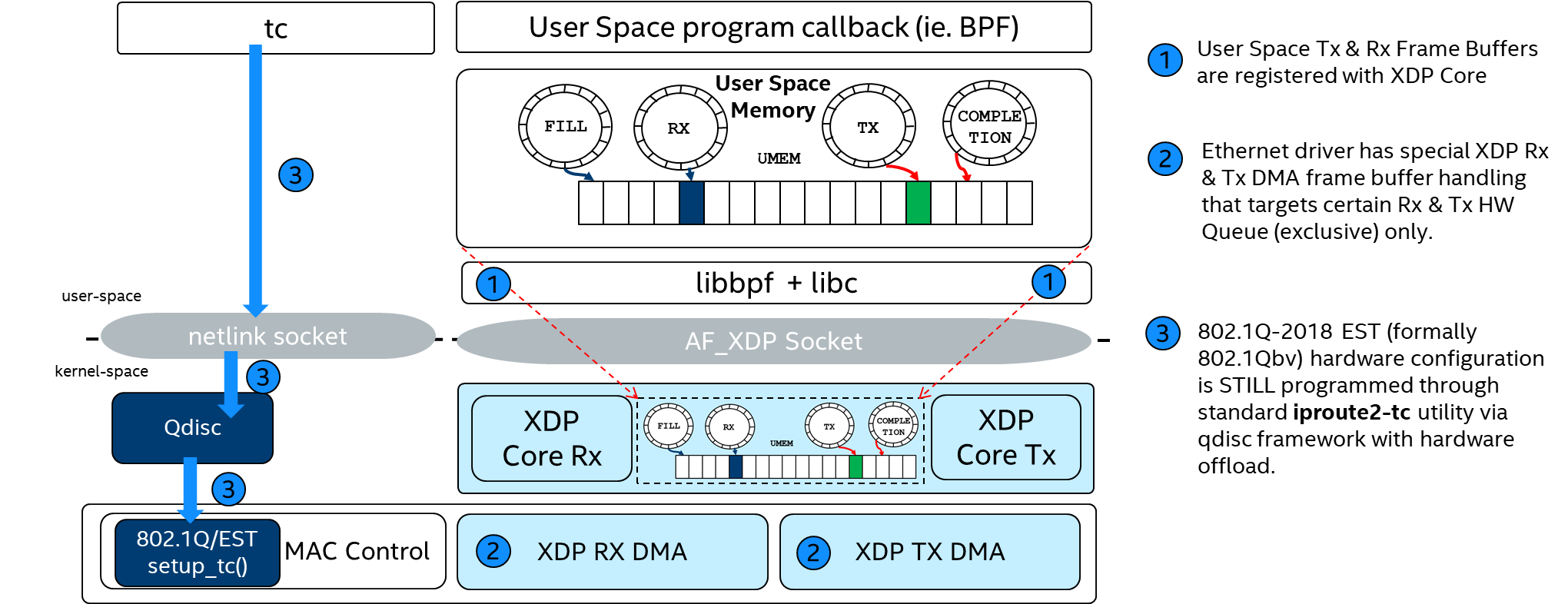

AF_XDP socket (CONFIG_XDP_SOCKETS)¶

An AF_XDP socket (XSK) is created with the normal socket() syscall. Associated with each XSK are two rings: the RX ring and the TX ring. A socket can receive packets on the RX ring and it can send packets on the TX ring. These rings are registered and sized with the XDP_RX_RING and XDP_TX_RING setsockopt() options, respectively. It is mandatory to have at least one of these rings for each socket. An RX or TX descriptor ring points to a data buffer in a memory area called a UMEM. RX and TX can share the same UMEM so that a packet does not have to be copied between RX and TX. Moreover, if a packet needs to be kept for a while due to a possible retransmit, the descriptor that points to that packet can be changed to point to another packet and reused right away. This again avoids copying data.
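The UMEM sharing described above can be modelled without a kernel. In this sketch (all names hypothetical, not the libbpf xsk API), the UMEM is NUM_FRAMES fixed-size frames and a descriptor is only an offset (addr) into it; forwarding a received frame to TX reuses its addr instead of copying bytes, and the frame returns to the free pool once the application is done with it. A real AF_XDP application also exchanges these addresses with the kernel through the UMEM fill and completion rings, which this model leaves out.

```c
#include <assert.h>
#include <stdint.h>

#define NUM_FRAMES 8    /* hypothetical UMEM size in frames */
#define FRAME_SIZE 4096 /* bytes per frame */

/* Stack of free frame offsets within the single UMEM buffer. */
struct umem_pool {
	uint64_t free_addrs[NUM_FRAMES];
	unsigned int n_free;
};

static void umem_pool_init(struct umem_pool *p)
{
	unsigned int i;

	for (i = 0; i < NUM_FRAMES; i++)
		p->free_addrs[i] = (uint64_t)i * FRAME_SIZE;
	p->n_free = NUM_FRAMES;
}

/* Takes a free frame; returns UINT64_MAX when the pool is empty. */
static uint64_t umem_alloc(struct umem_pool *p)
{
	if (p->n_free == 0)
		return UINT64_MAX;
	return p->free_addrs[--p->n_free];
}

/* Gives a frame back, e.g. after its TX completion. */
static void umem_free(struct umem_pool *p, uint64_t addr)
{
	assert(p->n_free < NUM_FRAMES);
	p->free_addrs[p->n_free++] = addr;
}
```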

The CONFIG_XDP_SOCKETS kernel option allows the igb.ko and stmmac-pci.ko Linux drivers to pass packets from the eBPF XDP program up to user space through AF_XDP sockets.

Note

Please note that the CONFIG_XDP_SOCKETS Linux UAPI is patched in ECI to allow the LaunchTime hardware offload capability on stmmac-pci.ko (experimental only on igb.ko) from the Linux AF_XDP API:

```
From 3db3767d1b06ac767b7752d2ff8d488cd191c46e Mon Sep 17 00:00:00 2001
From: "Mohamad Azman, Syaza Athirah" <syaza.athirah.mohamad.azman@intel.com>
Date: Thu, 16 Jul 2020 18:00:51 -0400
Subject: [PATCH] libbpf: add txtime field in xdp_desc struct

Add a xdp_desc entry to specify packet LaunchTime for drivers that support the feature.

Signed-off-by: Mohamad Azman, Syaza Athirah <syaza.athirah.mohamad.azman@intel.com>
---
 include/uapi/linux/if_xdp.h | 1 +
 1 file changed, 1 insertion(+)

diff --git a/include/uapi/linux/if_xdp.h b/include/uapi/linux/if_xdp.h
index be328c5..0e8ecdf 100644
--- a/include/uapi/linux/if_xdp.h
+++ b/include/uapi/linux/if_xdp.h
@@ -101,6 +101,7 @@ struct xdp_desc {
 	__u64 addr;
 	__u32 len;
 	__u32 options;
+	__u64 txtime;
 };

 /* UMEM descriptor is __u64 */
--
2.17.1
```
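With this patch, an AF_XDP transmitter fills txtime alongside addr and len in each TX descriptor. The sketch below mirrors the patched layout in a locally named struct (the stock <linux/if_xdp.h> has no txtime field) and computes a launch time aligned to the next cycle boundary, a common pattern for cyclic TSN traffic. The struct name, the helpers, and the assumption that txtime is an absolute time in nanoseconds in the clock domain the driver expects are illustrative, not taken from the ECI sources.

```c
#include <assert.h>
#include <stdint.h>

/* Local mirror of the ECI-patched struct xdp_desc (hypothetical name). */
struct xdp_desc_txtime {
	uint64_t addr;    /* frame offset in the UMEM */
	uint32_t len;     /* frame length in bytes */
	uint32_t options;
	uint64_t txtime;  /* assumed: absolute launch time in ns */
};

_Static_assert(sizeof(struct xdp_desc_txtime) == 24,
	       "txtime adds 8 bytes to the 16-byte descriptor");

/* First cycle boundary base + k * cycle that lies strictly after now. */
static uint64_t next_launch_time(uint64_t base, uint64_t cycle, uint64_t now)
{
	assert(cycle > 0);
	if (now < base)
		return base;
	return base + ((now - base) / cycle + 1) * cycle;
}

static void fill_tx_desc(struct xdp_desc_txtime *d, uint64_t addr,
			 uint32_t len, uint64_t launch_ns)
{
	d->addr = addr;
	d->len = len;
	d->options = 0;
	d->txtime = launch_ns;
}
```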

The Linux 4.19 kernel BPF reference sample xdpsock_user is available in the ECI image runtime under /opt/xdp/bpf-examples/xdpsock:

```
# ./xdpsock --help
  Usage: xdpsock [OPTIONS]
  Options:
  -r, --rxdrop         Discard all incoming packets (default)
  -t, --txonly         Only send packets
  -l, --l2fwd          MAC swap L2 forwarding
  -i, --interface=n    Run on interface n
  -q, --queue=n        Use queue n (default 0)
  -p, --poll           Use poll syscall
  -s, --shared-buffer  Use shared packet buffer
  -S, --xdp-skb=n      Use XDP skb-mod
  -N, --xdp-native=n   Enfore XDP native mode
  -n, --interval=n     Specify statistics update interval (default 1 sec).

# ./xdpsock -i eth3 -q 1 -N

 sock0@eth3:1 rxdrop xdp-drv
                pps         pkts        1.01
rx              0           0
tx              0           0

 sock0@eth3:1 rxdrop xdp-drv
                pps         pkts        1.00
rx              0           0
tx              0           0
```

```
# bpftool prog show
17: cgroup_skb  tag 6deef7357e7b4530  gpl
	loaded_at 2019-10-25T06:12:41+0000  uid 0
	xlated 64B  not jited  memlock 4096B
18: cgroup_skb  tag 6deef7357e7b4530  gpl
	loaded_at 2019-10-25T06:12:41+0000  uid 0
	xlated 64B  not jited  memlock 4096B
19: cgroup_skb  tag 6deef7357e7b4530  gpl
	loaded_at 2019-10-25T06:12:41+0000  uid 0
	xlated 64B  not jited  memlock 4096B
20: cgroup_skb  tag 6deef7357e7b4530  gpl
	loaded_at 2019-10-25T06:12:41+0000  uid 0
	xlated 64B  not jited  memlock 4096B
21: cgroup_skb  tag 6deef7357e7b4530  gpl
	loaded_at 2019-10-25T06:12:41+0000  uid 0
	xlated 64B  not jited  memlock 4096B
22: cgroup_skb  tag 6deef7357e7b4530  gpl
	loaded_at 2019-10-25T06:12:41+0000  uid 0
	xlated 64B  not jited  memlock 4096B
83: xdp  name xdp_sock_prog  tag c85daa2f1b3c395f  gpl
	loaded_at 2019-10-28T00:00:38+0000  uid 0
	xlated 176B  not jited  memlock 4096B  map_ids 45,46
# bpftool map show
45: array  name qidconf_map  flags 0x0
	key 4B  value 4B  max_entries 1  memlock 4096B
46: xskmap  name xsks_map  flags 0x0
	key 4B  value 4B  max_entries 4  memlock 4096B
47: percpu_array  name rr_map  flags 0x0
	key 4B  value 4B  max_entries 1  memlock 4096B
```

The Linux 4.19 kernel BPF reference program samples/bpf/xdpsock_kern.c defines the queue configuration with the qidconf_map object, so that all packets received on that queue are redirected to user space through the BPF_MAP_TYPE_XSKMAP map object.

```c
// SPDX-License-Identifier: GPL-2.0
#define KBUILD_MODNAME "foo"
#include <uapi/linux/bpf.h>
#include "bpf_helpers.h"

/* Power-of-2 number of sockets */
#define MAX_SOCKS 4
/* Round-robin receive */
#define RR_LB 0

struct bpf_map_def SEC("maps") qidconf_map = {
	.type = BPF_MAP_TYPE_ARRAY,
	.key_size = sizeof(int),
	.value_size = sizeof(int),
	.max_entries = 1,
};

struct bpf_map_def SEC("maps") xsks_map = {
	.type = BPF_MAP_TYPE_XSKMAP,
	.key_size = sizeof(int),
	.value_size = sizeof(int),
	.max_entries = 4,
};

struct bpf_map_def SEC("maps") rr_map = {
	.type = BPF_MAP_TYPE_PERCPU_ARRAY,
	.key_size = sizeof(int),
	.value_size = sizeof(unsigned int),
	.max_entries = 1,
};
```

The eBPF program entry SEC("xdp_sock") takes xdp_md *ctx as input and returns the appropriate XDP action as output: XDP_REDIRECT, XDP_PASS or XDP_ABORTED in the example below. When ingress packets enter the kernel XDP program, packets received on the configured queue are redirected with bpf_redirect_map() to the AF_XDP socket stored at index idx of xsks_map, which places the raw packet data into that socket's UMEM and RX ring. If RR_LB is enabled at compile time, the per-CPU rr_map counter provides round-robin indexing over the BPF_MAP_TYPE_XSKMAP entries.

```c
SEC("xdp_sock")
int xdp_sock_prog(struct xdp_md *ctx)
{
	int *qidconf, key = 0, idx;
#if RR_LB /* NB! RR_LB is configured in xdpsock.h */
	unsigned int *rr;
#endif

	qidconf = bpf_map_lookup_elem(&qidconf_map, &key);
	if (!qidconf)
		return XDP_ABORTED;

	if (*qidconf != ctx->rx_queue_index)
		return XDP_PASS;

#if RR_LB /* NB! RR_LB is configured in xdpsock.h */
	rr = bpf_map_lookup_elem(&rr_map, &key);
	if (!rr)
		return XDP_ABORTED;

	*rr = (*rr + 1) & (MAX_SOCKS - 1);
	idx = *rr;
#else
	idx = 0;
#endif

	return bpf_redirect_map(&xsks_map, idx, 0);
}
```
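The round-robin update relies on MAX_SOCKS being a power of two, in which case (rr + 1) & (MAX_SOCKS - 1) is a cheap modulo. With any other value the mask breaks the cycle: with 6 sockets, for instance, the mask is 5 and the index only alternates between 0 and 1. A host-side sketch of the same arithmetic (rr_next() is a hypothetical name):

```c
#include <assert.h>

#define MAX_SOCKS 4 /* must be a power of two, as in xdpsock_kern.c */

_Static_assert((MAX_SOCKS & (MAX_SOCKS - 1)) == 0,
	       "MAX_SOCKS must be a power of two");

/* Same update as the RR_LB branch: keeps the index in [0, MAX_SOCKS). */
static unsigned int rr_next(unsigned int rr)
{
	return (rr + 1) & (MAX_SOCKS - 1);
}
```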

The Linux 5.4 reference BPF sample xdpsock_user.c provides more options, including a comparison of XDP_COPY and XDP_ZEROCOPY. It is available under /opt/xdp/bpf-examples/xdpsock_5.x:

```
/opt/xdp/bpf-examples# ./xdpsock_5.x -h
  Usage: xdpsock_5.x [OPTIONS]
  Options:
  -r, --rxdrop          Discard all incoming packets (default)
  -t, --txonly          Only send packets
  -l, --l2fwd           MAC swap L2 forwarding
  -i, --interface=n     Run on interface n
  -q, --queue=n         Use queue n (default 0)
  -p, --poll            Use poll syscall
  -S, --xdp-skb=n       Use XDP skb-mod
  -N, --xdp-native=n    Enfore XDP native mode
  -n, --interval=n      Specify statistics update interval (default 1 sec).
  -z, --zero-copy       Force zero-copy mode.
  -c, --copy            Force copy mode.
  -f, --frame-size=n    Set the frame size (must be a power of two, default is 4096).
  -m, --no-need-wakeup  Turn off use of driver need wakeup flag.
  -f, --frame-size=n    Set the frame size (must be a power of two in aligned mode, default is -2003886466).
  -u, --unaligned       Enable unaligned chunk placement
```
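The -c and -z options correspond to the XDP_COPY and XDP_ZEROCOPY flags of the sockaddr_xdp address passed to bind() on the AF_XDP socket, and -f requires a power-of-two frame size in aligned mode. The sketch below only prepares that address and validates the frame size, so it runs unprivileged; an actual bind() additionally needs CAP_NET_RAW, a UMEM registered on the socket and, for XDP_ZEROCOPY, a driver with zero-copy support. The helper names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>
#include <sys/socket.h>
#include <linux/if_xdp.h> /* struct sockaddr_xdp, XDP_COPY, XDP_ZEROCOPY */

#ifndef AF_XDP
#define AF_XDP 44 /* older libc headers may lack the definition */
#endif

/* Aligned-mode UMEM frame sizes must be a power of two (see -f). */
static bool frame_size_valid(uint32_t size)
{
	return size != 0 && (size & (size - 1)) == 0;
}

/* Prepares the bind() address for ifindex/queue_id, forcing copy (-c)
 * or zero-copy (-z) mode. */
static void xsk_bind_addr(struct sockaddr_xdp *sxdp, uint32_t ifindex,
			  uint32_t queue_id, bool zerocopy)
{
	memset(sxdp, 0, sizeof(*sxdp));
	sxdp->sxdp_family = AF_XDP;
	sxdp->sxdp_ifindex = ifindex;
	sxdp->sxdp_queue_id = queue_id;
	sxdp->sxdp_flags = zerocopy ? XDP_ZEROCOPY : XDP_COPY;
}
```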

XDP Sanity-Check Testing¶

Sanity-Check #1: Load & Execute eBPF offload XDP GENERIC_MODE program¶

Set up two or more ECI nodes according to the Intel® Ethernet Controllers TSN Enabling and Testing framework guidelines.

Generate UDP traffic between talker and listener

```
Listener:
# ip addr add 192.168.1.206/24 brd 192.168.1.255 dev enp1s0
# iperf3 -s
-----------------------------------------------------------
Server listening on 5201
-----------------------------------------------------------

Talker:
# ip addr add 192.168.1.203/24 brd 192.168.1.255 dev enp1s0
# iperf3 -c 192.168.1.206 -t 600 -b 0 -u -l 1448
Connecting to host 192.168.1.206, port 5201
[  5] local 192.168.1.203 port 36974 connected to 192.168.1.206 port 5201
[ ID] Interval           Transfer     Bitrate         Total Datagrams
[  5]   0.00-1.00   sec   114 MBytes   957 Mbits/sec  82590
[  5]   1.00-2.00   sec   114 MBytes   956 Mbits/sec  82540
```

Execute the precompiled BPF XDP program on the I210 device in XDP generic mode and check for successful XDP_PASS:

```
Listener:
# ./xdpdump -i enp1s0 -S
73717.493449 IP 192.168.1.203:36974 > 192.168.1.206:5201 UDP, length 1448
73717.493453 IP 192.168.1.203:36974 > 192.168.1.206:5201 UDP, length 1448
73717.493455 IP 192.168.1.203:36974 > 192.168.1.206:5201 UDP, length 1448
73717.493458 IP 192.168.1.203:36974 > 192.168.1.206:5201 UDP, length 1448
# ip link show dev enp1s0
3: enp1s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 xdpgeneric qdisc mq state UP mode DEFAULT group default qlen 1000
    link/ether 9c:69:b4:61:82:73 brd ff:ff:ff:ff:ff:ff
    prog/xdp id 18 tag a5fe55ab7ae19273
```

Press Ctrl+C to unload XDP program

```
# ./xdpdump -i enp1s0 -S
^Cunloading xdp program...
```

Sanity-Check #2: Load & Execute eBPF offload XDP DRV_MODE program¶

Set up two or more ECI nodes according to the Intel® Ethernet Controllers TSN Enabling and Testing framework guidelines.

Execute the precompiled BPF XDP program on the I210 device in XDP native mode and check for successful XDP_PASS:

```
Listener:
# ./xdpdump -i enp1s0 -N
673485.966036 0026 :0 > :0 , length 46
673486.626951 88cc :0 > :0 , length 236
673486.646948 TIMESYNC :0 > :0 , length 52
673487.646943 TIMESYNC :0 > :0 , length 52
...
673519.134258 IP 192.168.1.1:67 > 192.168.1.206:68 UDP, length 300
673519.136024 IP 192.168.1.1:67 > 192.168.1.206:68 UDP, length 300
673519.649768 TIMESYNC 192.168.1.1:0 > 192.168.1.206:0 , length 52
# ip link show dev enp1s0
3: enp1s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 xdp qdisc mq state UP mode DEFAULT group default qlen 1000
    link/ether 9c:69:b4:61:82:73 brd ff:ff:ff:ff:ff:ff
    prog/xdp id 80 tag a5fe55ab7ae19273
```

Generate UDP traffic between talker and listener

```
Listener:
# ip addr add 192.168.1.206/24 brd 192.168.1.255 dev enp1s0
# iperf3 -s
-----------------------------------------------------------
Server listening on 5201
-----------------------------------------------------------

Talker:
# ip addr add 192.168.1.203/24 brd 192.168.1.255 dev enp1s0
# iperf3 -c 192.168.1.206 -t 600 -b 0 -u -l 1448
Connecting to host 192.168.1.206, port 5201
[  5] local 192.168.1.203 port 52883 connected to 192.168.1.206 port 5201
[ ID] Interval           Transfer     Bitrate         Total Datagrams
[  5]   0.00-1.00   sec   114 MBytes   956 Mbits/sec  82540
[  5]   1.00-2.00   sec   114 MBytes   956 Mbits/sec  82570
```

Press Ctrl+C to unload XDP program

```
# ./xdpdump -i enp1s0 -N
^Cunloading xdp program...
```
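Sanity-Checks #1 and #2 tell the two attach modes apart by the keyword that ip link show prints after the MTU: xdpgeneric for SKB/generic mode (-S) and xdp for native driver mode (-N). A scripted check could classify a captured line as in this sketch; the keywords are taken from the outputs above, and xdp_mode_from_ip_link() is a hypothetical helper.

```c
#include <assert.h>
#include <string.h>

/* Classifies one `ip link show` line: "generic", "native", or NULL when
 * no XDP program is attached. The generic keyword is tested first since
 * " xdp " would not match " xdpgeneric " anyway, but this keeps intent clear. */
static const char *xdp_mode_from_ip_link(const char *line)
{
	if (strstr(line, " xdpgeneric "))
		return "generic";
	if (strstr(line, " xdp "))
		return "native";
	return NULL;
}
```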

Sanity-Check #3: Load & Execute AF_XDP socket in XDP_COPY mode¶

Set up two or more ECI nodes according to the Intel® Ethernet Controllers TSN Enabling and Testing framework guidelines.

Execute the precompiled BPF XDP program on the TGL stmmac-pci.ko or I210 igb.ko device driver in XDP_COPY native mode and check for successful RX:

```
/opt/xdp/bpf-examples# ./xdpsock_5.x -i enp0s30f4 -q 0 -N -c

 sock0@enp0s30f4:0 rxdrop xdp-drv
                pps         pkts        1.01
rx              0           0
tx              0           0

 sock0@enp0s30f4:0 rxdrop xdp-drv
                pps         pkts        1.00
rx              0           0
tx              0           0

 sock0@enp0s30f4:0 rxdrop xdp-drv
                pps         pkts        1.00
rx              0           0
tx              0           0

 sock0@enp0s30f4:0 rxdrop xdp-drv
                pps         pkts        1.00
rx              0           0
tx              0           0
^C
 sock0@enp0s30f4:0 rxdrop xdp-drv
                pps         pkts        0.47
rx              0           0
tx              0           0
/opt/xdp/bpf-examples# ip link show dev enp0s30f4
3: enp0s30f4: <BROADCAST,MULTICAST,DYNAMIC,UP,LOWER_UP> mtu 1500 xdp qdisc mq state UP mode DEFAULT group default qlen 1000
    link/ether 88:ab:cd:11:01:23 brd ff:ff:ff:ff:ff:ff
    prog/xdp id 29 tag 992d9ddc835e5629
/opt/xdp/bpf-examples# bpftool prog show
...
29: xdp  tag 992d9ddc835e5629
	loaded_at 2020-03-13T16:14:38+0000  uid 0
	xlated 176B  not jited  memlock 4096B  map_ids 1
/opt/xdp/bpf-examples# bpftool map
1: xskmap  name xsks_map  flags 0x0
	key 4B  value 4B  max_entries 6  memlock 4096B
```

Press Ctrl+C to unload AF_XDP program

Sanity-Check #4: Load & Execute AF_XDP socket in XDP_ZEROCOPY mode¶

Set up two or more ECI nodes according to the Intel® Ethernet Controllers TSN Enabling and Testing framework guidelines.

Execute the precompiled BPF XDP program on the TGL stmmac-pci.ko device driver in XDP_ZEROCOPY native mode and check for successful RX:

```
/opt/xdp/bpf-examples# ./xdpsock_5.x -i enp0s30f4 -q 0 -N -z

 sock0@enp0s30f4:0 rxdrop xdp-drv
                pps         pkts        1.01
rx              0           0
tx              0           0

 sock0@enp0s30f4:0 rxdrop xdp-drv
                pps         pkts        1.00
rx              0           0
tx              0           0

 sock0@enp0s30f4:0 rxdrop xdp-drv
                pps         pkts        1.00
rx              0           0
tx              0           0

 sock0@enp0s30f4:0 rxdrop xdp-drv
                pps         pkts        1.00
rx              0           0
tx              0           0
^C
 sock0@enp0s30f4:0 rxdrop xdp-drv
                pps         pkts        0.47
rx              0           0
tx              0           0
/opt/xdp/bpf-examples# ip link show dev enp0s30f4
3: enp0s30f4: <BROADCAST,MULTICAST,DYNAMIC,UP,LOWER_UP> mtu 1500 xdp qdisc mq state UP mode DEFAULT group default qlen 1000
    link/ether 88:ab:cd:11:01:23 brd ff:ff:ff:ff:ff:ff
    prog/xdp id 30 tag 992d9ddc835e5629
/opt/xdp/bpf-examples# bpftool prog show
...
30: xdp  tag 992d9ddc835e5629
	loaded_at 2020-03-13T16:04:37+0000  uid 0
	xlated 176B  not jited  memlock 4096B  map_ids 2
/opt/xdp/bpf-examples# bpftool map
2: xskmap  name xsks_map  flags 0x0
	key 4B  value 4B  max_entries 6  memlock 4096B
```

Press Ctrl+C to unload AF_XDP program