BPF Compiler Collection (BCC)

BPF Compiler Collection (BCC) makes it easy for developers to build and load BPF programs into the kernel directly from Python code. This can be used for XDP packet processing. More details can be found on the BCC web site https://github.com/iovisor/bcc.

By using BCC from a container in ECI, we can develop and test BPF programs attached to TSN NICs directly on the target without the need for a separate build system.

The following section is applicable to:

BCC Environment Setup

Important

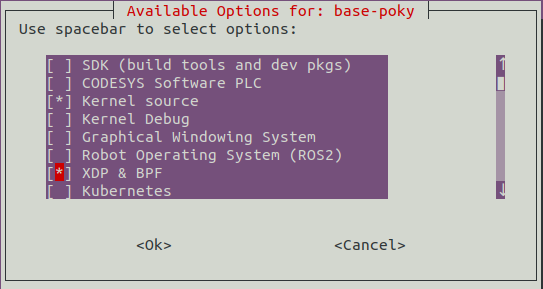

BPF Compiler Collection features must be enabled in the ECI image before BCC can be used. To create an ECI image that contains these features, select the XDP & BPF and Kernel source feature options during image setup. See section Building ECI for more information.

The setup relies on XDP support in the kernel and the BCC container.

Enable the XDP & BPF and Kernel source feature options during Setting up ECI Build, then follow the instructions to build and run Microservice: BPF Compiler Collection (BCC).

Example 1: BCC XDP redirect

This example is derived from the kernel selftest https://git.kernel.org/pub/scm/linux/kernel/git/torvalds/linux.git/tree/tools/testing/selftests/bpf/test_xdp_redirect.sh. It creates two network namespaces, ns1 and ns2, each holding one end of a veth pair whose other end stays in the root namespace, and forwards packets between the two root-namespace ends using generic XDP.

Create the veth devices and their peers in their respective namespaces with:

```shell
ip netns add ns1
ip netns add ns2
ip link add veth1 index 111 type veth peer name veth11 netns ns1
ip link add veth2 index 222 type veth peer name veth22 netns ns2
ip link set veth1 up
ip link set veth2 up
ip -n ns1 link set dev veth11 up
ip -n ns2 link set dev veth22 up
ip -n ns1 addr add 10.1.1.11/24 dev veth11
ip -n ns2 addr add 10.1.1.22/24 dev veth22
```
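If you need to rebuild this topology repeatedly, the same sequence can be scripted. The following is a minimal sketch, not part of ECI or BCC: `topology_commands` and `build_topology` are hypothetical helper names, and calling `build_topology(run=True)` assumes root privileges and iproute2 on the target. By default it only prints the commands (a dry run).

```python
import subprocess


def topology_commands():
    """Return the ip(8) commands that build the two-namespace veth topology."""
    cmds = [
        "ip netns add ns1",
        "ip netns add ns2",
        # Fixed ifindex values 111 and 222 let the XDP programs hard-code them.
        "ip link add veth1 index 111 type veth peer name veth11 netns ns1",
        "ip link add veth2 index 222 type veth peer name veth22 netns ns2",
        "ip link set veth1 up",
        "ip link set veth2 up",
        "ip -n ns1 link set dev veth11 up",
        "ip -n ns2 link set dev veth22 up",
        "ip -n ns1 addr add 10.1.1.11/24 dev veth11",
        "ip -n ns2 addr add 10.1.1.22/24 dev veth22",
    ]
    # Split into argv lists so subprocess.run needs no shell.
    return [c.split() for c in cmds]


def build_topology(run=False):
    """Print the commands (dry run) or execute them, stopping at the first failure."""
    for argv in topology_commands():
        if run:
            subprocess.run(argv, check=True)  # raises CalledProcessError on failure
        else:
            print(" ".join(argv))
```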

Without XDP redirect, verify that ping between the two namespaces fails in both directions, since nothing forwards traffic between veth1 and veth2 in the root namespace:

```
ip netns exec ns1 ping -c 1 10.1.1.22
PING 10.1.1.22 (10.1.1.22): 56 data bytes

--- 10.1.1.22 ping statistics ---
1 packets transmitted, 0 packets received, 100% packet loss
```

```
ip netns exec ns2 ping -c 1 10.1.1.11
PING 10.1.1.11 (10.1.1.11): 56 data bytes

--- 10.1.1.11 ping statistics ---
1 packets transmitted, 0 packets received, 100% packet loss
```
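When this check is scripted, the result can be read from the ping summary line. The sketch below is a hypothetical helper (`packet_loss` is not a BCC or ECI function). It assumes the summary format shown above and also accepts the iputils form, which prints `1 received` instead of `1 packets received`.

```python
import re

# Each field is searched for separately so that extra fields some ping
# implementations insert between them do not break parsing.
_TRANSMITTED = re.compile(r"(\d+) packets transmitted")
_RECEIVED = re.compile(r"(\d+) (?:packets )?received")
_LOSS = re.compile(r"(\d+(?:\.\d+)?)% packet loss")


def packet_loss(output):
    """Return (transmitted, received, loss_percent) parsed from ping output.

    Returns None if any part of the summary is missing.
    """
    tx = _TRANSMITTED.search(output)
    rx = _RECEIVED.search(output)
    loss = _LOSS.search(output)
    if not (tx and rx and loss):
        return None
    return int(tx.group(1)), int(rx.group(1)), float(loss.group(1))
```

For both runs above, `packet_loss(output)` returns `(1, 0, 100.0)`; once XDP redirect is active, the expected loss value is `0.0`.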

In another terminal, run the BCC container:

```shell
docker run -it --rm \
    --name bcc \
    --privileged \
    --net=host \
    -v /lib/modules/$(uname -r)/build:/lib/modules/host-build:ro \
    bcc
```

Inside the container, create a new file xdp_redirect.py with the following content:

```python
#!/usr/bin/python3
from bcc import BPF
import time

# Each XDP program redirects every received frame to a fixed ifindex:
# 111 is veth1 and 222 is veth2, as assigned when the devices were created.
b = BPF(text="""
#include <uapi/linux/bpf.h>

int xdp_redirect_to_111(struct xdp_md *xdp)
{
    return bpf_redirect(111, 0);
}

int xdp_redirect_to_222(struct xdp_md *xdp)
{
    return bpf_redirect(222, 0);
}
""", cflags=["-w"])

flags = (1 << 1)    # XDP_FLAGS_SKB_MODE (generic XDP)
#flags = (1 << 2)   # XDP_FLAGS_DRV_MODE (native/driver XDP)

# Frames arriving on veth1 are sent out of veth2, and vice versa.
b.attach_xdp("veth1", b.load_func("xdp_redirect_to_222", BPF.XDP), flags)
b.attach_xdp("veth2", b.load_func("xdp_redirect_to_111", BPF.XDP), flags)

print("BPF programs loaded and redirecting packets, hit CTRL+C to stop")
while True:
    try:
        time.sleep(1)
    except KeyboardInterrupt:
        print("Removing BPF programs")
        break

# Detach both programs so the interfaces return to normal operation.
b.remove_xdp("veth1", flags)
b.remove_xdp("veth2", flags)
```
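The script above hard-codes the target ifindex values 111 and 222 chosen when the veth devices were created. A hypothetical alternative is to generate the C source from a list of `(function_name, target_ifindex)` pairs, so the indexes can be looked up at runtime instead. `redirect_source` is an illustrative helper, not part of BCC; the flag values are those defined in the kernel header `linux/if_link.h`.

```python
import re

# XDP attach flags, values as defined in the kernel's linux/if_link.h.
XDP_FLAGS_SKB_MODE = 1 << 1  # generic XDP, works on any netdev
XDP_FLAGS_DRV_MODE = 1 << 2  # native XDP, requires driver support

_C_IDENT = re.compile(r"[A-Za-z_][A-Za-z0-9_]*\Z")


def redirect_source(routes):
    """Build BPF C source with one XDP program per (func_name, target_ifindex)."""
    parts = ["#include <uapi/linux/bpf.h>"]
    for func_name, ifindex in routes:
        if not _C_IDENT.match(func_name):
            raise ValueError(f"not a valid C identifier: {func_name!r}")
        parts.append(
            f"int {func_name}(struct xdp_md *xdp)\n"
            "{\n"
            # int() ensures only a number is pasted into the C source.
            f"    return bpf_redirect({int(ifindex)}, 0);\n"
            "}\n"
        )
    return "\n".join(parts)
```

On the target, the indexes could come from Python's `socket.if_nametoindex("veth2")` and `socket.if_nametoindex("veth1")`, and the result of `redirect_source(...)` passed as `text` to `BPF(...)`; the names given to `b.load_func` must then match the generated function names.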

Now run it with:

```
python3 xdp_redirect.py
BPF programs loaded and redirecting packets, hit CTRL+C to stop
```

Back in the first terminal, verify that ping between the two namespaces now succeeds in both directions, because the XDP programs redirect frames from veth1 to veth2 and vice versa:

```
ip netns exec ns1 ping -c 1 10.1.1.22
PING 10.1.1.22 (10.1.1.22): 56 data bytes
64 bytes from 10.1.1.22: seq=0 ttl=64 time=0.067 ms

--- 10.1.1.22 ping statistics ---
1 packets transmitted, 1 packets received, 0% packet loss
round-trip min/avg/max = 0.067/0.067/0.067 ms
```

```
ip netns exec ns2 ping -c 1 10.1.1.11
PING 10.1.1.11 (10.1.1.11): 56 data bytes
64 bytes from 10.1.1.11: seq=0 ttl=64 time=0.044 ms

--- 10.1.1.11 ping statistics ---
1 packets transmitted, 1 packets received, 0% packet loss
round-trip min/avg/max = 0.044/0.044/0.044 ms
```
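After stopping the script with CTRL+C, which detaches both XDP programs, the test topology can be removed. The following is a minimal sketch assuming root privileges and iproute2; `teardown_commands` and `teardown` are hypothetical helper names, not part of ECI or BCC. Deleting one end of a veth pair also deletes its peer, so removing veth1 and veth2 takes veth11 and veth22 with them before the namespaces are deleted.

```python
import subprocess


def teardown_commands():
    """Return the ip(8) commands that remove the example topology."""
    return [
        ["ip", "link", "del", "veth1"],  # also removes peer veth11 in ns1
        ["ip", "link", "del", "veth2"],  # also removes peer veth22 in ns2
        ["ip", "netns", "del", "ns1"],
        ["ip", "netns", "del", "ns2"],
    ]


def teardown(run=False):
    """Print the commands (dry run) or execute them."""
    for argv in teardown_commands():
        if run:
            # check=False: keep going if an object was already removed.
            subprocess.run(argv, check=False)
        else:
            print(" ".join(argv))
```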